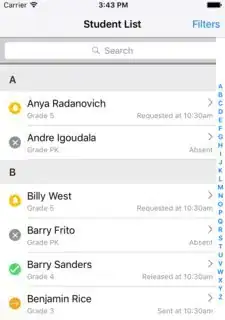

I'm working on a project to automatically rotate microscope image stacks of a fluid experiment so that they are lined up with images of the CAD template for the microfluidic chip. I am using the OpenCV package in Python for image processing. Having the correct rotational orientation is necessary so that the images can be masked properly for analysis. Our chips have markers filled with fluorescent dye that are visible in every frame. The template and a sample image look like the following (the template can be scaled to arbitrary size, but the relevant region of the images is typically ~100x100 pixels or so):

I have not been able to rotationally align the image to the CAD template. Typically, the misalignment between the CAD template and the images is less than a few degrees, which is still sufficient to interfere with analysis, so I need to be able to measure the rotational difference even if it is relatively small.
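To put a number on "relatively small", here is a rough back-of-the-envelope sketch. It assumes a marker sits about 50 px from the center of rotation in the unscaled ~100x100 region; that radius and the helper name `edge_shift_px` are illustrative assumptions, not measurements from my data.

```python
import math


def edge_shift_px(angle_deg, radius_px):
    """Distance (chord length) moved by a point at radius_px
    when the image is rotated by angle_deg about the center."""
    return 2.0 * radius_px * math.sin(math.radians(angle_deg) / 2.0)


# Assumed radius: half of a ~100 px wide region of interest.
for angle in (0.5, 1.0, 2.0):
    print(f"{angle:.1f} deg -> {edge_shift_px(angle, 50):.2f} px")
```

Under that assumption, a 1 degree misalignment moves a marker at the edge by less than one pixel in the original image, so whatever method I use has to localize features to sub-pixel accuracy at the native resolution.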

Based on examples I found online, I am using this procedure:

- Scale up the image to approximately the same size as the template (~800 x 800) using cubic interpolation.
- Threshold both images using Otsu's method.
- Find keypoints and extract descriptors using a built-in method (I've tried ORB, AKAZE, and BRIEF).
- Match descriptors using a brute-force matcher with Hamming distance.
- Take the best matches and use them to compute a partial affine transformation matrix.
- Use that matrix to infer a rotational shift, warping one image onto the other as a check.
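For the last step, this is a minimal sketch of how the rotation could be read off the matrix. It assumes the 2x3 matrix has the similarity form `[[s*cos(t), -s*sin(t), tx], [s*sin(t), s*cos(t), ty]]`, which is the form a partial affine (rotation, uniform scale, translation) estimate should have. The helper name `rotation_from_partial_affine` is hypothetical, and the test matrix is synthetic rather than taken from my images.

```python
import numpy as np


def rotation_from_partial_affine(M):
    """Return (angle_deg, scale) from a 2x3 similarity matrix of the form
    [[s*cos(t), -s*sin(t), tx],
     [s*sin(t),  s*cos(t), ty]]."""
    M = np.asarray(M, dtype=float)
    angle_deg = np.degrees(np.arctan2(M[1, 0], M[0, 0]))
    scale = np.hypot(M[0, 0], M[1, 0])
    return float(angle_deg), float(scale)


# Synthetic check: build a matrix with a known 2 degree rotation and 5% scaling.
theta = np.radians(2.0)
s = 1.05
M_test = np.array([[s * np.cos(theta), -s * np.sin(theta), 3.0],
                   [s * np.sin(theta),  s * np.cos(theta), -1.5]])
angle, scale = rotation_from_partial_affine(M_test)
print(angle, scale)
```

The sign of the angle depends on the axis convention (image y points down, and `cv2.getRotationMatrix2D` treats positive angles as counter-clockwise), so it is worth checking the sign against an image rotated by a known amount.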

Here's a sample of my code (borrowed in part from here):

    import numpy as np
    import cv2
    import matplotlib.pyplot as plt

    MAX_FEATURES = 500
    GOOD_MATCH_PERCENT = 0.5


    def alignImages(im1, im2, returnpoints=False):
        # Detect ORB features and compute descriptors.
        # Patch size and edge threshold are ~10% of the mean image dimension.
        size1 = int(0.1 * np.mean(np.shape(im1)))
        size2 = int(0.1 * np.mean(np.shape(im2)))
        orb1 = cv2.ORB_create(MAX_FEATURES, edgeThreshold=size1, patchSize=size1)
        orb2 = cv2.ORB_create(MAX_FEATURES, edgeThreshold=size2, patchSize=size2)
        keypoints1, descriptors1 = orb1.detectAndCompute(im1, None)
        keypoints2, descriptors2 = orb2.detectAndCompute(im2, None)

        # Brute-force matching with Hamming distance (ORB descriptors are binary).
        matcher = cv2.BFMatcher(cv2.NORM_HAMMING, crossCheck=True)
        matches = matcher.match(descriptors1, descriptors2)

        # Sort matches by score (lowest distance first).
        # sorted() is used because match() may return a tuple in newer OpenCV versions.
        matches = sorted(matches, key=lambda x: x.distance)

        # Remove not so good matches
        numGoodMatches = int(len(matches) * GOOD_MATCH_PERCENT)
        matches = matches[:numGoodMatches]

        # Draw top matches
        imMatches = cv2.drawMatches(im1, keypoints1, im2, keypoints2, matches, None)
        cv2.imwrite("matches.jpg", imMatches)

        # Extract location of good matches
        points1 = np.zeros((len(matches), 2), dtype=np.float32)
        points2 = np.zeros((len(matches), 2), dtype=np.float32)
        for i, match in enumerate(matches):
            points1[i, :] = keypoints1[match.queryIdx].pt
            points2[i, :] = keypoints2[match.trainIdx].pt

        # Estimate a partial affine transform (rotation, uniform scale, translation)
        M, inliers = cv2.estimateAffinePartial2D(points1, points2)

        # Warp im1 into the frame of im2
        height, width = im2.shape
        im1Reg = cv2.warpAffine(im1, M, (width, height))
        return im1Reg, M


    if __name__ == "__main__":
        # cv2.imread returns BGR, so convert with COLOR_BGR2GRAY
        test_template = cv2.cvtColor(cv2.imread("test_CAD_cropped.png"), cv2.COLOR_BGR2GRAY)
        test_image = cv2.cvtColor(cv2.imread("test_CAD_cropped.png"), cv2.COLOR_BGR2GRAY)

        # Scale the image up to roughly the template size
        fx = fy = 88 / 923
        test_image_big = cv2.resize(test_image, (0, 0), fx=1/fx, fy=1/fy, interpolation=cv2.INTER_CUBIC)

        # Binarize both images with Otsu's method
        ret, imRef_t = cv2.threshold(test_template, 0, 255, cv2.THRESH_BINARY + cv2.THRESH_OTSU)
        ret, test_big_t = cv2.threshold(test_image_big, 0, 255, cv2.THRESH_BINARY + cv2.THRESH_OTSU)

        imReg, M = alignImages(test_big_t, imRef_t)

        fig, ax = plt.subplots(nrows=2, ncols=2, figsize=(8, 8))
        ax[1,0].imshow(imReg)
        ax[1,0].set_title("Warped Image")
        ax[0,0].imshow(imRef_t)
        ax[0,0].set_title("Template")
        ax[0,1].imshow(test_big_t)
        ax[0,1].set_title("Thresholded Image")
        # absdiff avoids uint8 wrap-around when subtracting
        ax[1,1].imshow(cv2.absdiff(imRef_t, imReg))
        ax[1,1].set_title("Diff")
        plt.show()

In this example, I get the following bad transformation because there are only 3 matching keypoints and they are all incorrect:

I find that, regardless of my keypoint/descriptor parameters, I tend to get too few "good" features. Is there anything I can do to pre-process my images so that I get good features more reliably, or is there a better method to align my images to this template that doesn't involve keypoint matching? The specific application of this experiment means that I can't use patented keypoint extractors/descriptors such as SURF and SIFT.