I want to compile a TensorFlow graph into a Movidius graph. I used the Model Zoo's ssd_mobilenet_v1_coco model and trained it on my own dataset.

Then I ran:

```shell
python object_detection/export_inference_graph.py \
    --input_type=image_tensor \
    --pipeline_config_path=/home/redtwo/nsir/ssd_mobilenet_v1_coco.config \
    --trained_checkpoint_prefix=/home/redtwo/nsir/train/model.ckpt-3362 \
    --output_directory=/home/redtwo/nsir/output
```

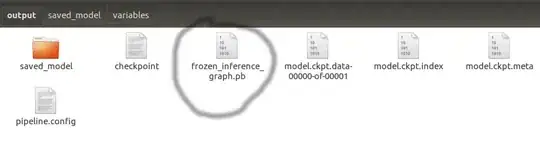

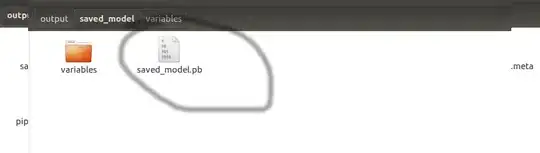

which generates `frozen_inference_graph.pb` and `saved_model/saved_model.pb`.

Now I want to convert this model into a Movidius graph. The Movidius docs give these steps (for Inception V3):

Export GraphDef file (with `slim/export_inference_graph.py`, which writes `inception_v3.pb`)

Freeze model for inference:

```shell
python3 ../tensorflow/tensorflow/python/tools/freeze_graph.py \
    --input_graph=inception_v3.pb \
    --input_binary=true \
    --input_checkpoint=inception_v3.ckpt \
    --output_graph=inception_v3_frozen.pb \
    --output_node_names=InceptionV3/Predictions/Reshape_1
```
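As far as I understand it, freezing needs the output node names because `freeze_graph.py` first keeps only the nodes those outputs depend on and then turns the remaining variables into constants (in TF 1.x, `convert_variables_to_constants` does the pruning via `extract_sub_graph`). A minimal pure-Python sketch of that pruning step, assuming a toy graph given as a hypothetical `{node_name: [input_names]}` dict instead of a real `GraphDef`:

```python
from collections import deque
from typing import Dict, List, Set


def _node_name(ref: str) -> str:
    """Strip a control-dependency prefix ('^') and an output index (':1')."""
    return ref.lstrip("^").split(":")[0]


def reachable_subgraph(graph: Dict[str, List[str]], outputs: List[str]) -> Set[str]:
    """Return every node that the given output nodes (transitively) depend on.

    `graph` maps a node name to the names of its inputs, mirroring the
    `node.name` / `node.input` fields of a TensorFlow GraphDef.
    """
    keep: Set[str] = set()
    queue = deque(_node_name(o) for o in outputs)
    while queue:
        name = queue.popleft()
        if name in keep:
            continue
        if name not in graph:
            raise KeyError(f"node {name!r} not found in graph")
        keep.add(name)
        queue.extend(_node_name(i) for i in graph[name])
    return keep


# Toy graph: the training-only branch ("labels", "loss", "train_op") is dropped.
toy = {
    "input": [],
    "weights": [],
    "conv": ["input", "weights"],
    "Predictions/Reshape_1": ["conv"],
    "labels": [],
    "loss": ["Predictions/Reshape_1", "labels"],
    "train_op": ["loss", "^weights"],
}
print(sorted(reachable_subgraph(toy, ["Predictions/Reshape_1"])))
# ['Predictions/Reshape_1', 'conv', 'input', 'weights']
```

This is why a wrong or missing output node name either fails or produces a graph without the part you need.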

The frozen graph can finally be fed to the Intel Movidius NCSDK compiler:

```shell
mvNCCompile -s 12 inception_v3_frozen.pb -in=input -on=InceptionV3/Predictions/Reshape_1
```
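The `-in` and `-on` values given to `mvNCCompile` have to be node names that exist in the frozen graph. A small sketch with a hypothetical helper `build_mvnc_command` that uses only the flags from the command above (`-s`, `-in`, `-on`) and refuses to build the command if a name is missing; actually running it assumes the NCSDK is installed:

```python
from typing import Iterable, List


def build_mvnc_command(graph_file: str, node_names: Iterable[str],
                       input_node: str, output_node: str,
                       shaves: int = 12) -> List[str]:
    """Build an mvNCCompile argument list after checking the node names."""
    known = set(node_names)
    missing = [n for n in (input_node, output_node) if n not in known]
    if missing:
        raise ValueError(f"nodes not in graph: {missing}")
    return ["mvNCCompile", "-s", str(shaves), graph_file,
            f"-in={input_node}", f"-on={output_node}"]


cmd = build_mvnc_command("inception_v3_frozen.pb",
                         ["input", "InceptionV3/Predictions/Reshape_1"],
                         "input", "InceptionV3/Predictions/Reshape_1")
print(" ".join(cmd))
# mvNCCompile -s 12 inception_v3_frozen.pb -in=input -on=InceptionV3/Predictions/Reshape_1
# To compile for real (needs the NCSDK): import subprocess; subprocess.run(cmd, check=True)
```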

All of this is described on the Intel Movidius website: https://movidius.github.io/ncsdk/tf_modelzoo.html

My model is already trained and exported (`output/frozen_inference_graph.pb`). Why would I freeze it again using `slim/export_inference_graph.py`? Or is `output/saved_model/saved_model.pb` what should go as input to `slim/export_inference_graph.py`?
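One way to check whether a `.pb` is already frozen is to look for variable ops: a frozen graph stores its weights as `Const` nodes, while an unfrozen one still contains variable ops that need a checkpoint. A sketch, assuming the op types were already read from the GraphDef (e.g. `[n.op for n in graph_def.node]` in TF 1.x); the set of variable op names is my assumption and not exhaustive:

```python
from typing import Iterable

# Assumed TF 1.x op types that hold variables (not an exhaustive list).
VARIABLE_OPS = {"Variable", "VariableV2", "VarHandleOp"}


def looks_frozen(op_types: Iterable[str]) -> bool:
    """True if no op in the graph is a variable op."""
    return not any(op in VARIABLE_OPS for op in op_types)


print(looks_frozen(["Placeholder", "Const", "Conv2D", "Softmax"]))       # True
print(looks_frozen(["Placeholder", "VariableV2", "Identity", "Conv2D"]))  # False
```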

All I need is the equivalent of `output_node_names=InceptionV3/Predictions/Reshape_1` for my model. How do I find this output node name? It looks like a directory structure, and I don't know what the graph contains.

What output node should I use for the Model Zoo's ssd_mobilenet_v1_coco model (trained on my own custom dataset)?
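A rough way to find candidate output nodes is to list the "sinks": nodes that no other node uses as an input. This is a heuristic sketch (unused leftover nodes also show up as sinks); `load_graph` assumes the TF 1.x `tf.GraphDef` / `tf.gfile.GFile` API and a binary `.pb`. The toy graph uses the names I believe the Object Detection API exporter gives its outputs (`detection_boxes`, `detection_scores`, `detection_classes`, `num_detections`), but the real graph should be checked:

```python
from typing import Dict, List


def candidate_output_nodes(graph: Dict[str, List[str]]) -> List[str]:
    """Return nodes that no other node uses as an input (graph 'sinks')."""
    consumed = {ref.lstrip("^").split(":")[0]
                for inputs in graph.values() for ref in inputs}
    return sorted(name for name in graph if name not in consumed)


def load_graph(pb_path: str) -> Dict[str, List[str]]:
    """Read a binary frozen GraphDef into {node_name: [inputs]} (TF 1.x API)."""
    import tensorflow as tf  # imported lazily; only needed for real .pb files
    graph_def = tf.GraphDef()
    with tf.gfile.GFile(pb_path, "rb") as f:
        graph_def.ParseFromString(f.read())
    return {node.name: list(node.input) for node in graph_def.node}


# Toy graph shaped like a detection export (intermediate names are illustrative).
toy = {
    "image_tensor": [],
    "Preprocessor/sub": ["image_tensor"],
    "Postprocessor/nms": ["Preprocessor/sub"],
    "detection_boxes": ["Postprocessor/nms"],
    "detection_scores": ["Postprocessor/nms:1"],
    "detection_classes": ["Postprocessor/nms:2"],
    "num_detections": ["Postprocessor/nms:3"],
}
print(candidate_output_nodes(toy))
# ['detection_boxes', 'detection_classes', 'detection_scores', 'num_detections']
```

Usage on the real export would be `candidate_output_nodes(load_graph("output/frozen_inference_graph.pb"))`.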

The generic freeze command looks like this:

```shell
python freeze_graph.py \
    --input_graph=/path/to/graph.pbtxt \
    --input_checkpoint=/path/to/model.ckpt-22480 \
    --input_binary=false \
    --output_graph=/path/to/frozen_graph.pb \
    --output_node_names="the nodes that you want to output e.g. InceptionV3/Predictions/Reshape_1 for Inception V3 "
```

What I understand and what I don't:

- `input_checkpoint`: ✓ (checkpoints that were created during training)
- `output_graph`: ✓ (path to the output frozen graph)
- `output_node_names`: X

I don't understand the `output_node_names` parameter or what should go in it, given that my model is ssd_mobilenet, not inception_v3.
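For reference, `freeze_graph.py` splits `--output_node_names` on commas and expects node names, not tensor names, so a suffix like `:0` has to be dropped. A small sketch with a hypothetical helper that turns tensor names into that flag value; the detection tensor names in the usage are an assumption to be checked against the actual graph:

```python
from typing import Iterable


def to_output_node_names(tensor_names: Iterable[str]) -> str:
    """Turn tensor names like 'detection_boxes:0' into the comma-separated
    node-name string passed to --output_node_names."""
    nodes = []
    for name in tensor_names:
        node = name.split(":")[0]  # 'detection_boxes:0' -> 'detection_boxes'
        if node not in nodes:      # keep order, drop duplicates
            nodes.append(node)
    return ",".join(nodes)


flag = to_output_node_names(["detection_boxes:0", "detection_scores:0",
                             "detection_classes:0", "num_detections:0"])
print(f"--output_node_names={flag}")
# --output_node_names=detection_boxes,detection_scores,detection_classes,num_detections
```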

System information

- What is the top-level directory of the model you are using:

- Have I written custom code (as opposed to using a stock example script provided in TensorFlow):

- OS Platform and Distribution (e.g., Linux Ubuntu 16.04): Linux Ubuntu 16.04

- TensorFlow installed from (source or binary): TensorFlow installed with pip

- TensorFlow version (use command below): 1.13.1

- Bazel version (if compiling from source):

- CUDA/cuDNN version: V10.1.168/7.*

- GPU model and memory: GeForce RTX 2080 Ti, 11 GB

- Exact command to reproduce: