I am trying to use nvprof to monitor GPU performance. I would like to know the time spent on HtoD (host-to-device) transfers, DtoH (device-to-host) transfers, and kernel execution on the device. It works well with this standard example from the Numba CUDA documentation:

```python
from numba import cuda


@cuda.jit
def add_kernel(x, y, out):
    tx = cuda.threadIdx.x  # this is the unique thread ID within a 1D block
    ty = cuda.blockIdx.x  # Similarly, this is the unique block ID within the 1D grid
    block_size = cuda.blockDim.x  # number of threads per block
    grid_size = cuda.gridDim.x  # number of blocks in the grid

    start = tx + ty * block_size
    stride = block_size * grid_size

    # assuming x and y inputs are same length
    for i in range(start, x.shape[0], stride):
        out[i] = x[i] + y[i]


if __name__ == "__main__":
    import numpy as np

    n = 100000
    x = np.arange(n).astype(np.float32)
    y = 2 * x
    out = np.empty_like(x)

    threads_per_block = 128
    blocks_per_grid = 30
    add_kernel[blocks_per_grid, threads_per_block](x, y, out)
    print(out[:10])
```

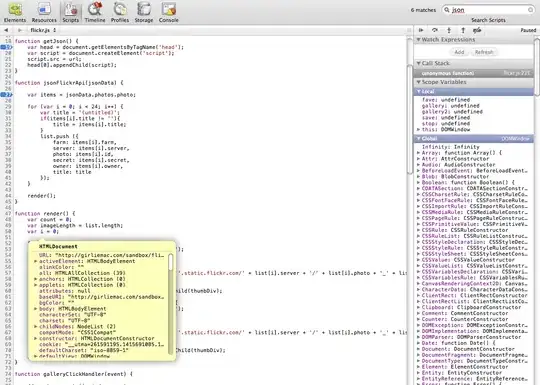

Here is the out come from nvprfo:

However, when I run the same kernel inside a `multiprocessing` child process with the following code:

```python
import multiprocessing as mp

from numba import cuda


def fun():
    @cuda.jit
    def add_kernel(x, y, out):
        tx = cuda.threadIdx.x  # this is the unique thread ID within a 1D block
        ty = cuda.blockIdx.x  # Similarly, this is the unique block ID within the 1D grid
        block_size = cuda.blockDim.x  # number of threads per block
        grid_size = cuda.gridDim.x  # number of blocks in the grid

        start = tx + ty * block_size
        stride = block_size * grid_size

        # assuming x and y inputs are same length
        for i in range(start, x.shape[0], stride):
            out[i] = x[i] + y[i]

    import numpy as np

    n = 100000
    x = np.arange(n).astype(np.float32)
    y = 2 * x
    out = np.empty_like(x)

    threads_per_block = 128
    blocks_per_grid = 30
    add_kernel[blocks_per_grid, threads_per_block](x, y, out)
    print(out[:10])
    return out


if __name__ == "__main__":
    # check gpu condition
    p = mp.Process(target=fun)
    p.daemon = True
    p.start()
    p.join()
```

nvprof appears to attach to the process, but it doesn't output anything, even though it reports that it is profiling.

Furthermore, when I use Ray (a framework for distributed computing) inside the child process:

```python
if __name__ == "__main__":
    import multiprocessing

    def fun():
        from numba import cuda
        import ray

        @ray.remote(num_gpus=1)
        def call_ray():
            @cuda.jit
            def add_kernel(x, y, out):
                tx = cuda.threadIdx.x  # this is the unique thread ID within a 1D block
                ty = cuda.blockIdx.x  # Similarly, this is the unique block ID within the 1D grid
                block_size = cuda.blockDim.x  # number of threads per block
                grid_size = cuda.gridDim.x  # number of blocks in the grid

                start = tx + ty * block_size
                stride = block_size * grid_size

                # assuming x and y inputs are same length
                for i in range(start, x.shape[0], stride):
                    out[i] = x[i] + y[i]

            import numpy as np

            n = 100000
            x = np.arange(n).astype(np.float32)
            y = 2 * x
            out = np.empty_like(x)

            threads_per_block = 128
            blocks_per_grid = 30
            add_kernel[blocks_per_grid, threads_per_block](x, y, out)
            print(out[:10])
            return out

        ray.shutdown()
        ray.init(redis_address="***")
        out = ray.get(call_ray.remote())

    # check gpu condition
    p = multiprocessing.Process(target=fun)
    p.daemon = True
    p.start()
    p.join()
```

nvprof doesn't show anything at all! It doesn't even print the line saying that it is profiling the process, although the code does execute.

Does anyone know how to fix this? Alternatively, is there another way to collect these timings for distributed computation?