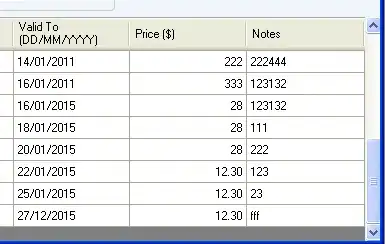

I have a dataset contains 'UI' (unique id), time, frequency (frequency for give value in UI column), as it is shown here:

What I would like to add a new column named 'daily_frequency' which simply counts each unique value in UI column for a given day sequentially as I show in the image below.

For example if UI=114737 and it is repeated 2 times in one day, we should have 1, and 2 in the daily_frequency column.

I could do that with Python and Panda package using group by and cumcount methods as follows ...

df['daily_frequency'] = df.groupby(['UI','day']).cumcount()+1

However, for some reason, I must do this via SQL queries (Amazon Redshift).