I'm trying to find the per-pixel transformation that fits one image (plus a background) to a desired result: the background image plus the transformed input image should reproduce the goal image.

To achieve this I am using PyTorch's `grid_sample` and autograd to optimize the sampling grid. The transformed input is added to the unchanged background.

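    import torch
    import torchvision
    from PIL import Image

    ToTensor = torchvision.transforms.ToTensor()
    FromTensor = torchvision.transforms.ToPILImage()

    background = ToTensor(Image.open("backround.png"))
    pic = ToTensor(Image.open("pic.png"))
    goal = ToTensor(Image.open("goal.png"))

    # Add a batch dimension: (C, H, W) -> (1, 3, H, W)
    background = background.expand(1, 3, background.size()[1], background.size()[2])
    pic = pic.expand(1, 3, pic.size()[1], pic.size()[2])
    goal = goal.expand(1, 3, goal.size()[1], goal.size()[2])

    def createIdentityGrid(w, h):
        # grid_sample expects shape (N, H_out, W_out, 2); the last dim holds (x, y) in [-1, 1]
        grid = torch.zeros(1, h, w, 2)
        for row in range(h):
            for col in range(w):
                grid[0][row][col][0] = 2 / w * (0.5 + col) - 1  # x of the pixel centre
                grid[0][row][col][1] = 2 / h * (0.5 + row) - 1  # y of the pixel centre
        return grid

    w, h = 256, 256  # hardcoded image size

    grid = createIdentityGrid(w, h)
    grid.requires_grad = True

    for i in range(300):
        # Warp the input with the current grid; [0] drops the batch dimension.
        # align_corners=False matches the pixel-centre coordinates used above.
        goal_pred = torch.nn.functional.grid_sample(pic, grid, align_corners=False)[0]
        # Add the warped input to the unchanged background
        goal_pred = (background + 0.75 * goal_pred).clamp(min=0, max=1)
        out = goal_pred
        loss = (goal_pred - goal).pow(2).sum()
        loss.backward()
        # Plain gradient-descent step on the grid itself
        with torch.no_grad():
            grid -= grid.grad * (1e-2)
            grid.grad.zero_()

    FromTensor(out[0].detach()).show()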
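
As an aside, the double loop in `createIdentityGrid` can probably be replaced by a built-in. This is only a minimal sketch, assuming PyTorch 1.3 or newer (where `align_corners` is available); the helper name `identity_grid` is hypothetical and not part of my code above. With `align_corners=False`, `affine_grid` should produce the same pixel-centre coordinates as the loop:

```python
import torch
import torch.nn.functional as F

def identity_grid(h, w):
    # Identity affine transform for a batch of one, shape (1, 2, 3)
    theta = torch.tensor([[[1.0, 0.0, 0.0],
                           [0.0, 1.0, 0.0]]])
    # Result has shape (1, h, w, 2); the last dim holds (x, y) in [-1, 1]
    return F.affine_grid(theta, size=(1, 3, h, w), align_corners=False)

grid = identity_grid(256, 256)
grid.requires_grad = True  # optimize the grid directly, as in the loop above
```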

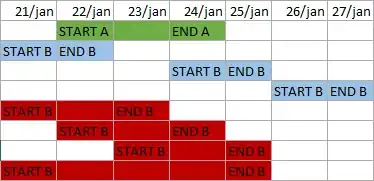

These are the results:

It actually works for this easy example, but I observe some weird behaviour: the grid only starts to change on one side. Why is that, and why doesn't the whole grid change right away? Is there something obvious I am missing?
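
To narrow this down, it may help to look at which grid points receive any gradient at all after a single backward pass. The following is a minimal sketch with synthetic stand-in tensors: the random `pic` and `goal`, the all-zero `background`, the 32x32 size, and the name `grad_mag` are assumptions for illustration, not my actual images.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
h, w = 32, 32

# Synthetic stand-ins for the real images (assumption, for illustration only)
pic = torch.rand(1, 3, h, w)
goal = torch.rand(1, 3, h, w)
background = torch.zeros(1, 3, h, w)

# Identity sampling grid with pixel-centre coordinates, shape (1, h, w, 2)
theta = torch.tensor([[[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]])
grid = F.affine_grid(theta, size=(1, 3, h, w), align_corners=False)
grid.requires_grad = True

# Same forward pass and loss as in the training loop
warped = F.grid_sample(pic, grid, align_corners=False)
pred = (background + 0.75 * warped).clamp(min=0, max=1)
loss = (pred - goal).pow(2).sum()
loss.backward()

# Gradient magnitude per grid point, shape (h, w)
grad_mag = grid.grad[0].norm(dim=-1)
nonzero_fraction = (grad_mag > 0).float().mean().item()
print("fraction of grid points with a nonzero gradient:", nonzero_fraction)
```

Running the same check on the real images and displaying `grad_mag` as an image should show where the updates come from. Grid points whose gradient is zero (for example where `pic` is locally constant, or where the sum is clipped by `clamp`) cannot move in that step.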