I am trying to run a training job on Google Cloud ML Engine. I submit the job with:

    gcloud ml-engine jobs submit training `whoami`_object_detection_`date +%s` \
        --job-dir=gs://${YOUR_GCS_BUCKET}/train \
        --packages dist/object_detection-0.1.tar.gz,slim/dist/slim-0.1.tar.gz,/tmp/pycocotools/pycocotools-2.0.tar.gz \
        --module-name object_detection.model_tpu_main \
        --runtime-version 1.13 \
        --scale-tier BASIC_TPU \
        --region us-central1 \
        -- \
        --model_dir=gs://${YOUR_GCS_BUCKET}/train \
        --tpu_zone us-central1 \
        --pipeline_config_path=gs://${YOUR_GCS_BUCKET}/data/pipeline.config

However, after the job is created and all the required packages are installed, I start to repeatedly get these messages:

until the job fails with this output:

I have already tried this, this and this without any success.

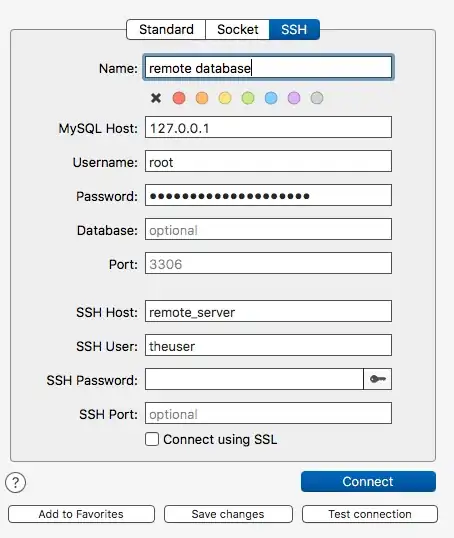

I suspect the problem is related to authentication, so I followed this tutorial, but that didn't help.

Any help is greatly appreciated!