I am currently teaching myself the basics of neural networks, working through the very good PDF from http://neuralnetworksanddeeplearning.com/chap1.html

I have also done a few of the exercises, but there is one I really don't understand, or at least one step of its solution.

Task:

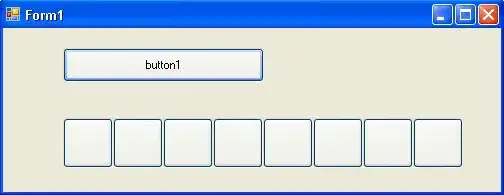

There is a way of determining the bitwise representation of a digit by adding an extra layer to the three-layer network above. The extra layer converts the output from the previous layer into a binary representation, as illustrated in the figure below. Find a set of weights and biases for the new output layer. Assume that the first 3 layers of neurons are such that the correct output in the third layer (i.e., the old output layer) has activation at least 0.99, and incorrect outputs have activation less than 0.01.

I also found a solution, which can be seen in the second image.

I understand why the matrix has to have this shape, but I struggle to understand the step where the author of the solution calculates

0.99 + 3*0.01

4*0.01

I really don't understand these two steps. I would be very happy if someone could help me understand this calculation.
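For context, here is a minimal sketch of one way the two expressions could arise. It assumes (this is not taken from the solution image) that the new layer uses 0/1 weights, with a 1 wherever the digit has the given bit set, and that the old output activations sit exactly at the bounds 0.99 and 0.01 stated in the task. The names `W`, `HI`, `LO`, and `weighted_input` are hypothetical.

```python
# Assumed 0/1 weight matrix (an illustration, not the matrix from the solution image):
# W[bit][digit] is 1 if that bit is set in the binary form of the digit, else 0.
W = [[(d >> b) & 1 for d in range(10)] for b in range(4)]

HI = 0.99  # activation of the correct old output neuron (boundary value from the task)
LO = 0.01  # activation of each incorrect old output neuron (boundary value from the task)


def weighted_input(bit, correct_digit):
    """Weighted sum arriving at one new output neuron, before any bias."""
    activations = [HI if d == correct_digit else LO for d in range(10)]
    return sum(w * a for w, a in zip(W[bit], activations))


# Bit 1 (value 2) is set in the digits 2, 3, 6 and 7, so its row has four ones.
print(f"{weighted_input(1, 2):.2f}")  # correct digit is connected: 0.99 + 3*0.01 -> 1.02
print(f"{weighted_input(1, 0):.2f}")  # correct digit is not connected: 4*0.01 -> 0.04
```

Under these assumptions, a neuron connected to four digits receives 0.99 plus three stray contributions of 0.01 when its bit should be on, and only four stray contributions of 0.01 when its bit should be off.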

Thank you very much for your help.