I have read several materials on deep Q-learning and I'm not sure I understand it completely. From what I learned, instead of storing the Q-values in a table, deep Q-learning approximates them with a neural network (NN) trained as a regression: it calculates a loss and backpropagates the error to update the weights. Then, in a testing scenario, the NN takes a state as input and returns one Q-value for each action possible in that state, and the action with the highest Q-value is the one chosen for that state.
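To check my understanding of the testing step, here is a minimal sketch of what I think happens: a state goes in, one Q-value per action comes out, and the largest one decides the action. The layer sizes, the names `q_values` and `greedy_action`, and the example state are all made up by me for illustration, and the network is untrained.

```python
import random

random.seed(0)

# Hypothetical sizes, chosen only for illustration
STATE_DIM, HIDDEN, N_ACTIONS = 4, 8, 3

def random_matrix(rows, cols):
    """Small random weights, as in a freshly initialised network."""
    return [[random.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

W1 = random_matrix(HIDDEN, STATE_DIM)    # input  -> hidden
W2 = random_matrix(N_ACTIONS, HIDDEN)    # hidden -> one output per action

def q_values(state):
    """Forward pass: returns a list with one Q-value per action."""
    hidden = [max(0.0, sum(w * x for w, x in zip(row, state))) for row in W1]  # ReLU
    return [sum(w * h for w, h in zip(row, hidden)) for row in W2]

def greedy_action(state):
    """Index of the action with the highest predicted Q-value."""
    q = q_values(state)
    return max(range(len(q)), key=q.__getitem__)

state = [0.5, -1.0, 0.25, 2.0]           # made-up state
print(q_values(state))                   # one number per action
print(greedy_action(state))              # the action that would be taken
```

As far as I can tell, no table is involved at this point: the Q-values only exist as outputs of the forward pass.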

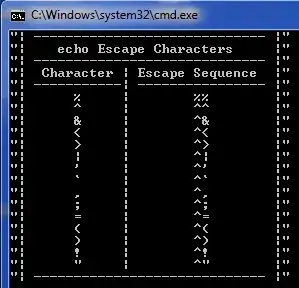

My only question is how the weights are updated. According to this site the weights are updated as follows:
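The formula itself did not come through here. Judging from the symbols I ask about below, I assume it is the usual gradient-based Q-learning update, which I would write as follows (the primed action $a'$ inside the max is my own notation, and this is my reconstruction rather than a quote from the site):

```latex
\Delta w = \alpha \left[ R + \gamma \max_{a'} Q(s', a', w) - Q(s, a, w) \right] \nabla_w Q(s, a, w)
```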

I understand that the weights are initialized randomly, R is returned by the environment, and gamma and alpha are set manually, but I don't understand how Q(s',a,w) and Q(s,a,w) are initialized and calculated. Should we build a table of Q-values and update them as in tabular Q-learning, or are they calculated automatically at each NN training epoch? What am I not understanding here? Can somebody explain this equation to me in more detail?
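To make the question concrete, this is how I currently picture a single update. It rests on my guess that Q(s,a,w) and Q(s',a,w) are not stored anywhere and are just the same function evaluated with the current weights. I use a linear approximator instead of a real NN so the gradient is easy to write by hand. The weights start at zero only to keep the numbers easy to follow, and the names `q` and `td_update` and the example transition are hypothetical.

```python
ALPHA, GAMMA = 0.1, 0.9      # learning rate and discount factor, set manually
N_ACTIONS = 2

# One weight vector per action; Q(s, a, w) = w[a] . s
w = [[0.0, 0.0, 0.0] for _ in range(N_ACTIONS)]

def q(state, action, weights):
    """Q(s, a, w): computed on demand from the current weights, not looked up."""
    return sum(wi * xi for wi, xi in zip(weights[action], state))

def td_update(weights, s, a, r, s_next, done=False):
    """One gradient step on the squared TD error for a single transition."""
    q_sa = q(s, a, weights)                                   # Q(s, a, w)
    q_next = 0.0 if done else max(q(s_next, b, weights)       # max over actions of Q(s', ., w)
                                  for b in range(N_ACTIONS))
    td_error = r + GAMMA * q_next - q_sa
    # For a linear Q, the gradient of Q(s, a, w) w.r.t. w[a] is just s
    weights[a] = [wi + ALPHA * td_error * xi for wi, xi in zip(weights[a], s)]
    return td_error

# Made-up transition (s, a, r, s')
s, a, r, s_next = [1.0, 0.0, 1.0], 1, 1.0, [0.0, 1.0, 1.0]
before = q(s, a, w)
err = td_update(w, s, a, r, s_next)
after = q(s, a, w)
print(before, err, after)
```

If this picture is right, then "initializing" the Q-values just means initializing the weights, and both terms in the equation are recomputed by a forward pass every time an update is made. Is that correct?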