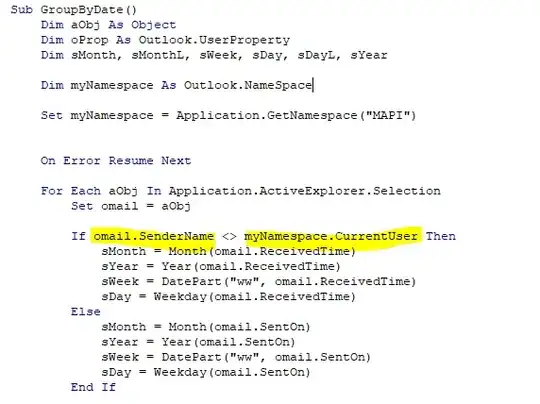

I am trying to fit a curve to a measured step function. I have tried a number of approaches, such as a sigmoid function, a ratio of polynomials, and fitting a Gaussian to the derivative of the step, but none of them gives a good fit. My latest idea is to create a perfect step, convolve it with a Gaussian, and find the best-fit parameters by non-linear regression, but this does not look good either. Below is my code for both the sigmoid and the convolution approaches. First, the sigmoid fit:

Function:

function d=fit_sig(param,x,y)

a=param(1);

b=param(2);

d=(a./(1+exp(-b*x)))-y;

end

main code:

a=1; b=0.09;

p0=[a,b];

sig_coff=lsqnonlin(@fit_sig,p0,[],[],[],xavg_40s1,havg_40s1);

% Plot the measured data and the fitted sigmoid.

sig_new = sig_coff(1)./(1+exp(-sig_coff(2)*xavg_40s1));

d = havg_40s1 - sig_new; % residual between measured data and sigmoid fit

figure;

plot(xavg_40s1,havg_40s1,'.-r',xavg_40s1,sig_new,'.-b');

xlabel('x-pixel'); ylabel('dz/dx (mm/pixel)'); axis square;

This does not work at all. I think my initial guesses are wrong; I tried several values but could not get a good fit. I also tried the Curve Fitting Tool, but that did not work either.
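One possible reason the fit fails regardless of the starting values: the model `a./(1+exp(-b*x))` has its midpoint fixed at x = 0 and its lower asymptote fixed at 0, whereas the posted data steps at around x ≈ 15.2 and starts near -0.04. A minimal sketch of a sigmoid with an added offset and centre is shown here. The names `sig_model`, `p0`, and `p_fit`, and the synthetic stand-in data, are assumptions for illustration and not part of the original code; convergence still depends on the starting guess.

```matlab
% Synthetic stand-in for the measured profile (assumption);
% replace x and y with xavg_40s1 and havg_40s1.
x = 12.64:0.032:17.76;
y = -0.038 + 1.04 ./ (1 + exp(-25*(x - 15.2)));

% Four-parameter logistic: p = [offset, height, slope, centre].
sig_model = @(p, x) p(1) + p(2) ./ (1 + exp(-p(3)*(x - p(4))));

% Initial guesses taken from the data instead of hand-picked numbers.
[~, imax] = max(abs(diff(y)));      % steepest sample marks the step position
p0 = [min(y), max(y) - min(y), 10, x(imax)];

% lsqnonlin minimises the sum of squares of the residual vector.
p_fit = lsqnonlin(@(p) sig_model(p, x) - y, p0);
y_fit = sig_model(p_fit, x);        % fitted curve on the same x grid
```

`y_fit` can then be plotted against the measured data in the same way as `sig_new`.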

Code for creating the perfect step:

h=ones(1,numel(havg_40s1)); %height=1mm

h(1:81)=-0.038;

h(82:end)=1.002; %or 1.0143

figure;

plot(xavg_40s1,havg_40s1,'k.-', 'linewidth',1.5, 'markersize',16);

hold on

plot(xavg_40s1,h,'.-r','linewidth',1.5,'markersize',12);

Code using the convolution approach:

Function:

function d=fit_step(param,h,x,y)

A=param(1);

mu=param(2);

sigma=param(3);

d=conv(h,A*exp(-((x-mu)/sigma).^2),'same')-y;

end

main code:

param1=[0.2247 8.1884 0.0802];

step_coff=lsqnonlin(@fit_step,param1,[],[],[],h,dx_40s1,havg_40s1);

% Plot the measured data and the fitted (convolved) step.

step_new = conv(h,step_coff(1)*exp(-((dx_40s1-step_coff(2))/step_coff(3)).^2),'same');

figure;

plot(xavg_40s1,havg_40s1,'.-r',xavg_40s1,step_new,'.-b');

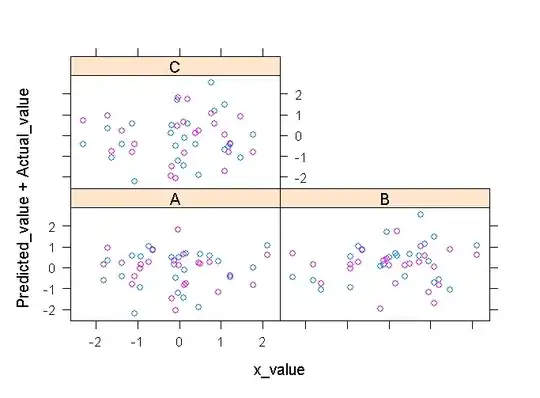

This is close, but the edge of the step is shifted and the corners look sharper than in the measured step.
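For reference, convolving an ideal step with a normalised Gaussian has a closed form, a scaled error function, so a smoothed step can be fitted directly without `conv`. This also avoids the sample shift that `conv(...,'same')` can introduce when the kernel peak is not centred in its array. The sketch below is one way to set this up, not a definitive implementation. The names `step_model`, `p0`, `lb`, `ub`, and `p_fit`, and the synthetic stand-in data, are assumptions for illustration.

```matlab
% Synthetic stand-in for the measured profile (assumption);
% replace x and y with xavg_40s1 and havg_40s1.
x = 12.64:0.032:17.76;
y = -0.038 + 1.04 * 0.5 * (1 + erf((x - 15.2) / (sqrt(2)*0.05)));

% Step convolved with a Gaussian: p = [base, height, x0, sigma].
step_model = @(p, x) p(1) + p(2) * 0.5 * (1 + erf((x - p(3)) ./ (sqrt(2)*p(4))));

% Initial guesses taken from the data.
[~, imax] = max(abs(diff(y)));      % steepest sample marks the edge position
p0 = [min(y), max(y) - min(y), x(imax), 0.1];

% Bounds keep the edge inside the data range and sigma positive.
lb = [-Inf, -Inf, x(1),   1e-6];
ub = [ Inf,  Inf, x(end), Inf];

p_fit = lsqnonlin(@(p) step_model(p, x) - y, p0, lb, ub);
y_fit = step_model(p_fit, x);       % fitted curve on the same x grid
```

Here `p_fit(3)` is the edge position in the units of `x` and `p_fit(4)` is the Gaussian width. The model is symmetric about the edge, so if the two measured corners are not equally rounded, a single `sigma` cannot reproduce both.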

Could someone please suggest the best way to fit a step function, or any way to improve the code?

X data:

12.6400 12.6720 12.7040 12.7360 12.7680 12.8000 12.8320 12.8640 12.8960 12.9280 12.9600 12.9920 13.0240 13.0560 13.0880 13.1200 13.1520 13.1840 13.2160 13.2480 13.2800 13.3120 13.3440 13.3760 13.4080 13.4400 13.4720 13.5040 13.5360 13.5680 13.6000 13.6320 13.6640 13.6960 13.7280 13.7600 13.7920 13.8240 13.8560 13.8880 13.9200 13.9520 13.9840 14.0160 14.0480 14.0800 14.1120 14.1440 14.1760 14.2080 14.2400 14.272 14.3040 14.3360 14.3680 14.4000 14.4320 14.4640 14.4960 14.5280 14.5600 14.5920 14.6240 14.6560 14.6880 14.7200 14.7520 14.7840 14.8160 14.8480 14.8800 14.9120 14.9440 14.9760 15.0080 15.0400 15.0720 15.1040 15.1360 15.1680 15.2000 15.2320 15.2640 15.2960 15.3280 15.3600 15.3920 15.4240 15.4560 15.4880 15.5200 15.5520 15.5840 15.6160 15.6480 15.6800 15.7120 15.7440 15.7760 15.8080 15.8400 15.8720 15.9040 15.9360 15.9680 16.0000 16.0320 16.0640 16.0960 16.1280 16.1600 16.1920 16.2240 16.2560 16.2880 16.3200 16.3520 16.3840 16.4160 16.4480 16.4800 16.5120 16.5440 16.5760 16.6080 16.6400 16.6720 16.7040 16.7360 16.7680 16.8000 16.8320 16.8640 16.8960 16.9280 16.9600 16.9920 17.0240 17.0560 17.0880 17.1200 17.1520 17.1840 17.2160 17.2480 17.2800 17.3120 17.3440 17.3760 17.4080 17.4400 17.4720 17.5040 17.5360 17.5680 17.6000 17.6320 17.6640 17.6960 17.7280 17.7600

Y Data:

-0.0404 -0.0405 -0.0350 -0.0406 -0.0412 -0.0407 -0.0378 -0.0405 -0.0337 -0.0417 -0.0413 -0.0387 -0.0352 -0.0373 -0.0369 -0.0388 -0.0384 -0.0351 -0.0401 -0.0314 -0.0375 -0.0390 -0.0330 -0.0343 -0.0341 -0.0369 -0.0424 -0.0369 -0.0309 -0.0387 -0.0346 -0.0433 -0.0410 -0.0355 -0.0343 -0.0396 -0.0369 -0.0400 -0.0377 -0.0330 -0.0416 -0.0348 -0.0380 -0.0338 -0.0349 -0.0359 -0.0418 -0.0336 -0.0375 -0.0309 -0.0362 -0.0422 -0.0437 -0.0352 -0.0303 -0.0335 -0.0358 -0.0467 -0.0341 -0.0306 -0.0322 -0.0338 -0.0418 -0.0417 -0.0299 -0.0264 -0.0308 -0.0352 -0.0330 -0.0261 -0.0088 -0.0071 0.0013 0.0012 0.0151 0.0352 0.0475 0.0764 0.1423 0.2617 0.4057 0.6241 0.8076 0.8872 0.9248 0.9340 0.9395 0.9514 0.9650 0.9708 0.9875 0.9852 0.9955 0.9971 0.9966 0.9981 0.9983 0.9932 1.0013 1.0011 0.9961 1.0044 0.9994 1.0028 1.0028 0.9996 1.0009 1.0024 1.0027 1.0075 1.0017 1.0001 1.0033 1.0062 1.0071 1.0032 1.0026 1.0027 1.0062 1.0063 0.9981 1.0025 0.9994 1.0075 1.0026 1.0035 1.0018 0.9999 1.0045 1.0067 0.9980 1.0044 0.9976 0.9976 1.0087 1.0026 1.0010 0.9997 1.0025 0.9943 1.0098 0.9964 0.9994 0.9973 0.9997 1.0084 1.0035 0.9974 0.9967 0.9967 1.0013 1.0060 1.0026 0.9960 0.9970 0.9987 1.0054 1.0048 0.9952 0.9937 0.9972

Attached are the images of the measured step and fitted curve.