I have a timeout problem with my site, which is hosted on a Kubernetes cluster provided by DigitalOcean.

    u@macbook$ curl -L fork.example.com
    curl: (7) Failed to connect to fork.example.com port 80: Operation timed out
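The `Operation timed out` part of curl's error 7 usually means the TCP handshake never completed (packets were dropped or went unanswered). That is a different failure from a refused connection, where a host does answer but nothing listens on the port. A minimal Python sketch that classifies a plain TCP connect the same way could be run against the public hostname and against the Service's cluster IP to see where the path breaks. The function name `probe` and the 5-second default timeout are illustrative choices, not part of any tool mentioned in this question.

```python
import socket


def probe(host: str, port: int, timeout: float = 5.0) -> str:
    """Attempt a plain TCP connect (illustrative helper) and classify the outcome.

    Returns:
      "open"       the TCP handshake completed
      "refused"    the host answered with a reset (nothing listening on the port)
      "timeout"    no answer within `timeout` seconds (packets likely dropped)
      "dns-error"  the hostname could not be resolved
      "error: <message>" for any other OS-level failure
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return "open"
    except socket.gaierror:
        return "dns-error"
    except ConnectionRefusedError:
        return "refused"
    except socket.timeout:
        return "timeout"
    except OSError as exc:
        return f"error: {exc}"
```

For example, `probe("fork.example.com", 80)` from the laptop and `probe("10.245.16.96", 80)` from the node; given the symptoms described here, one would expect `"timeout"` for the first and `"open"` for the second.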

I have tried everything listed on the Kubernetes "Debug Services" page. The site is served by a Service named `df-stats-site`:

    u@pod$ nslookup df-stats-site
    Server: 10.245.0.10
    Address: 10.245.0.10#53

    Name: df-stats-site.deepfork.svc.cluster.local
    Address: 10.245.16.96

The lookup gives the same result when run from a node:

    u@node$ nslookup df-stats-site.deepfork.svc.cluster.local 10.245.0.10
    Server: 10.245.0.10
    Address: 10.245.0.10#53

    Name: df-stats-site.deepfork.svc.cluster.local
    Address: 10.245.16.96

Following the "Does the Service work by IP?" section of that page, I ran this command on the node and got the expected response:

    u@node$ curl 10.245.16.96
    (correct response)

This suggests that both DNS and the Service itself are working. I also confirmed that kube-proxy is running:

    u@node$ ps auxw | grep kube-proxy
    root 4194 0.4 0.1 101864 17696 ? Sl Jul04 13:56 /hyperkube proxy --config=...

However, something seems to be wrong with the iptables rules:

u@node$ iptables-save | grep df-stats-site

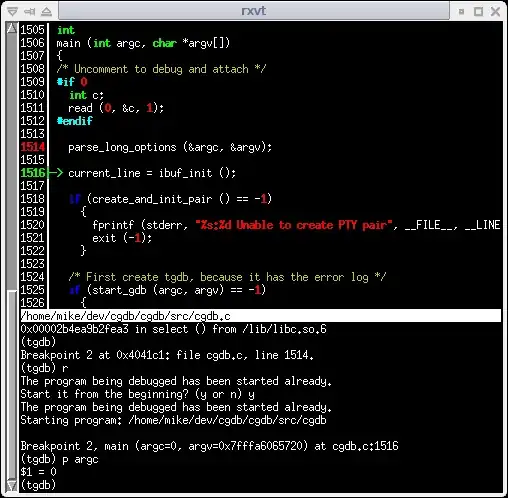

(unfortunately, I was not able to copy the output from node, see the screenshot below)

The Debug Services page recommends restarting kube-proxy with the `-v` flag set to 4 to get more verbose logs, but I don't know how to do that on a DigitalOcean-provided cluster.

This is the configuration I use:

    apiVersion: v1
    kind: Service
    metadata:
      name: df-stats-site
    spec:
      ports:
      - port: 80
        targetPort: 8002
      selector:
        app: df-stats-site
    ---
    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      name: df-stats-site
      annotations:
        kubernetes.io/ingress.class: nginx
        certmanager.k8s.io/cluster-issuer: letsencrypt-prod
    spec:
      tls:
      - hosts:
        - fork.example.com
        secretName: letsencrypt-prod
      rules:
      - host: fork.example.com
        http:
          paths:
          - backend:
              serviceName: df-stats-site
              servicePort: 80
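The Service and the Ingress in this configuration are linked only by name and port: the rule for `fork.example.com` must name the Service `df-stats-site` and one of its `port` values (80 here, not the `targetPort` 8002). A small Python sketch can check that wiring. The manifests are written as plain dicts mirroring the YAML (so no YAML library is needed), and `check_ingress_backends` is a hypothetical helper that only handles numeric `servicePort` values.

```python
def check_ingress_backends(service: dict, ingress: dict) -> list:
    """List problems in how an extensions/v1beta1 Ingress refers to a Service.

    Hypothetical helper; named (string) servicePort values are not resolved.
    """
    problems = []
    svc_name = service["metadata"]["name"]
    svc_ports = {p["port"] for p in service["spec"]["ports"]}
    tls_hosts = {h for t in ingress["spec"].get("tls", []) for h in t.get("hosts", [])}
    for rule in ingress["spec"]["rules"]:
        host = rule.get("host")
        # A host served over HTTPS should appear in one of the tls entries.
        if tls_hosts and host not in tls_hosts:
            problems.append(f"host {host} has no TLS entry")
        for path in rule["http"]["paths"]:
            backend = path["backend"]
            if backend["serviceName"] != svc_name:
                problems.append(f"backend points at {backend['serviceName']}, not {svc_name}")
            elif backend["servicePort"] not in svc_ports:
                # E.g. the Pod's targetPort used here instead of the Service port.
                problems.append(f"servicePort {backend['servicePort']} not exposed by {svc_name}")
    return problems


# Plain-dict copies of the manifests above (normally these would be parsed from YAML).
SERVICE = {
    "metadata": {"name": "df-stats-site"},
    "spec": {"ports": [{"port": 80, "targetPort": 8002}], "selector": {"app": "df-stats-site"}},
}
INGRESS = {
    "metadata": {"name": "df-stats-site"},
    "spec": {
        "tls": [{"hosts": ["fork.example.com"], "secretName": "letsencrypt-prod"}],
        "rules": [{
            "host": "fork.example.com",
            "http": {"paths": [{"backend": {"serviceName": "df-stats-site", "servicePort": 80}}]},
        }],
    },
}
```

With the configuration as posted, `check_ingress_backends(SERVICE, INGRESS)` returns an empty list, so a mismatch between the Ingress and the Service does not appear to explain the timeout.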

I also have an NGINX Ingress Controller, set up with the help of this answer.
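Requests from outside the cluster reach `df-stats-site` only through the ingress controller, so the public DNS record for `fork.example.com` has to point at the address the controller is exposed on. Assuming the controller uses a Service of type `LoadBalancer` (the usual setup on DigitalOcean), that address is the `EXTERNAL-IP` shown by `kubectl get svc` in the controller's namespace. A minimal Python sketch that compares the two follows; `resolves_to` is a hypothetical helper, and the expected IP has to be filled in by hand.

```python
import socket


def resolves_to(hostname: str, expected_ip: str) -> tuple:
    """Resolve `hostname` to IPv4 addresses and check whether `expected_ip` is among them.

    Hypothetical helper. Returns (match, sorted list of resolved addresses).
    """
    infos = socket.getaddrinfo(hostname, None, socket.AF_INET, socket.SOCK_STREAM)
    addresses = sorted({info[4][0] for info in infos})
    return expected_ip in addresses, addresses
```

If `resolves_to("fork.example.com", "<EXTERNAL-IP>")` reports `False`, the DNS record no longer matches the load balancer (for example, if the load balancer was recreated with a new address); if it reports `True`, the load balancer and the controller pods are the next things to check.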

I should note that this setup worked fine before, and I'm not sure what changed. Restarting the cluster might help, but I don't know how to do that without removing all the resources.