As noticed in the original paper Atomic Decomposition by Basis

Pursuit about Basis Pursuit (BP), the BP can be equivalently reformulated as linear programming (LP) problem, and LP has developed very efficient solvers.

In this se.math site there is a discussion and a formula for standard form of LP problem given BP problem. Recall that in BP, you are looking for x with shape (x_dim, ) such that A@x=y and x is minimal regards to L1 norm. Thus, you need just a script which takes array A with shape (y_dim, x_dim), y with shape (y_dim, ) and returns standard form.

Here is the function which takes BP problem and returns optimal x using standard form for LP (I have written it in such way so that it fits to the terminology used in that tutorial). The script uses scipy.optimize.linprog as a backend for LP.

import numpy as np

from scipy.optimize import linprog

def get_optimal_x_for_bp(A, y):

x_dim, y_dim = A.shape[1], y.shape[0]

eye = np.eye(x_dim)

obj = np.concatenate([np.zeros(x_dim), np.ones(x_dim)])

lhs_ineq = np.concatenate([np.concatenate([eye, -eye], axis=1), np.concatenate([-eye, -eye], axis=1)], axis=0)

rhs_ineq = np.zeros(2 * x_dim)

lhs_eq = np.concatenate([A, np.zeros((y_dim, x_dim))], axis=1)

rhs_eq = y

bnd = [*((None, None) for _ in range(x_dim)), *((0, None) for _ in range(x_dim))]

res = linprog(c=obj, A_ub=lhs_ineq, b_ub=rhs_ineq, A_eq=lhs_eq, b_eq=rhs_eq, bounds=bnd, method="revised simplex")

return res.x[:x_dim]

You can check that it works properly on the example 2x_1-x_2=2:

if __name__ == '__main__':

A = np.array([[2, -1]])

y = np.array([2])

x = get_optimal_x_for_bp(A, y)

print(x)

Output: [1. 0.]

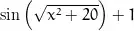

The output says that x_1=1, x_2=0 is the optimal x. It is true as you can see from the following image:

You can also apply it to some more complex problems:

if __name__ == '__main__':

x_dim, y_dim = 6, 4

A = np.random.rand(y_dim, x_dim)

y = np.random.rand(y_dim)

x = get_optimal_x_for_bp(A, y)

print(x)

Output: [ 0. 1.55953519 0.50597071 0. 0.1724968 -1.86814744]

Just keep in mind, that as it is written in the original paper about BP, the system A@x=y should be overcomplete.