Can we resize an image from 64x64 to 256x256 without affecting the resolution? Is there a way to add zeros in the new rows and columns of the resized output? I'm working with VGG and I get an error when feeding it my 64x64 input image, because VGGFace is a pretrained model with an input size of 224.
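
To make the zero-padding idea concrete, here is a minimal sketch of what "adding zeros in the new rows and columns" could look like with NumPy. It assumes the image is already an H x W x C array and that the target is 224x224 (the input size mentioned above); `zero_pad_to` is a hypothetical helper name, not part of Keras or VGGFace.

```python
import numpy as np

def zero_pad_to(img, target_h=224, target_w=224):
    """Center an H x W x C image on a zero-filled target_h x target_w canvas."""
    h, w = img.shape[:2]
    if h > target_h or w > target_w:
        raise ValueError("image is larger than the target size")
    top = (target_h - h) // 2
    left = (target_w - w) // 2
    # Pad rows and columns only; leave the channel axis untouched
    pad = ((top, target_h - h - top), (left, target_w - w - left), (0, 0))
    return np.pad(img, pad, mode="constant", constant_values=0)

# Random stand-in for a 64x64 RGB image (not real data)
small = np.random.randint(0, 256, size=(64, 64, 3), dtype=np.uint8)
padded = zero_pad_to(small)
print(padded.shape)  # (224, 224, 3)
```

The original pixels are kept unchanged in the middle of the canvas, so nothing is interpolated. How well a pretrained network handles an input that is mostly black border is a separate question this sketch does not address.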

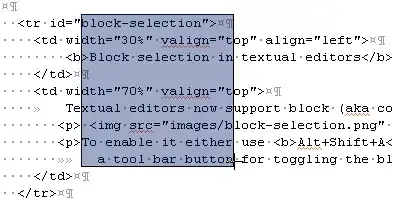

code:

from keras.models import Model, Sequential

from keras.layers import Input, Convolution2D, ZeroPadding2D, MaxPooling2D, Flatten, Dense, Dropout, Activation

from PIL import Image

import numpy as np

from keras.preprocessing.image import load_img, save_img, img_to_array

from keras.applications.imagenet_utils import preprocess_input

from keras.preprocessing import image

import matplotlib

matplotlib.use('TkAgg')

import matplotlib.pyplot as plt

# from sup5 import X_test, Y_test

from sklearn.metrics import roc_curve, auc

from keras.applications.vgg16 import VGG16

from keras.applications.vgg16 import preprocess_input

model = VGG16(weights='imagenet', include_top=False)

from keras.models import model_from_json

vgg_face_descriptor = Model(inputs=model.layers[0].input, outputs=model.layers[-2].output)

# import pandas as pd

# test_x_predictions = deep.predict(X_test)

# mse = np.mean(np.power(X_test - test_x_predictions, 2), axis=1)

# error_df = pd.DataFrame({'Reconstruction_error': mse,

# 'True_class': Y_test})

# error_df.describe()

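def preprocess_image(image_path):
    # Load the image and resize it to 224x224
    img = load_img(image_path, target_size=(224, 224))
    img = img_to_array(img)
    # Add a batch dimension: (224, 224, 3) -> (1, 224, 224, 3)
    img = np.expand_dims(img, axis=0)
    img = preprocess_input(img)
    return img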

def findCosineSimilarity(source_representation, test_representation):
    # Dot product of the two representations (assumes 1-D vectors)
    a = np.matmul(np.transpose(source_representation), test_representation)
    # Squared L2 norm of each representation
    b = np.sum(np.multiply(source_representation, source_representation))
    c = np.sum(np.multiply(test_representation, test_representation))
    # Cosine distance: 1 - cosine similarity
    return 1 - (a / (np.sqrt(b) * np.sqrt(c)))

def findEuclideanDistance(source_representation, test_representation):
    # Element-wise difference, then the square root of the sum of squares
    euclidean_distance = source_representation - test_representation
    euclidean_distance = np.sum(np.multiply(euclidean_distance, euclidean_distance))
    euclidean_distance = np.sqrt(euclidean_distance)
    return euclidean_distance

# for encod epsilon = 0.004

epsilon = 0.16

# epsilon = 0.095

retFalse,ret_val, euclidean_distance = verifyFace(str(i)+"test.jpg", str(j)+"train.jpg", epsilon)

verifyFace1(str(i) + "testencod.jpg", str(j) + "trainencod.jpg")

Error: ValueError: operands could not be broadcast together with remapped shapes [original->remapped]: (512,14,14)->(512,newaxis,newaxis) (14,14,512)->(14,newaxis,newaxis) and requested shape (14,512)
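
The shapes in the message, (512,14,14) and (14,14,512), look like a 14x14x512 feature map and its transpose. That is what `np.matmul(np.transpose(...), ...)` in `findCosineSimilarity` would receive if the descriptor returns a convolutional feature map rather than a 1-D vector. This is an assumption, since `verifyFace` is not shown. A minimal sketch under that assumption, using random arrays as stand-ins for the model output and a hypothetical `cosine_distance` helper that flattens its inputs first:

```python
import numpy as np

def cosine_distance(source_representation, test_representation):
    # Flatten each feature map to 1-D so the dot product is well defined
    a = np.ravel(source_representation)
    b = np.ravel(test_representation)
    return 1.0 - np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b))

rng = np.random.default_rng(0)
# Stand-ins for two (14, 14, 512) feature maps; random values, not real model output
feat_a = rng.random((14, 14, 512))
feat_b = rng.random((14, 14, 512))

# The unflattened call mirrors findCosineSimilarity and fails on 3-D input
try:
    np.matmul(np.transpose(feat_a), feat_b)
    raised = False
except ValueError:
    raised = True
print("matmul on 3-D maps raised ValueError:", raised)

# With flattening, the distance of a representation to itself is close to 0
print(cosine_distance(feat_a, feat_a))
print(cosine_distance(feat_a, feat_b))
```

If this is indeed the cause, the error would come from the shape of the representations rather than from the 64x64 input size, since `preprocess_image` already resizes to 224x224.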