I am trying to remove duplicates from a MongoDB collection, but all the solutions I have found fail. Given the current JSON structure:

    {
        "_id": { "$oid": "5cee31bbca8a185b76a692db" },
        "date": { "$date": "2018-10-07T19:11:38.000Z" },
        "id": "1049014405130858496",
        "username": "chrisoldcorn",
        "text": "“The #UK can rest now. The Orange Buffoon is back in his xenophobic #WhiteHouse!” #news #politics #trump #populist #uspoli #ukpolitics #ukpoli #london #scotland #TrumpBaby #usa #america #canada #eu #europe #brexit #maga #msm #gop #elections #election2018 https://medium.com/@chrisoldcorn/trump-babys-uk-visit-a-reflection-1c2aa4ad942 …pic.twitter.com/Y6Yihs9g6K",
        "retweets": 1,
        "favorites": 0,
        "mentions": "@chrisoldcorn",
        "hashtags": "#UK #WhiteHouse #news #politics #trump #populist #uspoli #ukpolitics #ukpoli #london #scotland #TrumpBaby #usa #america #canada #eu #europe #brexit #maga #msm #gop #elections #election2018",
        "geo": "",
        "replies": 0,
        "to": null,
        "lan": "en"
    }

I need to remove all duplicates based on field "id" in the file.
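
To make the goal concrete, here is a minimal sketch of the behaviour I am after, written for the mongo shell. It assumes the collection is called `tweets` and that it does not matter which copy of a duplicated tweet survives (this version keeps the first one returned per group). The names `duplicatePipeline`, `idsToDelete` and `removeDuplicates` are made up for illustration, and I have not verified this against my full data set:

```javascript
// Group documents by the tweet "id" field, collect the _id of every
// document in each group, and keep only groups with more than one document.
const duplicatePipeline = [
  { $group: { _id: "$id", dups: { $push: "$_id" }, count: { $sum: 1 } } },
  { $match: { count: { $gt: 1 } } }
];

// For one group produced by the pipeline, return the _id values to delete.
// The first _id in the group is kept; every later one counts as a duplicate.
function idsToDelete(group) {
  return group.dups.slice(1);
}

// Hypothetical helper: delete all but one document per duplicated "id".
// `collection` is expected to behave like a shell collection (e.g. db.tweets).
function removeDuplicates(collection) {
  let removed = 0;
  collection
    .aggregate(duplicatePipeline, { allowDiskUse: true })
    .forEach(group => {
      const result = collection.deleteMany({ _id: { $in: idsToDelete(group) } });
      removed += result.deletedCount;
    });
  return removed; // number of duplicate documents deleted
}

// Intended usage in the shell (assumption: the collection is db.tweets):
//   removeDuplicates(db.tweets);
//   db.tweets.createIndex({ id: 1 }, { unique: true });
```

The idea is that once no two documents share an `id`, a plain unique index on `id` could be created to stop new duplicates from being inserted.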

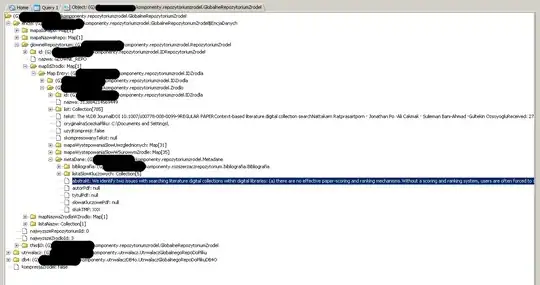

I have tried `db.tweets.ensureIndex({ id: 1 }, { unique: true, dropDups: true })`, but I am not sure this is the correct way. I obtain this output:

Can anyone help me?