I want to extract the text on Labels from the images. The images are coloured and are in a real-life environment. PFA images. Sample Image

I have tried multiple solutions:

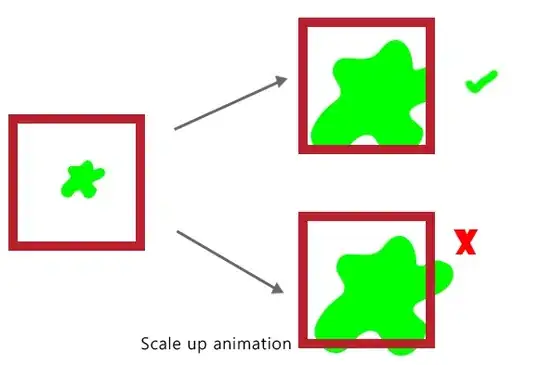

- I'm able to read text from flat images using Tesseract but it's not working if the text is at a certain angle.

- Tried a lot of image pre-processing converting it to Binary and grayscale but not able to extract the required text.

- Since the above step failed I was not able to de-skew the text either.

image = cv2.imread("p18-73.png",0)

thresh = cv2.adaptiveThreshold(image,255, cv2.ADAPTIVE_THRESH_GAUSSIAN_C, cv2.THRESH_BINARY, 11,2)

coords = np.column_stack(np.where(thresh > 0))

angle = cv2.minAreaRect(coords)[-1]

The above pre-processing code is not working. Can you please tell me what is the best way to approach this image?