Let's assume we have 10 nodes, each of which has 2 cores. We set our `defaultParallelism` to 2 * 10 = 20, expecting each node to be assigned exactly 2 partitions when we call `sc.parallelize(1 to 20)`. For some reason, this assumption turns out to be wrong in some cases: depending on some conditions, Spark sometimes places more than 2 partitions on a single node and skips one or more nodes altogether. This causes serious skew, and repartitioning doesn't help, because we have no control over how partitions are placed onto physical nodes.
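For reference, here is a minimal pure-Scala sketch of how `sc.parallelize(1 to 20)` with 20 slices divides the data. It assumes the index arithmetic matches the `positions` helper in Spark's `ParallelCollectionRDD`; `ParallelizeSlices` and `slice` are hypothetical names, and this is not Spark's own code.

```scala
// Sketch only: assumes the slicing arithmetic mirrors the `positions` helper
// in Spark's ParallelCollectionRDD. ParallelizeSlices is a hypothetical name.
object ParallelizeSlices {
  // Start (inclusive) and end (exclusive) offsets of each slice
  // for a collection of `length` elements split into `numSlices` parts.
  def positions(length: Long, numSlices: Int): Seq[(Int, Int)] =
    (0 until numSlices).map { i =>
      val start = ((i * length) / numSlices).toInt
      val end = (((i + 1) * length) / numSlices).toInt
      (start, end)
    }

  // Split a sequence into `numSlices` contiguous slices using the offsets above.
  def slice[T](seq: Seq[T], numSlices: Int): Seq[Seq[T]] = {
    require(numSlices >= 1, "numSlices must be positive")
    positions(seq.length.toLong, numSlices).map { case (start, end) =>
      seq.slice(start, end)
    }
  }
}
```

If the sketch matches Spark's behavior, 20 elements over 20 slices gives exactly one element per partition, so the partitions themselves are balanced and any skew comes from where the tasks are scheduled.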

- Why can this happen?

- How can we make sure each node is assigned exactly 2 partitions?
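One way to check whether a run met the "exactly 2 per node" goal is to count partitions per host. The sketch below is plain Scala and assumes the `(partitionIndex, host)` pairs have already been gathered, for example with `rdd.mapPartitionsWithIndex((i, _) => Iterator((i, java.net.InetAddress.getLocalHost.getHostName))).collect()`. `PlacementCheck`, `countsPerHost`, `isBalanced` and the host names are hypothetical.

```scala
// Hypothetical helper for checking partition placement.
// Input: (partitionIndex, host) pairs collected from the cluster.
object PlacementCheck {
  // Number of partitions that ran on each host.
  def countsPerHost(placements: Seq[(Int, String)]): Map[String, Int] =
    placements.groupBy(_._2).map { case (host, ps) => host -> ps.size }

  // True if every expected host ran exactly `perNode` partitions
  // and no unexpected host appears in the placements.
  def isBalanced(placements: Seq[(Int, String)], hosts: Seq[String], perNode: Int): Boolean = {
    val counts = countsPerHost(placements)
    // A host that ran nothing is absent from `counts`, so default it to 0.
    hosts.forall(h => counts.getOrElse(h, 0) == perNode) &&
      counts.keySet.subsetOf(hosts.toSet)
  }
}
```

For example, with hosts `node-1` to `node-10`, a placement where `node-1` runs 4 partitions and `node-2` runs none is reported as unbalanced.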

Also, for what it's worth, `spark.locality.wait` is set to `999999999s`.
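Assuming these settings are applied through `spark-defaults.conf` (the question does not say how they are set), they would look roughly like this; `spark.default.parallelism` is the property that backs `sc.defaultParallelism`.

```
spark.default.parallelism  20
spark.locality.wait        999999999s
```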

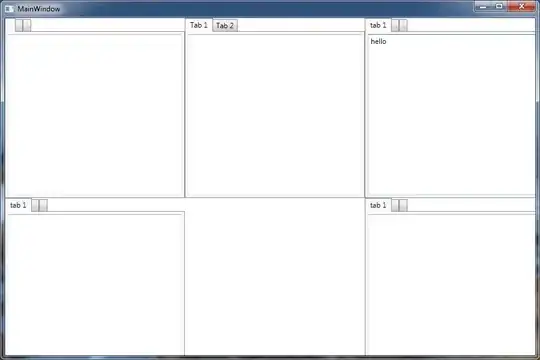

The DAG in which this happens is shown below. While the `parallelize` in stage 0 assigns partitions evenly, the `parallelize` in stage 1 does not. It is always like this. Why?

Linking a related question.