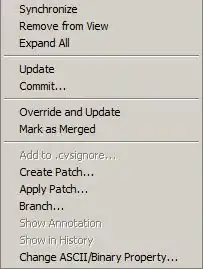

To implement some post-processing effects, I want to render the scene into a texture instead of rendering it directly to the screen. For testing purposes, I draw this texture onto a simple quad that covers the whole screen. But the image quality is disappointing:

Render scene to screen vs. render scene to texture

I really don't know why this is happening. The canvas size matches the drawing buffer size, and whenever the screen is resized I create a completely new texture, framebuffer, and renderbuffer. Is my framebuffer setup incorrect, or is this a WebGL limitation? I'm using Google Chrome and WebGL2.
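One way to narrow down whether this is a setup problem or a difference between the two render targets is to query both of them. The sketch below is a hypothetical diagnostic helper (`inspectRenderTargets` is not part of any library); it assumes `gl` is a `WebGL2RenderingContext` and `fb` is the framebuffer created in `resize()`. `gl.getParameter(gl.SAMPLES)` reports the sample count of the currently bound draw framebuffer, so a texture-backed framebuffer reports `0`, while a canvas created with `antialias: true` (the default) usually reports a value greater than zero.

```javascript
// Hypothetical diagnostic helper: compares the default (canvas) framebuffer
// with an offscreen framebuffer. Assumes `gl` is a WebGL2RenderingContext.
function inspectRenderTargets(gl, fb) {
  // May be null if the context has been lost.
  const attrs = gl.getContextAttributes();

  // Sample count of the default framebuffer (the canvas drawing buffer).
  gl.bindFramebuffer(gl.FRAMEBUFFER, null);
  const canvasSamples = gl.getParameter(gl.SAMPLES);

  // Completeness and sample count of the offscreen framebuffer.
  gl.bindFramebuffer(gl.FRAMEBUFFER, fb);
  const status = gl.checkFramebufferStatus(gl.FRAMEBUFFER);
  const framebufferSamples = gl.getParameter(gl.SAMPLES);

  // Restore the default framebuffer binding.
  gl.bindFramebuffer(gl.FRAMEBUFFER, null);

  return {
    canvasAntialias: attrs !== null && attrs.antialias === true,
    canvasSamples,
    framebufferComplete: status === gl.FRAMEBUFFER_COMPLETE,
    framebufferSamples,
  };
}
```

If `framebufferComplete` is `true` but `canvasSamples` is greater than `framebufferSamples`, the framebuffer setup itself is probably fine, and the lower quality may simply come from the texture not being multisampled.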

```javascript
function resize() {
  // Release the previous render target before creating a new one
  if (tex) { tex.delete(); }
  if (fb) { gl.deleteFramebuffer(fb); }
  if (rb) { gl.deleteRenderbuffer(rb); }

  fb = gl.createFramebuffer();
  gl.bindFramebuffer(gl.FRAMEBUFFER, fb);

  // Color attachment: a texture with the same size as the drawing buffer
  tex = new Texture2D(null, {
    internalFormat: gl.RGB,
    width: gl.drawingBufferWidth,
    height: gl.drawingBufferHeight,
    minFilter: gl.LINEAR,
    magFilter: gl.LINEAR
  });
  gl.framebufferTexture2D(gl.FRAMEBUFFER, gl.COLOR_ATTACHMENT0, gl.TEXTURE_2D, tex.id, 0);

  // Depth/stencil attachment: a renderbuffer of the same size
  rb = gl.createRenderbuffer();
  gl.bindRenderbuffer(gl.RENDERBUFFER, rb);
  gl.renderbufferStorage(gl.RENDERBUFFER, gl.DEPTH24_STENCIL8, gl.drawingBufferWidth, gl.drawingBufferHeight);
  gl.framebufferRenderbuffer(gl.FRAMEBUFFER, gl.DEPTH_STENCIL_ATTACHMENT, gl.RENDERBUFFER, rb);

  gl.bindFramebuffer(gl.FRAMEBUFFER, null);
}
```
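If the difference turns out to be antialiasing, a common WebGL2 approach is to render into a multisampled renderbuffer and then resolve it into a regular texture. The sketch below shows one possible resize-time setup for that, under these assumptions: `createMsaaTarget` is a hypothetical helper, `gl` is a `WebGL2RenderingContext`, and it uses raw WebGL calls instead of the `Texture2D` wrapper. The texture uses `RGBA8` rather than `gl.RGB` because WebGL2 (following OpenGL ES 3.0) requires the read and draw color formats to be identical when blitting from a multisampled buffer.

```javascript
// Hypothetical helper: builds a multisampled framebuffer to render into and a
// single-sample, texture-backed framebuffer to resolve into.
// Assumes `gl` is a WebGL2RenderingContext.
function createMsaaTarget(gl, width, height, requestedSamples = 4) {
  // Clamp the sample count to what the implementation supports.
  const samples = Math.min(requestedSamples, gl.getParameter(gl.MAX_SAMPLES));

  // Multisampled color and depth/stencil renderbuffers.
  const colorRb = gl.createRenderbuffer();
  gl.bindRenderbuffer(gl.RENDERBUFFER, colorRb);
  gl.renderbufferStorageMultisample(gl.RENDERBUFFER, samples, gl.RGBA8, width, height);

  const depthRb = gl.createRenderbuffer();
  gl.bindRenderbuffer(gl.RENDERBUFFER, depthRb);
  gl.renderbufferStorageMultisample(gl.RENDERBUFFER, samples, gl.DEPTH24_STENCIL8, width, height);

  const msaaFb = gl.createFramebuffer();
  gl.bindFramebuffer(gl.FRAMEBUFFER, msaaFb);
  gl.framebufferRenderbuffer(gl.FRAMEBUFFER, gl.COLOR_ATTACHMENT0, gl.RENDERBUFFER, colorRb);
  gl.framebufferRenderbuffer(gl.FRAMEBUFFER, gl.DEPTH_STENCIL_ATTACHMENT, gl.RENDERBUFFER, depthRb);
  const msaaOk = gl.checkFramebufferStatus(gl.FRAMEBUFFER) === gl.FRAMEBUFFER_COMPLETE;

  // Single-sample texture with the same color format (RGBA8); this is what
  // the full-screen quad samples later.
  const tex = gl.createTexture();
  gl.bindTexture(gl.TEXTURE_2D, tex);
  gl.texStorage2D(gl.TEXTURE_2D, 1, gl.RGBA8, width, height);
  gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MIN_FILTER, gl.LINEAR);
  gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MAG_FILTER, gl.LINEAR);
  gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_WRAP_S, gl.CLAMP_TO_EDGE);
  gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_WRAP_T, gl.CLAMP_TO_EDGE);

  const resolveFb = gl.createFramebuffer();
  gl.bindFramebuffer(gl.FRAMEBUFFER, resolveFb);
  gl.framebufferTexture2D(gl.FRAMEBUFFER, gl.COLOR_ATTACHMENT0, gl.TEXTURE_2D, tex, 0);
  const resolveOk = gl.checkFramebufferStatus(gl.FRAMEBUFFER) === gl.FRAMEBUFFER_COMPLETE;

  gl.bindFramebuffer(gl.FRAMEBUFFER, null);

  if (!msaaOk || !resolveOk) {
    throw new Error('Render target is incomplete');
  }
  return { samples, msaaFb, colorRb, depthRb, resolveFb, tex };
}
```

On resize, the previously created renderbuffers, framebuffers, and texture would need to be deleted first, the same way `resize()` deletes `tex`, `fb`, and `rb`.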

```javascript
run() {
  gl.resize();
  gl.addEventListener('resize', resize);
  resize();

  gl.blendFuncSeparate(gl.SRC_ALPHA, gl.ONE_MINUS_SRC_ALPHA, gl.ONE, gl.ONE);
  // Set the clear color before the first clear so it takes effect
  gl.clearColor(0.0, 0.0, 0.0, 1.0);
  gl.clear(gl.COLOR_BUFFER_BIT | gl.DEPTH_BUFFER_BIT);
  gl.enable(gl.DEPTH_TEST);
  gl.enable(gl.CULL_FACE);
  gl.viewport(0, 0, gl.drawingBufferWidth, gl.drawingBufferHeight);

  gl.loop(() => {
    if (!ctx.scene || !ctx.car || !ctx.wheels) return;

    // Pass 1: render the scene into the offscreen framebuffer
    gl.bindFramebuffer(gl.FRAMEBUFFER, fb);
    gl.clear(gl.COLOR_BUFFER_BIT | gl.DEPTH_BUFFER_BIT);
    renderScene();

    // Pass 2: draw the texture onto a full-screen quad on the canvas
    gl.bindFramebuffer(gl.FRAMEBUFFER, null);
    gl.clear(gl.COLOR_BUFFER_BIT | gl.DEPTH_BUFFER_BIT);
    screenShader.use();
    screen.bind();
    screenShader.screenMap = tex.active(0);
    gl.drawElements(gl.TRIANGLES, screen.length, gl.UNSIGNED_SHORT, 0);
  });
}
```
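With a multisampled render target, the render loop would draw the scene into the multisampled framebuffer and then resolve it into a single-sample, texture-backed framebuffer before the full-screen pass. A minimal sketch of that resolve step follows; `resolveToTexture` is a hypothetical name, and it assumes `msaaFb` and `resolveFb` are framebuffers of identical size and color format (a multisample resolve with `blitFramebuffer` requires both).

```javascript
// Hypothetical helper: resolves a multisampled framebuffer into a
// single-sample framebuffer of the same size and color format.
// Assumes `gl` is a WebGL2RenderingContext.
function resolveToTexture(gl, msaaFb, resolveFb, width, height) {
  gl.bindFramebuffer(gl.READ_FRAMEBUFFER, msaaFb);
  gl.bindFramebuffer(gl.DRAW_FRAMEBUFFER, resolveFb);

  // A multisample resolve must use identical source and destination
  // rectangles; only the color buffer is needed for the screen pass.
  gl.blitFramebuffer(
    0, 0, width, height,
    0, 0, width, height,
    gl.COLOR_BUFFER_BIT, gl.NEAREST
  );

  // Return to the default framebuffer for the full-screen quad.
  gl.bindFramebuffer(gl.READ_FRAMEBUFFER, null);
  gl.bindFramebuffer(gl.DRAW_FRAMEBUFFER, null);
}
```

In the loop above, `msaaFb` would be bound instead of `fb` before `renderScene()`, this call would follow it, and the quad would then sample the texture attached to `resolveFb` instead of `tex`.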