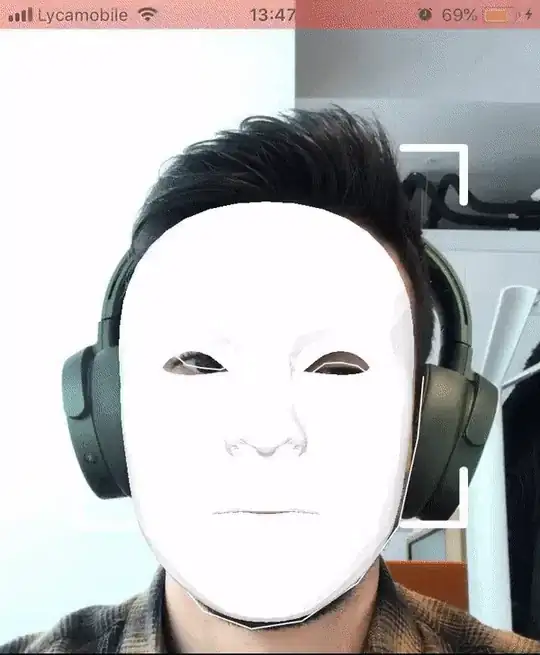

With the iPhone X's TrueDepth camera it's possible to get the 3D coordinates of any object and use that information to position and scale the object, but on older iPhones we don't have access to AR on the front-facing camera. What I've done so far is detect the face using Apple's Vision framework and draw some 2D paths around the face or its landmarks.

I've made a SceneView and applied it as the front layer of my view with a clear background; beneath it is an AVCaptureVideoPreviewLayer. After the face is detected, my 3D object appears on the screen, but positioning and scaling it correctly according to the face's boundingBox requires unprojecting and other steps, and that is where I got stuck. I've also tried converting the 2D bounding box to 3D using CATransform3D, but that failed.

Is what I want to achieve even possible? If I'm not mistaken, Snapchat was doing this before ARKit was available on the iPhone.
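
To make the "position and scale from a 2D bounding box" idea concrete, here is a minimal sketch of the usual pinhole-camera approximation: if the real width of a face is assumed to be roughly known, its width in pixels gives an approximate depth, and that depth lets a pixel be back-projected into camera space. Everything here is an assumption for illustration: `PinholeCamera` and its methods are hypothetical names (not part of Vision or SceneKit), and the 60° field of view and 0.15 m face width are placeholder values.

```swift
import Foundation

/// Hypothetical pinhole-camera helper (not a Vision or SceneKit type).
struct PinholeCamera {
    let focalLengthPixels: Double  // focal length expressed in pixels
    let imageWidth: Double         // image width in pixels
    let imageHeight: Double        // image height in pixels

    /// Focal length in pixels from a horizontal field of view.
    static func focalLength(horizontalFOVDegrees fov: Double, imageWidth: Double) -> Double {
        let halfFOV = fov * Double.pi / 360.0  // half the angle, in radians
        return (imageWidth / 2.0) / tan(halfFOV)
    }

    /// Similar triangles: depth = f * realWidth / pixelWidth.
    func depth(forPixelWidth pixelWidth: Double, realWidth: Double) -> Double {
        precondition(pixelWidth > 0, "pixel width must be positive")
        return focalLengthPixels * realWidth / pixelWidth
    }

    /// Back-projects pixel (u, v) at the given depth into camera space
    /// (x right, y up, camera looking down the negative z axis).
    func cameraPoint(u: Double, v: Double, depth: Double) -> (x: Double, y: Double, z: Double) {
        let cx = imageWidth / 2.0
        let cy = imageHeight / 2.0
        let x = (u - cx) * depth / focalLengthPixels
        let y = (cy - v) * depth / focalLengthPixels  // pixel y grows downward
        return (x, y, -depth)
    }
}

// Example with assumed values: 720x1280 image, 60 degree horizontal FOV,
// a face 200 px wide that is assumed to be 0.15 m wide in reality.
let focal = PinholeCamera.focalLength(horizontalFOVDegrees: 60, imageWidth: 720)
let camera = PinholeCamera(focalLengthPixels: focal, imageWidth: 720, imageHeight: 1280)
let faceDepth = camera.depth(forPixelWidth: 200, realWidth: 0.15)
let facePosition = camera.cameraPoint(u: 460, v: 500, depth: faceDepth)
print(faceDepth, facePosition)
```

This only yields an approximate position; it says nothing about head rotation, and the result is only as good as the assumed face width and field of view.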

    override func viewDidLoad() {
        super.viewDidLoad()
        self.view.addSubview(self.sceneView)
        self.sceneView.frame = self.view.bounds
        self.sceneView.backgroundColor = .clear
        self.node = self.scene.rootNode.childNode(withName: "face", recursively: true)!
    }

    fileprivate func updateFaceView(for result: VNFaceObservation, twoDFace: Face2D) {
        let box = convert(rect: result.boundingBox)
        defer {
            DispatchQueue.main.async {
                self.faceView.setNeedsDisplay()
            }
        }
        faceView.boundingBox = box
        self.sceneView.scene?.rootNode.addChildNode(self.node)
        let unprojectedBox = SCNVector3(box.origin.x, box.origin.y, 0.8)
        let worldPoint = sceneView.unprojectPoint(unprojectedBox)
        self.node.position = worldPoint
        /* Here I have to do the unprojecting to convert the value
           from a 2D point to a 3D point; this is also where the issue is. */
    }
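
The `convert(rect:)` helper is not shown above. For context, Vision reports `boundingBox` in normalized coordinates (0 to 1) with the origin at the lower-left corner, so it has to be scaled and flipped vertically before it matches UIKit view coordinates. A minimal sketch of such a conversion follows; `viewRect(fromNormalized:in:mirrored:)` is a hypothetical name, and it assumes the preview fills the view exactly (no aspect-fill cropping). Whether horizontal mirroring is needed depends on how the front-camera frames and orientation are handed to Vision, so it is left as a parameter.

```swift
import Foundation

/// Hypothetical stand-in for a `convert(rect:)`-style helper.
/// Maps a Vision-style normalized rect (origin at lower-left) into
/// view coordinates (origin at top-left).
/// Assumes the video preview fills the view with no cropping.
func viewRect(fromNormalized box: CGRect, in viewSize: CGSize, mirrored: Bool = false) -> CGRect {
    // Optionally flip horizontally for a mirrored front-camera preview.
    let normalizedX = mirrored ? (1 - box.maxX) : box.minX
    // Flip vertically: the top edge in view space is 1 - maxY.
    let normalizedY = 1 - box.maxY
    return CGRect(x: normalizedX * viewSize.width,
                  y: normalizedY * viewSize.height,
                  width: box.width * viewSize.width,
                  height: box.height * viewSize.height)
}

// Example with assumed values: a face covering the middle of a 400x800 view.
let sampleBox = CGRect(x: 0.25, y: 0.5, width: 0.5, height: 0.25)
let sampleRect = viewRect(fromNormalized: sampleBox, in: CGSize(width: 400, height: 800))
// The rect's center, rather than its origin, is usually the point to anchor a node on.
let sampleCenter = CGPoint(x: sampleRect.midX, y: sampleRect.midY)
print(sampleRect, sampleCenter)
```

Two details in the snippet above may be worth checking against this. First, `box.origin` is a corner of the rectangle, so a node placed there will not be centered on the face. Second, the z component passed to `unprojectPoint` is a normalized depth between the near and far clipping planes, not a distance in scene units; one common pattern is to call `projectPoint` on a point at the desired depth and reuse its z value.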