I would detach the processing part from your shiny app, to keep it responsive (R is single threaded).

Here is an example which continuously writes to a file in a background R process created via library(callr). You can then read in the current state of the file via reactiveFileReader.

Edit: if you want to start the file processing session-wise just place the r_bg() call inside the server function (see my comment). Furthermore, the processing currently is done row-wise. In your actual code you should consider processing the data batch-wise instead (n rows, what ever is reasonable for your code)

library(shiny)

library(callr)

processFile <- function(){

filename <- "output.txt"

if(!file.exists(filename)){

file.create(filename)

}

for(i in 1:24){

d = runif(1)

Sys.sleep(.5)

write.table(d, file = filename, append = TRUE, row.names = FALSE, col.names = FALSE)

}

return(NULL)

}

# start background R session ----------------------------------------------

rx <- r_bg(processFile)

# create shiny app --------------------------------------------------------

ui <- fluidPage(

titlePanel("reactiveFileReader"),

sidebarLayout(

sidebarPanel(

),

mainPanel(

uiOutput("table")

)

)

)

server <- function(input, output, session){

# rx <- r_bg(processFile) # if you want to start the file processing session-wise

readOutput <- function(file){

if(file.exists(file)){

tableData <- tryCatch({read.table(file)}, error=function(e){e})

if (inherits(tableData, 'error')){

tableData = NULL

} else {

tableData

}

} else {

tableData = NULL

}

}

rv <- reactiveFileReader(intervalMillis = 100, session, filePath = "output.txt", readFunc = readOutput)

output$table = renderTable({

rv()

})

session$onSessionEnded(function() {

file.remove("output.txt")

})

}

shinyApp(ui, server)

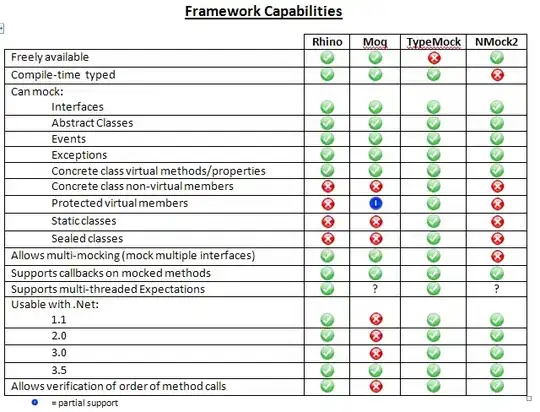

As an alternative approach I'd recommend library(ipc) which lets you set up continuous communication between R processes. Also check my answer here on async progressbars.

Result using library(callr):

Result using library(promises): (code by @antoine-sac) - blocked shiny session

Edit: Here is another approach utilizing library(ipc)

This avoids using reactiveFileReader and therefore no file handling is required in the code:

library(shiny)

library(ipc)

library(future)

library(data.table)

plan(multiprocess)

ui <- fluidPage(

titlePanel("Inter-Process Communication"),

sidebarLayout(

sidebarPanel(

textOutput("random_out"),

p(),

actionButton('run', 'Start processing')

),

mainPanel(

tableOutput("result")

)

)

)

server <- function(input, output) {

queue <- shinyQueue()

queue$consumer$start(100)

result_row <- reactiveVal()

observeEvent(input$run,{

future({

for(i in 1:10){

Sys.sleep(1)

result <- data.table(t(runif(10, 1, 10)))

queue$producer$fireAssignReactive("result_row", result)

}

})

NULL

})

resultDT <- reactiveVal(value = data.table(NULL))

observeEvent(result_row(), {

resultDT(rbindlist(list(resultDT(), result_row())))

})

random <- reactive({

invalidateLater(200)

runif(1)

})

output$random_out <- renderText({

paste("Something running in parallel", random())

})

output$result <- renderTable({

req(resultDT())

})

}

shinyApp(ui = ui, server = server)

To clean up the discussion I've had with @antoine-sac for future readers:

On my machine using his code I was indeed experiencing a direct interconnection between the long running code (sleep time) and the blocked UI:

However, the reason for this was not that forking is more expensive depending on the OS or using docker as @antoine-sac stated - the problem was a lack of available workers. As stated in ?multiprocess:

workers: A positive numeric scalar or a function specifying the

maximum number of parallel futures that can be active at the same time

before blocking.

The default is determined via availableCores() - although on a windows machine plan(multiprocess) defaults to multisession evaluation.

Accordingly the discussion was triggered by a lack of configuration and different defaults used due to the underlying hardware.

Here is the code to reproduce the gif (based on @antoine-sac's first contribution):

library(shiny)

library(future)

library(promises)

plan(multiprocess)

# plan(multiprocess(workers = 10))

ui <- fluidPage(

titlePanel("title"),

sidebarLayout(

sidebarPanel(

p(textOutput("random")),

p(numericInput("sleep", "Sleep time", value = 5)),

p((actionButton(inputId = "button", label = "make table"))),

htmlOutput("info")

),

mainPanel(

uiOutput("table")

)

)

)

makeTable <- function(nrow, input){

filename <- tempfile()

file.create(filename)

for (i in 1:nrow) {

future({

# expensive operation here

Sys.sleep(isolate(input$sleep))

matrix(c(i, runif(10)), nrow = 1)

}) %...>%

as.data.frame() %...>%

readr::write_csv(path = filename, append = TRUE)

}

reactiveFileReader(intervalMillis = 100, session = NULL,

filePath = filename,

readFunc = readr::read_csv, col_names = FALSE)

}

server <- function(input, output, session){

timingInfo <- reactiveVal()

output$info <- renderUI({ timingInfo() })

output$random <- renderText({

invalidateLater(100)

paste("Something running in parallel: ", runif(1))

})

table_reader <- eventReactive(input$button, {

start <- Sys.time()

result <- makeTable(10, input)

end <- Sys.time()

duration <- end-start

duration_sleep_diff <- duration-input$sleep

timingInfo(p("start:", start, br(), "end:", end, br(), "duration:", duration, br(), "duration - sleep", duration_sleep_diff))

return(result)

})

output$table = renderTable(table_reader()()) # nested reactives, double ()

}

shinyApp(ui, server)