I'm trying to fetch data from Cloudera's QuickStart Hadoop distribution (running as a Linux VM in our case) into our SAP HANA database using SAP Spark Controller. Every time I trigger the job from HANA, it gets stuck, and the following warning is logged continuously, every 10 to 15 seconds, in Spark Controller's log file until I kill the job:

`WARN org.apache.spark.scheduler.cluster.YarnScheduler: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient resources`

Although it is logged as a warning, it appears to be what prevents the job from executing on Cloudera. From what I have read, the cause is either resource management on Cloudera or blocked ports. In our case no ports are blocked, so it must be the former.
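One way to double-check the "no blocked ports" assumption is a plain TCP connect test, run from the machine hosting Spark Controller towards the cluster (and, in the other direction, from the Cloudera VM towards the Spark Controller host, since executors have to reach the driver). The sketch below only uses Python's standard `socket` module; the host name and ports in the usage note are assumptions, not values taken from this setup.

```python
import socket


def port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout.

    DNS failures, refused connections and timeouts all raise OSError
    (or a subclass of it), so any of them is reported as False.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

For example, `port_open("quickstart.cloudera", 8032)` checks the YARN ResourceManager RPC port and `port_open("quickstart.cloudera", 8088)` its web UI. Both are stock YARN defaults, and `quickstart.cloudera` is a hypothetical host name here; substitute whatever the cluster is actually configured with. A successful connect only shows the port is reachable, not that the service behind it is healthy.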

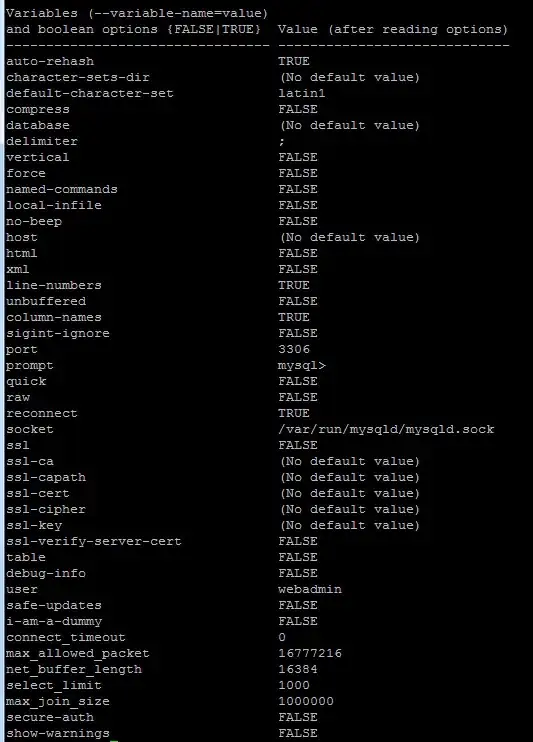

Our Cloudera cluster is a single node with 16 GB of RAM and 4 CPU cores.
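On a single node, what matters is not the VM's 16 GB but how much of it YARN is allowed to hand out (`yarn.nodemanager.resource.memory-mb` and `yarn.nodemanager.resource.cpu-vcores`) compared with what Spark asks for. The sketch below is a rough back-of-the-envelope check under stated assumptions: yarn-client mode (an ApplicationMaster sized by `spark.yarn.am.memory` plus the executors), Spark's default YARN memory overhead of max(384 MB, 10% of the heap), and containers rounded up to a scheduler allocation increment. The class, function names and increment/maximum defaults are hypothetical and only illustrate the arithmetic.

```python
import math
from dataclasses import dataclass


@dataclass
class YarnNode:
    # What YARN may allocate on this node (not the VM's physical RAM/cores).
    memory_mb: int
    vcores: int
    # Assumed values: allocation increment and per-container maximum.
    increment_mb: int = 512
    max_allocation_mb: int = 8192


def container_mb(heap_mb: int, increment_mb: int) -> int:
    """Heap plus default overhead (max(384 MB, 10% of heap)),
    rounded up to a multiple of the allocation increment."""
    overhead_mb = max(384, int(heap_mb * 0.10))
    return math.ceil((heap_mb + overhead_mb) / increment_mb) * increment_mb


def fits(node: YarnNode, am_mb: int, executor_mb: int,
         executor_cores: int, executors: int) -> bool:
    """Rough check that the AM (1 vcore) plus all executors fit on the node."""
    am = container_mb(am_mb, node.increment_mb)
    exe = container_mb(executor_mb, node.increment_mb)
    # A single container larger than the scheduler maximum is never granted.
    if max(am, exe) > node.max_allocation_mb:
        return False
    memory_needed = am + executors * exe
    vcores_needed = 1 + executors * executor_cores
    return memory_needed <= node.memory_mb and vcores_needed <= node.vcores
```

With a hypothetical NodeManager limit of 4096 MB and 4 vcores, a 512 MB AM becomes a 1024 MB container, so one executor with a 4096 MB heap (a 4608 MB container) cannot be scheduled, while two executors with 1024 MB heaps (1536 MB containers each) fit exactly. A request that never fits would leave the job waiting for resources.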

The overall configuration shows a number of warnings, but I can't tell whether any of them are relevant to this issue.

Here is also how the RAM is distributed on Cloudera:

It would be great if you could help me pinpoint the cause of this issue; I have been trying various combinations of settings over the past few days without any success.

Thanks, Dimitar