Disclaimer: This is just a suggestion on how you may approach this problem. There might be better alternatives.

I think it might be helpful to take into account the relationship between the time elapsed since posting and the final score. The following curve, from "[OC] Upvotes over time for a reddit post", models how the score (the total upvote count) evolves over time:

The curve relies on the observation that, once a post is online, you expect a roughly linear increase in upvotes that slowly converges to (stabilizes around) a maximum, after which the slope is gentle/flat.
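To get a feel for this shape, here is a minimal sketch of the saturating term that appears in func in the code further down, a * exp(1 - b / t**d). The helper name saturating_score, the sample times, and the value a = 10 are illustrative assumptions and not fitted values.

```python
import numpy as np

def saturating_score(t, a, b=1.0, d=1.0):
    """Evaluate a * exp(1 - b / t**d); for d > 0 it grows with t and levels off at a * e."""
    t = np.asarray(t, dtype=float)
    return a * np.exp(1.0 - b / t**d)

# Elapsed time since posting, in minutes (arbitrary sample points)
minutes = np.array([1, 5, 15, 60, 240, 1440])
values = saturating_score(minutes, a=10.0)  # a = 10 is an arbitrary illustrative value

for t, s in zip(minutes, values):
    print("t = %5d min -> score term = %6.2f" % (int(t), float(s)))

# Upper bound the curve approaches as t grows
plateau = 10.0 * np.e
print("plateau = %.2f" % plateau)
```

The values increase quickly at first and then flatten out just below the plateau, which is the behavior described above.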

Moreover, we know that the number of votes/comments usually increases over time. The relationship between these elements can be treated as a series; I chose to model it as a geometric progression (you can use an arithmetic one if you find it fits better). Also, keep in mind that you are counting some users twice: some users both commented and upvoted, and some comment multiple times but can upvote only once. I chose to assume that only 70% of the commenters are unique (in code p = 0.7) and that users who both commented and upvoted represent 60% of the total number of users (commenters and upvoters), hence e = 1 - 0.6 = 0.4 in the code. The result of these assumptions:
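Written out, the two components can be read off func in the code further down. This is a reconstruction from that code, not the original figure: $t$ is the prediction time (60 in the code), $v$ the initial vote count, $c$ the initial comment count, and $a$, $d$, $q$ the fitted parameters, with $b = 1$, $e = 0.4$ and $p = 0.7$ fixed.

$$
S_1 = a \, \exp\!\left(1 - \frac{b}{t^{d}}\right), \qquad
S_2 = q^{t} \, e \, (v + p \, c), \qquad
\text{score} = \frac{S_1 + S_2}{2}
$$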

So we have two equations to model the score, which you can combine by taking their average. In code, this would look like this:

    import warnings

    import numpy as np
    import matplotlib.pyplot as plt
    from scipy.optimize import curve_fit
    from mpl_toolkits.mplot3d import axes3d  # registers the 3D projection on older matplotlib

    # filter warnings
    warnings.filterwarnings("ignore")


    class Cfit:
        def __init__(self, votes, comments, scores, fit_size):
            self.votes = votes
            self.comments = comments
            self.scores = scores
            self.time = 60  # prediction time
            self.fit_size = fit_size  # number of samples used for the fit
            self.popt = []

        def func(self, x, a, d, q):
            e = 0.4  # 1 - 0.6: correction for users who both commented and upvoted
            b = 1
            p = 0.7  # assumed share of unique commenters
            # average of the saturating curve and the geometric-progression term
            return (a * np.exp(1 - (b / self.time**d))
                    + q**self.time * e * (x + p * self.comments[:len(x)])) / 2

        def fit_then_predict(self):
            popt, pcov = curve_fit(self.func, self.votes[:self.fit_size], self.scores[:self.fit_size])
            self.popt = popt
            return popt, pcov


    # init
    init_votes = np.array([3, 1, 2, 1, 0])
    init_comments = np.array([0, 3, 0, 1, 64])
    final_scores = np.array([26, 12, 13, 14, 229])

    # fit and predict (all available samples are used for the fit)
    cfit = Cfit(init_votes, init_comments, final_scores, len(final_scores))
    popt, pcov = cfit.fit_then_predict()
    predicted = cfit.func(init_votes, *popt)
    fit_label = 'fit: a=%5.3f, d=%5.3f, q=%5.3f' % tuple(popt)

    # plot expectations
    fig = plt.figure(figsize=(15, 15))
    ax1 = fig.add_subplot(2, 3, (1, 3), projection='3d')
    ax1.scatter(init_votes, init_comments, final_scores, c='g', marker='o', label='expected')
    ax1.scatter(init_votes, init_comments, predicted, c='r', marker='o', label='predicted')

    # axis
    ax1.set_xlabel('init votes count')
    ax1.set_ylabel('init comments count')
    ax1.set_zlabel('final score')
    ax1.set_title('final score = f(init votes count, init comments count)')
    ax1.legend()

    # evaluation: diff = |expected - predicted|
    diff = abs(final_scores - predicted)
    ax2 = fig.add_subplot(2, 3, 4)
    ax2.plot(init_votes, diff, 'ro', label=fit_label)
    ax2.grid(True)
    ax2.set_xlabel('init votes count')
    ax2.set_ylabel('|expected-predicted|')
    ax2.set_title('|expected-predicted| = f(init votes count)')
    ax2.legend()

    # plot expected and predictions as f(init-votes)
    ax3 = fig.add_subplot(2, 3, 5)
    ax3.plot(init_votes, final_scores, 'gx', label='expected')
    ax3.plot(init_votes, predicted, 'rx', label='predicted')
    ax3.set_xlabel('init votes count')
    ax3.set_ylabel('final score')
    ax3.set_title('final score = f(init votes count)')
    ax3.grid(True)
    ax3.legend()

    # plot expected and predictions as f(init-comments)
    ax4 = fig.add_subplot(2, 3, 6)
    ax4.plot(init_comments, final_scores, 'gx', label='expected')
    ax4.plot(init_comments, predicted, 'rx', label='predicted')
    ax4.set_xlabel('init comments count')
    ax4.set_ylabel('final score')
    ax4.set_title('final score = f(init comments count)')
    ax4.grid(True)
    ax4.legend()

    plt.show()

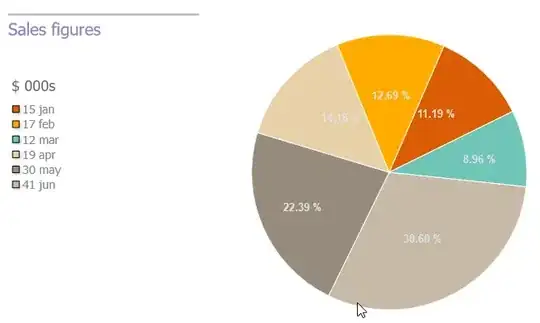

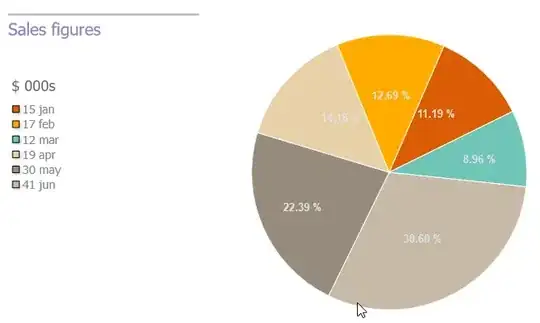

The output of the previous code is the following:

The provided dataset is obviously too small to evaluate any approach, so it is up to you to test this further.

The main idea here is that you assume your data follows a certain function/behavior (described in func) but give it some degrees of freedom (your parameters a, d, q); curve_fit then searches for the combination of these parameters that best maps your input data to your output data. Once you have the parameters returned by curve_fit (popt in the code), you simply evaluate your function with them, for example (add this section at the end of the previous code):

    # a function similar to func that predicts the score for given values
    def score(votes_count, comments_count, popt):
        e, b, p = 0.4, 1, 0.7
        a, d, q = popt[0], popt[1], popt[2]
        t = 60
        return (a * np.exp(1 - (b / t**d)) + q**t * e * (votes_count + p * comments_count)) / 2

    print("score for init-votes = 2 & init-comments = 0 is ", score(2, 0, popt))

Output:

    score for init-votes = 2 & init-comments = 0 is 14.000150386210994

You can see that this output is close to the correct value of 13 (the third sample, with 2 initial votes and 0 initial comments), and hopefully with more data you will get more accurate estimates of your parameters and consequently better "predictions".
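If the fit-then-predict workflow is easier to follow in isolation, here is a minimal sketch of it on synthetic data. The model saturating, the "true" parameters, and the sample points are all made up for illustration and have nothing to do with the Reddit data; the only point is to show curve_fit recovering parameters and the fitted function being reused for a new input.

```python
import numpy as np
from scipy.optimize import curve_fit

def saturating(x, a, k):
    # Hypothetical model: rises quickly, then levels off at a
    return a * (1.0 - np.exp(-k * x))

# Synthetic, noise-free data generated from known parameters (illustration only)
true_a, true_k = 200.0, 0.05
x = np.linspace(1, 120, 40)
y = saturating(x, true_a, true_k)

# p0 is the starting guess handed to the optimizer
popt, pcov = curve_fit(saturating, x, y, p0=[100.0, 0.1])
fitted_a, fitted_k = popt
print("fitted a = %.3f, k = %.4f" % (fitted_a, fitted_k))

# Reuse the fitted parameters to predict for an input outside the fitted range
prediction = saturating(150.0, *popt)
print("prediction at x = 150: %.2f" % prediction)
```

On real, noisy data the recovered parameters will not match any "true" values exactly, and the diagonal of pcov gives a rough idea of how uncertain each estimate is.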