I am tracking a car in a video and trying to determine how many meters it traveled.

I picked 7 points at random from a video frame and made point 1 my origin.

Then, in the corresponding Google Maps view, I calculated the offsets of the other 6 points from the origin (delta x and delta y).

Then I ran the following:

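    import cv2
    import numpy as np

    # Pixel coordinates of the 7 reference points in the video frame
    pts_src = np.array([[417, 285], [457, 794], [1383, 786], [1557, 423], [1132, 296], [759, 270], [694, 324]], dtype='float32')

    # Offsets of the same points from the origin (point 1), in meters
    pts_dst = np.array([[0, 0], [-3, -31], [30, -27], [34, 8], [17, 15], [8, 7], [6, 1]], dtype='float32')

    # Estimate the homography that maps image pixels to meters
    h, status = cv2.findHomography(pts_src, pts_dst)

    # perspectiveTransform expects a float array of shape (N, 1, 2)
    a = np.array([[1032, 268]], dtype='float32')
    a = np.array([a])

    # finally, get the mapping
    pointsOut = cv2.perspectiveTransform(a, h)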

When I tested the mapping of point 7, the result was wrong.

Am I missing anything? Or am I using the wrong method? Thank you

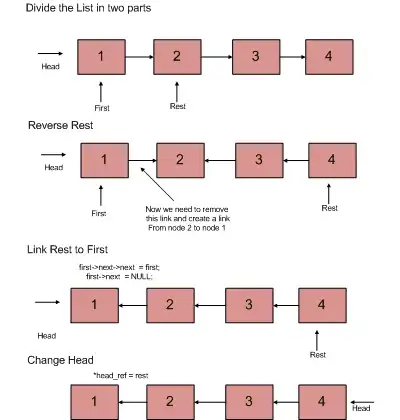

Here is the image from the video

I have marked the points and here is the mapping

The x, y columns are the pixel coordinates in the image. The meter columns are the offsets from the origin to each point, in meters. Using Google Maps, I converted each point's geographic coordinates to UTM and took the x and y differences.
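As a cross-check on the UTM differences, the offsets can also be estimated directly from latitude and longitude with a local flat-earth (equirectangular) approximation. This is a different technique from the UTM conversion described above, not a replacement for it. Over a few tens of meters the two should roughly agree. The function name `local_offset_m` and the coordinates below are hypothetical and only for illustration.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius, an approximation


def local_offset_m(origin, point):
    """Return (dx, dy) in meters from origin to point.

    Both arguments are (latitude, longitude) in degrees. Uses an
    equirectangular approximation, which is only reasonable over
    short distances.
    """
    lat0, lon0 = (math.radians(v) for v in origin)
    lat1, lon1 = (math.radians(v) for v in point)
    dx = EARTH_RADIUS_M * (lon1 - lon0) * math.cos(lat0)  # east-west offset
    dy = EARTH_RADIUS_M * (lat1 - lat0)                   # north-south offset
    return dx, dy


# Hypothetical coordinates, not taken from the actual scene
origin = (40.0000, -75.0000)
point = (40.0003, -74.9996)
dx, dy = local_offset_m(origin, point)
print(f"dx = {dx:.1f} m, dy = {dy:.1f} m")
```

If these offsets differ noticeably from the UTM differences for the same points, the error is more likely in the measured coordinates than in the homography step.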

When I input the 7th point, I got [[[14.682752 9.927497]]] as output, which is quite far off along the x axis.

Any idea if I am doing anything wrong?