Recently, I upgraded Airflow from 1.9 to 1.10.3 (the latest one).

However, I noticed a performance issue related to SubDag concurrency: only one task inside a SubDag gets picked up at a time. That is not how it should behave, because our concurrency setting for the SubDag is 8.

See the following:

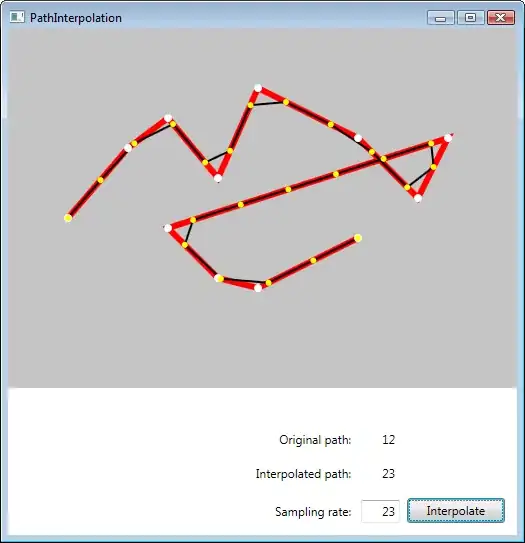

`get_monthly_summary-214` and `get_monthly_summary-215` are the two SubDags; they can run in parallel, as controlled by the parent DAG's concurrency.

But when we zoom into one of the SubDags, say `get_monthly_summary-214`:

You can clearly see that only one task is running at a time while the others are queued, and it keeps running this way. When we check the SubDag concurrency, it is indeed 8, as we specified in the code:
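
To make the expected behaviour concrete, here is a toy simulation (not Airflow code) of a SubDag whose tasks are all ready at the same time. The function `simulate_subdag` is hypothetical, and it assumes every task takes the same number of ticks and that the SubDag's `concurrency` is the only limit (no pool, executor or worker limits).

```python
from collections import deque
from typing import List


def simulate_subdag(num_tasks: int, concurrency: int, task_duration: int = 1) -> List[int]:
    """Return how many tasks are running at each tick (toy model, not Airflow).

    Assumptions: all tasks are ready at the start, each takes `task_duration`
    ticks, and the SubDag's `concurrency` is the only constraint.
    """
    queued = deque(range(num_tasks))
    running: List[int] = []  # remaining ticks for each running task
    history: List[int] = []
    while queued or running:
        # Start queued tasks until the concurrency cap is reached.
        while queued and len(running) < concurrency:
            queued.popleft()
            running.append(task_duration)
        history.append(len(running))
        # Advance one tick and drop tasks that have just finished.
        running = [r - 1 for r in running if r > 1]
    return history
```

With a cap of 8, `simulate_subdag(10, 8)` gives `[8, 2]` (two ticks), whereas what we see in the UI looks like `simulate_subdag(10, 1)`: ten ticks with one task each.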

We did set up the pool, and it has 32 slots. We have 8 Celery workers to pick up the queued tasks, and our Airflow configuration related to concurrency is as follows:

    # The amount of parallelism as a setting to the executor. This defines
    # the max number of task instances that should run simultaneously
    # on this airflow installation
    parallelism = 32

    # The number of task instances allowed to run concurrently by the scheduler
    dag_concurrency = 16

    # The app name that will be used by celery
    celery_app_name = airflow.executors.celery_executor

    # The concurrency that will be used when starting workers with the
    # "airflow worker" command. This defines the number of task instances that
    # a worker will take, so size up your workers based on the resources on
    # your worker box and the nature of your tasks
    worker_concurrency = 16
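
As a rough sanity check of these numbers, the sketch below parses the settings quoted above with `configparser` and takes the smallest of the limits. The `[core]`/`[celery]` section placement follows the stock Airflow 1.10 `airflow.cfg` layout; the helper `expected_subdag_parallelism` is hypothetical, and treating the effective limit as a simple minimum (assuming the inner tasks use the 32-slot pool) is a simplification that ignores executor- and queue-specific behaviour.

```python
import configparser
from typing import Optional

# Settings quoted above, placed in the sections used by a stock 1.10 airflow.cfg.
CFG = """
[core]
parallelism = 32
dag_concurrency = 16

[celery]
celery_app_name = airflow.executors.celery_executor
worker_concurrency = 16
"""


def expected_subdag_parallelism(
    cfg_text: str,
    num_workers: int,
    pool_slots: int,
    subdag_concurrency: Optional[int] = None,
) -> int:
    """Upper bound on tasks one SubDag could run at once (simplified model)."""
    cfg = configparser.ConfigParser()
    cfg.read_string(cfg_text)
    parallelism = cfg.getint("core", "parallelism")
    worker_slots = num_workers * cfg.getint("celery", "worker_concurrency")
    # dag_concurrency is only the default for DAGs that don't set `concurrency`.
    if subdag_concurrency is None:
        subdag_concurrency = cfg.getint("core", "dag_concurrency")
    return min(parallelism, worker_slots, pool_slots, subdag_concurrency)
```

For our setup, `expected_subdag_parallelism(CFG, num_workers=8, pool_slots=32, subdag_concurrency=8)` returns `min(32, 128, 32, 8) = 8`, so none of these limits should restrict a SubDag to a single running task.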

Also, all the SubDag operators are assigned to a queue called `mini`, while all their inner tasks use the default queue, `default`, because we previously ran into deadlock problems when both the SubDag operators and their inner tasks ran on the same queue. I also tried using the `default` queue for all tasks and operators, but it did not help.
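
The deadlock mentioned above can be illustrated with a toy model: a running SubDagOperator keeps its worker slot busy until its inner tasks finish, so if it shares a queue with those inner tasks, it can consume every slot they need. The function `free_slots_for_inner_tasks` and all slot counts below are hypothetical, chosen only for illustration.

```python
from typing import Dict


def free_slots_for_inner_tasks(
    slots_per_queue: Dict[str, int],
    running_subdag_ops: int,
    subdag_queue: str,
    inner_queue: str,
) -> int:
    """Worker slots left for SubDag inner tasks (toy model, not Airflow).

    Assumption: each running SubDagOperator holds one slot on `subdag_queue`
    for as long as its inner tasks are still pending or running.
    """
    free = dict(slots_per_queue)
    free[subdag_queue] -= min(running_subdag_ops, free[subdag_queue])
    return free[inner_queue]
```

With a shared 4-slot queue and 4 running SubDag operators, `free_slots_for_inner_tasks({"default": 4}, 4, "default", "default")` returns 0 (nothing can progress), while separating the queues, e.g. `{"mini": 4, "default": 16}` with operators on `mini`, leaves all 16 `default` slots for inner tasks.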

With the old version, 1.9, each SubDag could execute multiple tasks in parallel. Did we miss anything?