What is modern best practice for multi-configuration builds (with Jenkins)?

I want to support multiple branches and multiple configurations.

For example, for each version of the software (V1, V2) I want builds targeting platforms P1 and P2.

We have managed to set up multi-branch declarative pipelines. Each build runs in its own Docker container, so it's easy to support multiple platforms.

    pipeline {
        agent none
        stages {
            stage('Build, test and deploy for P1') {
                agent {
                    dockerfile {
                        filename 'src/main/docker/Jenkins-P1.Dockerfile'
                    }
                }
                steps {
                    sh 'buildit...' // build command elided
                }
            }
            stage('Build, test and deploy for P2') {
                agent {
                    dockerfile {
                        filename 'src/main/docker/Jenkins-P2.Dockerfile'
                    }
                }
                steps {
                    sh 'buildit...' // build command elided
                }
            }
        }
    }

This gives one job covering multiple platforms, but there is no separate red/blue status for each platform. There is a good argument that this does not matter, as you should not release unless the build works for all platforms.

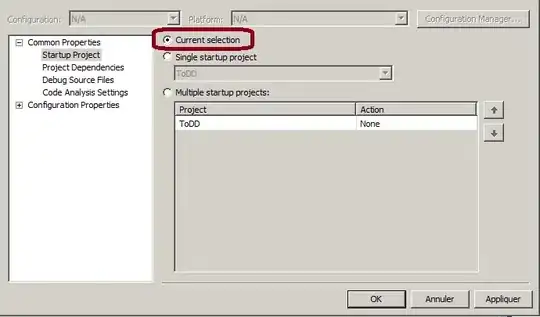

However, I would like a separate status indicator for each configuration. This suggests I should use a multi-configuration build which triggers a parameterised build for each configuration, as below (and in the linked question):

    pipeline {
        parameters {
            choice(name: 'Platform', choices: ['P1', 'P2'], description: 'Target OS platform')
        }
        agent {
            dockerfile {
                // placeholder: somehow derive the Dockerfile path from the Platform parameter
                filename someMagicToGetDockerfilePathFromPlatform()
            }
        }
        stages {
            stage('Build, test and deploy for P1') {
                steps {
                    sh 'buildit...' // build command elided
                }
            }
        }
    }
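For illustration, the `someMagicToGetDockerfilePathFromPlatform()` placeholder above could perhaps be replaced by string interpolation on `params.Platform`. This is only a sketch: it assumes the Dockerfiles keep the `Jenkins-<Platform>.Dockerfile` naming from the first pipeline, and that the `filename` option accepts an interpolated string in the same way `label` does. The `buildit...` command is still elided.

```groovy
pipeline {
    parameters {
        choice(name: 'Platform', choices: ['P1', 'P2'], description: 'Target OS platform')
    }
    agent {
        dockerfile {
            // Assumption: one Dockerfile per platform, named after the parameter value
            filename "src/main/docker/Jenkins-${params.Platform}.Dockerfile"
        }
    }
    stages {
        stage('Build, test and deploy') {
            steps {
                // Print the chosen platform so each run is identifiable in the log
                echo "Building for ${params.Platform}"
                sh 'buildit...' // build command elided
            }
        }
    }
}
```

This only resolves the Dockerfile path. It does not by itself give one status indicator per platform, since each run still builds a single platform chosen at trigger time.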

There are several problems with this:

- A declarative pipeline has more constraints on how it is scripted.

- Multi-configuration builds cannot trigger declarative pipelines (even with the Parameterized Trigger plugin I get "project is not buildable").

This also raises the question: what use are parameters in declarative pipelines?

Is there a strategy that gives the best of both worlds, i.e.:

- pipeline as code

- separate status indicators

- limited repetition?