The method provided in your link does work from my testing, (I am using LAG instead of LEAD for your tasks though). Here I am not looking to replicate that method, just another option for you by using JavaScript to build the logic which you might also extend to other applications:

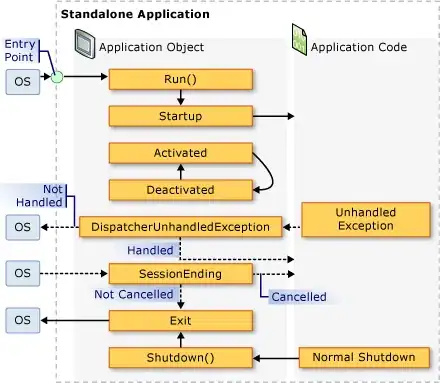

In the testing below (tested on PDI-8.0), the transformation has 5 steps, see below

- Data Grid step to create testing data with three fields:

date, account number and amount

- Sort rows to sort the rows based on

account number and date. this is required for Analytic Query step, if your source data are already sorted, then skip this step

- Analytic Query step, see below, create two more fields:

prev_date and prev_amount

Modified Java Script Value step, add the following code, nothing else is needed to configure in this step:

var days_diff = dateDiff(prev_date, date, "d")

if (days_diff > 0) {

/* retrieve index for two fields: 'date', 'amount'

* and modify their values accordingly

*/

var idx_date = getInputRowMeta().indexOfValue("date")

var idx_amount = getInputRowMeta().indexOfValue("amount")

/* amount to increment by each row */

var delta_amount = (amount - prev_amount)/days_diff

for (var i = 1; i < days_diff; i++) {

newRow = createRowCopy(getOutputRowMeta().size());

newRow[idx_date] = dateAdd(prev_date, "d", i);

newRow[idx_amount] = prev_amount + delta_amount * i;

putRow(newRow);

}

}

Select values step to remove unwanted fields, i.e.: prev_date, prev_amount

Run the transformation, you will have the following shown under the Preview data tab of Modified Java Script Value step:

UPDATE:

Per your comments, you can do the following, assume you have a new field account_type:

in Analytic Query step, add a new field prev_account_type, similar to two other prev_ fields, just from different Subject: account_type

in Modified Java Script Value step, you need to retrieve the Row index for account_type and modify the logic to compute delta_amount, so when prev_account_type is not the same as the current account_type, the delta_amount is ZERO, see below code:

var days_diff = dateDiff(prev_date, date, "d")

if (days_diff > 0) {

/* retrieve index for three fields: 'date', 'amount', 'account_type' */

var idx_date = getInputRowMeta().indexOfValue("date")

var idx_amount = getInputRowMeta().indexOfValue("amount")

var idx_act_type = getInputRowMeta().indexOfValue("account_type")

/* amount to increment by each row */

var delta_amount = prev_account_type.equals(account_type) ? (amount - prev_amount)/days_diff : 0;

/* copy the current Row into newRow and modify fields accordingly */

for (var i = 1; i < days_diff; i++) {

newRow = createRowCopy(getOutputRowMeta().size());

newRow[idx_date] = dateAdd(prev_date, "d", i);

newRow[idx_amount] = prev_amount + delta_amount * i;

newRow[idx_act_type] = prev_account_type;

putRow(newRow);

}

}

Note: invoking Javascript interpreter does have some performance hit, so if that matters to you, stick to the method in the link you provided.