I'm trying to build a decision tree, and found the following code online.

My question is:

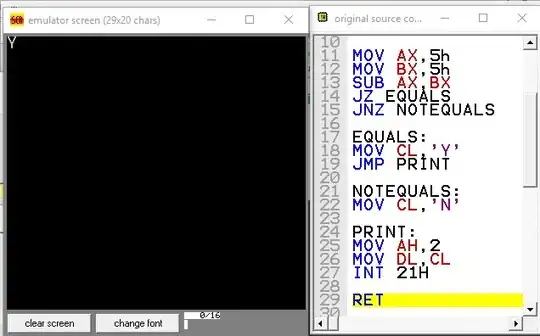

What does `clf.score(X_train, Y_train)` evaluate for a decision tree? The output is in the following screenshot; what does that value represent?

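    from sklearn.tree import DecisionTreeClassifier

    # Fit a tree limited to depth 3 and print its score on each split
    clf = DecisionTreeClassifier(max_depth=3).fit(X_train, Y_train)
    print("Training:" + str(clf.score(X_train, Y_train)))
    print("Test:" + str(clf.score(X_test, Y_test)))
    pred = clf.predict(X_train)

Output: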
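To make the question reproducible without my data, here is a minimal self-contained sketch. The synthetic dataset from `make_classification` and the `random_state` values are assumptions standing in for my house-price data, not what I actually ran. From what I can tell in the scikit-learn docs, `score` on a classifier returns the mean accuracy, so the sketch compares it against `accuracy_score` computed from the predictions.

```python
from sklearn.datasets import make_classification
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic binary data (an assumption; it only stands in for the real dataset).
X, y = make_classification(n_samples=500, n_features=8, random_state=0)
X_train, X_test, Y_train, Y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

clf = DecisionTreeClassifier(max_depth=3, random_state=0).fit(X_train, Y_train)

# Values reported by the classifier's own score method.
train_score = clf.score(X_train, Y_train)
test_score = clf.score(X_test, Y_test)

# Fraction of correct predictions, computed separately for comparison.
train_acc = accuracy_score(Y_train, clf.predict(X_train))
test_acc = accuracy_score(Y_test, clf.predict(X_test))

print("Training:", train_score, "accuracy_score:", train_acc)
print("Test:", test_score, "accuracy_score:", test_acc)
```

If the two numbers on each line match, then the Training value would be the fraction of training rows classified correctly, and the Test value the same fraction on held-out rows.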

In the following code, I think it calculates several scores for the model. The higher I set max_depth, the higher the scores get, which makes sense to me. However, what is the difference between these numbers and the Training and Test values in the previous screenshot?

- My goal is to predict whether a house price is over 20k or not. Which score should I consider when choosing the best-fitting yet simplest model?
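To show what I mean by comparing depths, here is a rough sketch of one way I could do it. I'm not sure it is the right approach. It assumes synthetic data from `make_classification` in place of my house-price data, 5-fold cross-validation through `cross_val_score`, and a hypothetical `tolerance` of 0.01 for deciding when a shallower tree is "close enough" to the best one. None of these choices come from the code I found.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import cross_val_score, train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic binary data (an assumption; it only stands in for the real dataset).
X, y = make_classification(n_samples=500, n_features=8, random_state=0)
X_train, X_test, Y_train, Y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

results = {}  # depth -> (training accuracy, mean cross-validated accuracy)
for depth in range(1, 11):
    clf = DecisionTreeClassifier(max_depth=depth, random_state=0)
    # Mean accuracy over 5 folds, each fold scored on rows the tree did not see.
    cv_mean = cross_val_score(clf, X_train, Y_train, cv=5).mean()
    # Accuracy on the same rows the tree was fitted on.
    train_acc = clf.fit(X_train, Y_train).score(X_train, Y_train)
    results[depth] = (train_acc, cv_mean)
    print(f"max_depth={depth}: train={train_acc:.3f}, cv={cv_mean:.3f}")

# Hypothetical rule: the shallowest depth within `tolerance` of the best CV score.
tolerance = 0.01
best_cv = max(cv for _, cv in results.values())
simplest_depth = min(d for d, (_, cv) in results.items() if cv >= best_cv - tolerance)

# Refit at the chosen depth and check it once on the held-out test set.
final_clf = DecisionTreeClassifier(max_depth=simplest_depth, random_state=0)
final_test_score = final_clf.fit(X_train, Y_train).score(X_test, Y_test)
print("chosen max_depth:", simplest_depth, "test accuracy:", final_test_score)
```

My thinking is that the training score alone would always favor the deepest tree, so the cross-validated score seems like the one to compare across depths, with the test set kept for a final check.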