I have a service running on a machine with 16 GB of RAM and the following JVM configuration:

-Xms6144M

-Xmx6144M

-XX:+UseG1GC

-XX:NewSize=1500M

-XX:NewSize=1800M

-XX:MaxNewSize=2100M

-XX:NewRatio=2

-XX:SurvivorRatio=12

-XX:MaxGCPauseMillis=100

-XX:MaxGCPauseMillis=1000

-XX:GCTimeRatio=9

-XX:-UseAdaptiveSizePolicy

-XX:+PrintAdaptiveSizePolicy
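`NewSize` and `MaxGCPauseMillis` each appear twice with different values, so it may be worth confirming which values the JVM actually ends up using. The following is a minimal sketch, assuming it is run inside (or adapted into) the service's own JVM. It reads the flags through `HotSpotDiagnosticMXBean`; the class name `EffectiveVmOptions` is hypothetical. Outside the service, `jcmd <pid> VM.flags` or `java <same flags> -XX:+PrintFlagsFinal -version` report the same information.

```java
import com.sun.management.HotSpotDiagnosticMXBean;
import com.sun.management.VMOption;
import java.lang.management.ManagementFactory;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical helper: reports the values the running JVM actually uses for the flags above. */
public class EffectiveVmOptions {

    // Flag names from the configuration above, without the -XX: prefix.
    static final List<String> FLAGS = Arrays.asList(
            "UseG1GC", "NewSize", "MaxNewSize", "NewRatio", "SurvivorRatio",
            "MaxGCPauseMillis", "GCTimeRatio", "UseAdaptiveSizePolicy", "PrintAdaptiveSizePolicy");

    /** Maps each flag name to "value (origin)", or to a marker if this JVM has no such flag. */
    static Map<String, String> effectiveOptions(List<String> names) {
        HotSpotDiagnosticMXBean bean =
                ManagementFactory.getPlatformMXBean(HotSpotDiagnosticMXBean.class);
        Map<String, String> result = new LinkedHashMap<>();
        for (String name : names) {
            try {
                VMOption option = bean.getVMOption(name);
                // Origin shows whether the value came from the command line, ergonomics, or the default.
                result.put(name, option.getValue() + " (" + option.getOrigin() + ")");
            } catch (IllegalArgumentException e) {
                // getVMOption throws this for flags the running JVM does not know,
                // e.g. PrintAdaptiveSizePolicy on newer JDKs that moved to unified logging.
                result.put(name, "<not supported by this JVM>");
            }
        }
        return result;
    }

    public static void main(String[] args) {
        effectiveOptions(FLAGS).forEach((name, value) -> System.out.println(name + " = " + value));
    }
}
```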

It has around 20 pollers, each with a ThreadPoolExecutor of size 30 for processing messages. For the first 5-6 hours it processed around 130 messages per second; after that, throughput dropped to only around 40 messages per second.

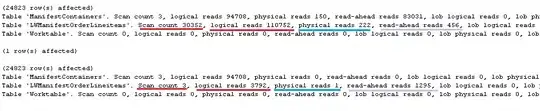
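With around 20 pollers each owning a 30-thread ThreadPoolExecutor, the service can run up to 20 × 30 = 600 worker threads. The sketch below is a hypothetical reconstruction of that setup. The class name, the bounded `ArrayBlockingQueue`, its capacity of 1,000, and `CallerRunsPolicy` are all assumptions, since the post does not say which queue or rejection policy is used. (For comparison, `Executors.newFixedThreadPool` uses an unbounded `LinkedBlockingQueue`, which lets pending messages pile up on the heap whenever processing falls behind polling.) The setup also explains part of what a thread dump will show: an idle worker blocks in its queue's `take()`, which parks the thread, so idle pool threads normally appear as `WAITING` at `Unsafe.park`.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

/** Hypothetical sketch: 20 pollers, each handing messages to its own 30-thread pool. */
public class PollerPools {
    static final int POLLERS = 20;
    static final int THREADS_PER_POLLER = 30;
    // Assumed capacity: a bounded queue keeps a backlog from growing without limit on the heap.
    static final int QUEUE_CAPACITY = 1_000;

    static List<ThreadPoolExecutor> createPools() {
        List<ThreadPoolExecutor> pools = new ArrayList<>();
        for (int i = 0; i < POLLERS; i++) {
            pools.add(new ThreadPoolExecutor(
                    THREADS_PER_POLLER, THREADS_PER_POLLER, // fixed-size pool
                    0L, TimeUnit.MILLISECONDS,
                    new ArrayBlockingQueue<>(QUEUE_CAPACITY),
                    // When the queue is full, the submitting (poller) thread runs the task
                    // itself, which slows polling down instead of growing the backlog.
                    new ThreadPoolExecutor.CallerRunsPolicy()));
        }
        return pools;
    }

    public static void main(String[] args) throws InterruptedException {
        List<ThreadPoolExecutor> pools = createPools();
        int maxWorkers = 0;
        for (ThreadPoolExecutor pool : pools) {
            maxWorkers += pool.getMaximumPoolSize();
        }
        System.out.println("worker threads at full size: " + maxWorkers); // 20 * 30 = 600
        for (ThreadPoolExecutor pool : pools) {
            pool.shutdown();
            pool.awaitTermination(5, TimeUnit.SECONDS);
        }
    }
}
```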

Analyzing the GC logs, I found that Full GCs became very frequent and that more than 1000 MB of data was being promoted from the young to the old generation:

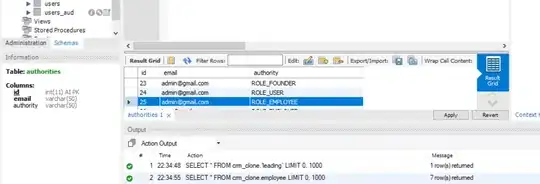
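To quantify how much wall-clock time is being lost to collections as Full GCs become more frequent, the service could periodically sample the standard `GarbageCollectorMXBean` counters. It could then compare the measured fraction against the goal implied by `-XX:GCTimeRatio=9`, which the HotSpot G1 tuning documentation gives as 1/(1 + GCTimeRatio), i.e. 10%. This is a rough sketch: the class name and the one-minute window are assumptions. `getCollectionTime()` is only an approximate accumulated time, and exactly which pauses each G1 bean counts varies between JDK versions.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;

/** Hypothetical helper: estimates the share of wall-clock time spent in GC over a window. */
public class GcOverheadSampler {

    /** Approximate accumulated GC time in ms across all collectors (-1 means "not reported", skipped). */
    static long totalGcTimeMillis() {
        long total = 0;
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            long t = gc.getCollectionTime();
            if (t > 0) {
                total += t;
            }
        }
        return total;
    }

    /** Maximum fraction of time in GC implied by -XX:GCTimeRatio=n, i.e. 1 / (1 + n). */
    static double gcTimeGoal(int gcTimeRatio) {
        return 1.0 / (1 + gcTimeRatio);
    }

    public static void main(String[] args) throws InterruptedException {
        long windowMillis = 60_000; // assumed one-minute sampling window
        long gcBefore = totalGcTimeMillis();
        long start = System.nanoTime();
        Thread.sleep(windowMillis);
        long gcMillis = totalGcTimeMillis() - gcBefore;
        long wallMillis = (System.nanoTime() - start) / 1_000_000;
        System.out.printf("GC: %d ms of %d ms (%.1f%%); goal for GCTimeRatio=9: %.1f%%%n",
                gcMillis, wallMillis, 100.0 * gcMillis / wallMillis, 100.0 * gcTimeGoal(9));
        // Per-collector counts show whether old-generation (full) collections dominate.
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            System.out.println(gc.getName() + ": " + gc.getCollectionCount() + " collections");
        }
    }
}
```

Logging one line per window in the fast early hours and again after the slowdown makes the two phases directly comparable.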

Looking at the heap dump, I see lots of threads in the WAITING state, similar to this: `WAITING at sun.misc.Unsafe.park(Native Method)`. The following classes' objects account for most of the retained size:

I think there may be a small memory leak in the service or its associated libraries that accumulates over time, in which case increasing the heap size will only postpone the problem. Alternatively, because Full GCs have become very frequent, all other threads may be getting stopped very often ("stop the world" pauses). I need help figuring out the root cause of this behaviour.
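One way to tell a slow leak apart from plain allocation pressure is to track old-generation occupancy right after collections. If it keeps climbing across many GCs, live data is accumulating; if it returns to a stable floor, the old generation is churning but not leaking. A minimal sketch, assuming G1 (whose old-generation pool is named `"G1 Old Gen"`) and a hypothetical one-minute sampling interval, fits a least-squares slope to the post-GC samples. The class name is hypothetical, and how often this pool's collection usage is refreshed depends on the JDK version. Comparing two `jmap -histo:live <pid>` snapshots taken hours apart is a complementary check for which classes grow.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryPoolMXBean;
import java.lang.management.MemoryUsage;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper: watches old-gen usage after GC to see whether live data keeps growing. */
public class OldGenTrend {

    /** Old-gen bytes in use right after the most recent collection, or -1 if unavailable. */
    static long oldGenUsedAfterGc() {
        for (MemoryPoolMXBean pool : ManagementFactory.getMemoryPoolMXBeans()) {
            // Pool name under G1; other collectors use different names.
            if (pool.getName().equals("G1 Old Gen")) {
                MemoryUsage usage = pool.getCollectionUsage(); // null if not supported
                return usage == null ? -1 : usage.getUsed();
            }
        }
        return -1;
    }

    /** Least-squares slope of equally spaced samples, in units per sample. */
    static double slope(List<Long> samples) {
        int n = samples.size();
        if (n < 2) {
            return 0.0;
        }
        double meanX = (n - 1) / 2.0;
        double meanY = 0;
        for (long y : samples) {
            meanY += y;
        }
        meanY /= n;
        double num = 0;
        double den = 0;
        for (int i = 0; i < n; i++) {
            double dx = i - meanX;
            num += dx * (samples.get(i) - meanY);
            den += dx * dx;
        }
        return num / den;
    }

    public static void main(String[] args) throws InterruptedException {
        List<Long> samples = new ArrayList<>();
        for (int i = 0; i < 60; i++) { // assumed: one sample per minute for an hour
            long used = oldGenUsedAfterGc();
            if (used >= 0) {
                samples.add(used);
            }
            Thread.sleep(60_000);
        }
        System.out.printf("old gen after GC changes by ~%.1f MB per minute%n",
                slope(samples) / (1024 * 1024));
    }
}
```

A slope that stays clearly positive hour after hour points toward the leak hypothesis; a slope near zero with a high GC time fraction points more toward promotion pressure and GC pauses.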