When using `EmpiricalCovariance` to compute a covariance matrix for high-dimensional data, I would expect the diagonal of this matrix (from the top-left to the bottom-right) to be all ones, since a variable always correlates perfectly with itself. However, this is not the case. Why not?

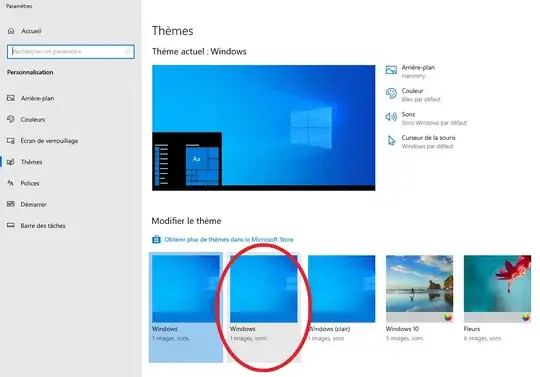

Here is an example, plotted with a seaborns heatmap:

As you can see, the diagonal is lighter than most of the matrix, but it is not as light as the lightest cell.
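A minimal sketch to reproduce the observation is below. The original data isn't shown, so it uses hypothetical synthetic data instead. The 200 samples, the 10 features, and the per-feature scales are assumptions chosen only for illustration. It fits `EmpiricalCovariance`, reads the fitted `covariance_` attribute, and prints the diagonal so it can be compared against 1.

```python
# Minimal reproduction sketch. Synthetic data stands in for the original
# high-dimensional dataset, which is not shown in the question.
import numpy as np
from sklearn.covariance import EmpiricalCovariance

rng = np.random.default_rng(0)

# Hypothetical data: 200 samples, 10 features, each feature drawn
# from a standard normal and then multiplied by a different scale.
scales = np.linspace(0.5, 3.0, 10)
X = rng.normal(size=(200, 10)) * scales

cov = EmpiricalCovariance().fit(X)
matrix = cov.covariance_  # shape (n_features, n_features)

# Inspect the diagonal that appears in the heatmap.
diagonal = np.diag(matrix)
print("diagonal:", np.round(diagonal, 2))
print("all ones?", np.allclose(diagonal, 1.0))
print("max of matrix:", round(float(matrix.max()), 2))

# To reproduce the plot from the question (requires seaborn):
# import seaborn as sns
# sns.heatmap(matrix)
```

With this data the printed diagonal is clearly not all ones, which matches what the heatmap shows.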