I am using Python with OpenCV 3.4.

I have a system composed of 2 cameras that I want to use to track an object and get its trajectory, then its speed.

I can currently calibrate each of my cameras, both intrinsically and extrinsically. I can track my object through the video and get its 2D coordinates in the image plane.

My problem now is that I would like to project my points from both 2D image planes into 3D points.

I've tried functions such as triangulatePoints, but it doesn't seem to work properly.

Here is my current function to get the 3D coordinates. It returns coordinates that seem a little off compared to the real ones:

    def get_3d_coord(left_two_d_coords, right_two_d_coords):
        pt1 = left_two_d_coords.reshape((len(left_two_d_coords), 1, 2))
        pt2 = right_two_d_coords.reshape((len(right_two_d_coords), 1, 2))

        extrinsic_left_camera_matrix, left_distortion_coeffs, extrinsic_left_rotation_vector, \
            extrinsic_left_translation_vector = trajectory_utils.get_extrinsic_parameters(1)
        extrinsic_right_camera_matrix, right_distortion_coeffs, extrinsic_right_rotation_vector, \
            extrinsic_right_translation_vector = trajectory_utils.get_extrinsic_parameters(2)

        # returns arrays of the same size
        (pt1, pt2) = correspondingPoints(pt1, pt2)

        projection1 = computeProjMat(extrinsic_left_camera_matrix,
                                     extrinsic_left_rotation_vector, extrinsic_left_translation_vector)
        projection2 = computeProjMat(extrinsic_right_camera_matrix,
                                     extrinsic_right_rotation_vector, extrinsic_right_translation_vector)

        out = cv2.triangulatePoints(projection1, projection2, pt1, pt2)

        oc = []
        for idx, elem in enumerate(out[0]):
            oc.append((out[0][idx], out[1][idx], out[2][idx], out[3][idx]))
        oc = np.array(oc, dtype=np.float32)

        point3D = []
        for idx, elem in enumerate(oc):
            W = out[3][idx]
            obj = [None] * 4
            obj[0] = out[0][idx] / W
            obj[1] = out[1][idx] / W
            obj[2] = out[2][idx] / W
            obj[3] = 1
            pt3d = [obj[0], obj[1], obj[2]]
            point3D.append(pt3d)

        return point3D
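The body of computeProjMat is not shown above. For reference, here is a minimal sketch of what such a helper is assumed to do: build the 3x4 projection matrix P = K [R | t] from the camera matrix, the rotation vector and the translation vector. The names `rodrigues` and `compute_proj_mat` are hypothetical and are not the functions from my code; the rotation-vector conversion is written out in NumPy here, and `cv2.Rodrigues` should give the same rotation matrix.

```python
import numpy as np


def rodrigues(rvec):
    """Convert a rotation vector (axis * angle, in radians) to a 3x3 rotation matrix."""
    rvec = np.asarray(rvec, dtype=float).reshape(3)
    theta = np.linalg.norm(rvec)
    if theta < 1e-12:
        # No rotation: avoid dividing by zero.
        return np.eye(3)
    k = rvec / theta
    # Skew-symmetric cross-product matrix of the unit axis k.
    k_cross = np.array([[0.0, -k[2], k[1]],
                        [k[2], 0.0, -k[0]],
                        [-k[1], k[0], 0.0]])
    return np.eye(3) + np.sin(theta) * k_cross + (1.0 - np.cos(theta)) * (k_cross @ k_cross)


def compute_proj_mat(camera_matrix, rvec, tvec):
    """Hypothetical helper: return the 3x4 projection matrix K [R | t]."""
    rotation = rodrigues(rvec)
    translation = np.asarray(tvec, dtype=float).reshape(3, 1)
    return np.asarray(camera_matrix, dtype=float) @ np.hstack((rotation, translation))
```

A projection matrix built this way maps world points to pixel coordinates of an ideal (undistorted) camera, so it does not account for the distortion coefficients.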

Here are some screenshots of the 2D trajectory that I get for both of my cameras:

Here are some screenshots of the 3D trajectory that we get for the same cameras.

As you can see, the 2D trajectory doesn't look like the 3D one, and I am not able to get an accurate distance between two points. I would just like to get real-world coordinates, that is, to know the (almost) exact distance walked by a person, even along a curved path.
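To make the goal concrete, this is roughly how I would expect to turn the 3D points into a walked distance and a speed once the triangulation is correct. It is only a sketch: `path_length` and `average_speed` are hypothetical names, and it assumes one 3D point per video frame, a constant frame rate `fps`, and points expressed in real-world units.

```python
import numpy as np


def path_length(points_3d):
    """Sum of straight-line distances between consecutive 3D points."""
    pts = np.asarray(points_3d, dtype=float)
    if len(pts) < 2:
        return 0.0
    segments = np.diff(pts, axis=0)  # vectors between consecutive points
    return float(np.linalg.norm(segments, axis=1).sum())


def average_speed(points_3d, fps):
    """Path length divided by elapsed time, assuming one point per frame."""
    pts = np.asarray(points_3d, dtype=float)
    if len(pts) < 2:
        return 0.0
    duration = (len(pts) - 1) / float(fps)  # seconds between first and last point
    return path_length(pts) / duration


# Two segments of length 5 and 12:
print(path_length([[0, 0, 0], [3, 4, 0], [3, 4, 12]]))  # 17.0
```

Summing the segment lengths approximates a curved path by a polyline, so the result depends on how densely the trajectory is sampled.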

EDIT to add reference data and examples

Here are some examples and input data to reproduce the problem. First, the 2D points for camera 1:

546,357

646,351

767,357

879,353

986,360

1079,365

1152,364

Corresponding 2D points for camera 2:

236,305

313,302

414,308

532,308

647,314

752,320

851,323

3D points that we get from triangulatePoints:

"[0.15245444, 0.30141047, 0.5444277]"

"[0.33479974, 0.6477136, 0.25396818]"

"[0.6559921, 1.0416716, -0.2717265]"

"[1.1381898, 1.5703914, -0.87318224]"

"[1.7568599, 1.9649554, -1.5008119]"

"[2.406788, 2.302272, -2.0778883]"

"[3.078426, 2.6655817, -2.6113863]"

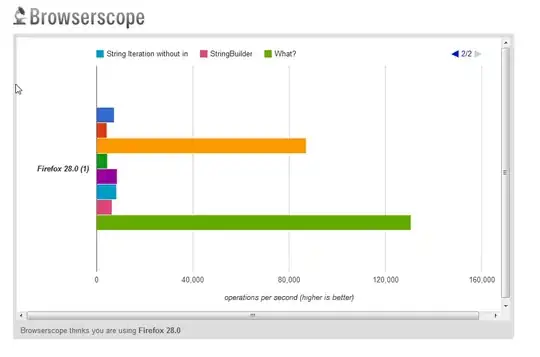

In the following images, we can see the 2D trajectory (top line) and the 3D points reprojected into 2D (bottom line). Colors alternate to show which 3D point corresponds to which 2D point.

Finally, here is the data needed to reproduce the problem.

camera 1 : camera matrix

5.462001610064596662e+02 0.000000000000000000e+00 6.382260289544193483e+02

0.000000000000000000e+00 5.195528638702176067e+02 3.722480290221320161e+02

0.000000000000000000e+00 0.000000000000000000e+00 1.000000000000000000e+00

camera 2 : camera matrix

4.302353276501239066e+02 0.000000000000000000e+00 6.442674231451971991e+02

0.000000000000000000e+00 4.064124751062329324e+02 3.730721752718034736e+02

0.000000000000000000e+00 0.000000000000000000e+00 1.000000000000000000e+00

camera 1 : distortion vector

-1.039009381799949928e-02 -6.875769941694849507e-02 5.573643708806085006e-02 -7.298826373638074051e-04 2.195279856716004369e-02

camera 2 : distortion vector

-8.089289768586239993e-02 6.376634681503455396e-04 2.803641672679824115e-02 7.852965318823987989e-03 1.390248981867302919e-03

camera 1 : rotation vector

1.643658457134109296e+00

-9.626823326237364531e-02

1.019865700311696488e-01

camera 2 : rotation vector

1.698451227150894471e+00

-4.734769748661146055e-02

5.868343803315514279e-02

camera 1 : translation vector

-5.004031689969588026e-01

9.358682517577661120e-01

2.317689087311113116e+00

camera 2 : translation vector

-4.225788801112133619e+00

9.519952012307866251e-01

2.419197507326224184e+00

camera 1 : object points

0 0 0

0 3 0

0.5 0 0

0.5 3 0

1 0 0

1 3 0

1.5 0 0

1.5 3 0

2 0 0

2 3 0

camera 2 : object points

4 0 0

4 3 0

4.5 0 0

4.5 3 0

5 0 0

5 3 0

5.5 0 0

5.5 3 0

6 0 0

6 3 0

camera 1 : image points

5.180000000000000000e+02 5.920000000000000000e+02

5.480000000000000000e+02 4.410000000000000000e+02

6.360000000000000000e+02 5.910000000000000000e+02

6.020000000000000000e+02 4.420000000000000000e+02

7.520000000000000000e+02 5.860000000000000000e+02

6.500000000000000000e+02 4.430000000000000000e+02

8.620000000000000000e+02 5.770000000000000000e+02

7.000000000000000000e+02 4.430000000000000000e+02

9.600000000000000000e+02 5.670000000000000000e+02

7.460000000000000000e+02 4.430000000000000000e+02

camera 2 : image points

6.080000000000000000e+02 5.210000000000000000e+02

6.080000000000000000e+02 4.130000000000000000e+02

7.020000000000000000e+02 5.250000000000000000e+02

6.560000000000000000e+02 4.140000000000000000e+02

7.650000000000000000e+02 5.210000000000000000e+02

6.840000000000000000e+02 4.150000000000000000e+02

8.400000000000000000e+02 5.190000000000000000e+02

7.260000000000000000e+02 4.160000000000000000e+02

9.120000000000000000e+02 5.140000000000000000e+02

7.600000000000000000e+02 4.170000000000000000e+02
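One way to cross-check the output of triangulatePoints independently of OpenCV would be a plain linear (DLT) triangulation of a single correspondence. The sketch below is an assumption about how to do that, not the method used in my code: `triangulate_dlt` is a hypothetical name, it expects two 3x4 projection matrices of the form K [R | t] that share one world frame, and it takes pixel coordinates that are already undistorted.

```python
import numpy as np


def triangulate_dlt(proj1, proj2, uv1, uv2):
    """Linear (DLT) triangulation of one point seen by two cameras.

    proj1, proj2: 3x4 projection matrices in the same world frame.
    uv1, uv2: (u, v) pixel coordinates of the same point in each image.
    Returns the 3D point as a length-3 array.
    """
    proj1 = np.asarray(proj1, dtype=float)
    proj2 = np.asarray(proj2, dtype=float)
    # Each view contributes two linear equations in the homogeneous point X.
    equations = np.vstack([
        uv1[0] * proj1[2] - proj1[0],
        uv1[1] * proj1[2] - proj1[1],
        uv2[0] * proj2[2] - proj2[0],
        uv2[1] * proj2[2] - proj2[1],
    ])
    # The least-squares solution is the right singular vector associated
    # with the smallest singular value.
    _, _, vt = np.linalg.svd(equations)
    homogeneous = vt[-1]
    return homogeneous[:3] / homogeneous[3]
```

If this and triangulatePoints agree on the same inputs, the problem is more likely in the inputs (projection matrices, distortion, point ordering) than in the triangulation call itself.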