I read this article about how backpropagation works, and I understood everything they said. They said that to find the gradient we have to take a partial derivative of the cost function to each weight/bias. However, to explain this they used a network which had one node per layer. How do you do backpropagation for a network which has more than one node per layer?

1 Answers

I haven't checked too thoroughly on the math I put forward here, so if anyone sees an error here, please correct me!

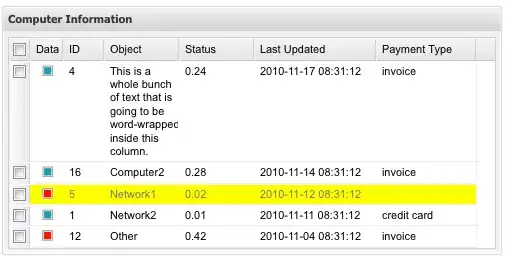

Anyways, the image down here is a very simple example of backpropagation. As you can see, we're interested in the gradients of the Loss function L (in this case the loss function is extremely simple and not good outside this example) with respect to the weights W in order to update the weights according to the gradient descent optimizer (there are other, better optimizers, but gradient descent is the easiest one to understand, so I suggest you read up on it). I guess the key to your understanding is the first equation in the box, where you can see that you first use the chain rule and then sum up all the gradients this gives you.

For further understanding, I suggest that you write up all your equations for forward propagation, and then calculate the chain rule for dL/dW and dL/da at each layer. It might also be easier if you break up the equations even further and set a = f(z), z = W * X (in order to make the chain rule more intuitive; i.e. dL/dW = dL/da*da/dz*dz/dW). There are also multiple guides out there that you can read up on for further understanding.

- 2,220

- 23

- 32

-

You said Loss = output of a1 + output of a2 – Arjun Apr 22 '19 at 18:57

-

How would you do this if there were 2 nodes in the first layer? – Arjun Apr 22 '19 at 18:59

-

Nothing really changes if there's 2 nodes in the first layer. We are only calculating the gradient w.r.t. each individual weight. You can think of it as optimizing each weight individually (independent of all other weights) w.r.t. the loss function. Also, the equations above are with scalars. In a real setting, these would be matrix equations. – Andreas Forslöw Apr 22 '19 at 19:18