The Object Detection API uses TF-Slim to build its models. TF-Slim is a lightweight TensorFlow library that provides building blocks for CNNs, and it ships with many predefined CNN architectures.

In the Object Detection API, these CNNs are called feature extractors. There are wrapper classes for the feature extractors, and they provide a uniform interface across different model architectures.
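To make the "uniform interface" idea concrete, here is a minimal pure-Python sketch. `FeatureExtractor`, `ToyResnetExtractor` and `run_detector` are hypothetical names, not the API's classes. The two method names are modeled on the first-stage/second-stage split that the Faster R-CNN extractors use. The toy extractors only record which layers would run, and they skip the cropping step between the two stages. The split used here (root plus blocks 1 to 3 in the first stage, block4 in the second) is an assumption based on the second-stage code quoted later in this answer.

```python
from abc import ABC, abstractmethod


class FeatureExtractor(ABC):
    """Hypothetical stand-in for the wrapper classes: a uniform two-stage interface."""

    @abstractmethod
    def extract_proposal_features(self, inputs):
        """First stage: backbone layers whose output feeds the proposal generator."""

    @abstractmethod
    def extract_box_classifier_features(self, proposal_features):
        """Second stage: layers applied to each cropped proposal."""


class ToyResnetExtractor(FeatureExtractor):
    def __init__(self, name, block3_units):
        self.name = name
        self.block3_units = block3_units

    def extract_proposal_features(self, inputs):
        # Only records which (toy) layers run; a real extractor returns tensors.
        return inputs + [f"{self.name}/root", f"{self.name}/block1",
                         f"{self.name}/block2",
                         f"{self.name}/block3x{self.block3_units}"]

    def extract_box_classifier_features(self, proposal_features):
        # Assumed: the second stage reuses the backbone's last block.
        return proposal_features + [f"{self.name}/block4"]


def run_detector(extractor: FeatureExtractor, inputs):
    # The detector only talks to the interface, so backbones are swappable.
    features = extractor.extract_proposal_features(inputs)
    return extractor.extract_box_classifier_features(features)


print(run_detector(ToyResnetExtractor("resnet_v1_50", 6), ["image"]))
print(run_detector(ToyResnetExtractor("resnet_v1_101", 23), ["image"]))
```

Swapping ResNet-50 for ResNet-101 changes only the object passed to `run_detector`. That is the design benefit of the wrapper classes.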

For example, the model faster_rcnn_resnet101 uses ResNet-101 as its feature extractor, so there is a corresponding wrapper class, FasterRCNNResnetV1FeatureExtractor, in the file faster_rcnn_resnet_v1_feature_extractor.py under the models directory. That file imports:

```python
import tensorflow as tf  # TF 1.x: tf.contrib was removed in TF 2.x

from nets import resnet_utils
from nets import resnet_v1

slim = tf.contrib.slim
```

In this class you will find that the feature extractors are built with slim. `nets` is the TF-Slim module that contains the predefined CNNs, so the layer-definition code you are looking for lives in the `nets` package. Here is an excerpt from the resnet_v1 module (nets/resnet_v1.py):

```python
def resnet_v1_block(scope, base_depth, num_units, stride):
  """Helper function for creating a resnet_v1 bottleneck block.

  Args:
    scope: The scope of the block.
    base_depth: The depth of the bottleneck layer for each unit.
    num_units: The number of units in the block.
    stride: The stride of the block, implemented as a stride in the last unit.
      All other units have stride=1.

  Returns:
    A resnet_v1 bottleneck block.
  """
  return resnet_utils.Block(scope, bottleneck, [{
      'depth': base_depth * 4,
      'depth_bottleneck': base_depth,
      'stride': 1
  }] * (num_units - 1) + [{
      'depth': base_depth * 4,
      'depth_bottleneck': base_depth,
      'stride': stride
  }])
```
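To see what `resnet_v1_block` actually produces, here is a TensorFlow-free sketch. `Block` is a hypothetical `namedtuple` standing in for `resnet_utils.Block`, and `bottleneck_placeholder` stands in for `resnet_v1.bottleneck`. Only the per-unit argument list is reproduced. The output shows that every unit has output depth `4 * base_depth`, and only the last unit carries the block's stride.

```python
from collections import namedtuple

# Hypothetical stand-in for resnet_utils.Block: (scope, unit_fn, args).
Block = namedtuple("Block", ["scope", "unit_fn", "args"])


def bottleneck_placeholder(**kwargs):
    """Placeholder for resnet_v1.bottleneck; never called in this sketch."""
    raise NotImplementedError


def resnet_v1_block(scope, base_depth, num_units, stride):
    unit = {"depth": base_depth * 4, "depth_bottleneck": base_depth}
    # Every unit except the last uses stride 1; the last carries the block stride.
    args = [dict(unit, stride=1) for _ in range(num_units - 1)]
    args.append(dict(unit, stride=stride))
    return Block(scope, bottleneck_placeholder, args)


block1 = resnet_v1_block("block1", base_depth=64, num_units=3, stride=2)
print([a["stride"] for a in block1.args])                    # [1, 1, 2]
print(block1.args[0]["depth"], block1.args[0]["depth_bottleneck"])  # 256 64
```

The original uses `[{...}] * (num_units - 1)`, which repeats the same dict object. That is harmless because the dicts are never mutated. The sketch builds separate dicts, but the resulting values are identical.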

```python
def resnet_v1_50(inputs,
                 num_classes=None,
                 is_training=True,
                 global_pool=True,
                 output_stride=None,
                 spatial_squeeze=True,
                 store_non_strided_activations=False,
                 min_base_depth=8,
                 depth_multiplier=1,
                 reuse=None,
                 scope='resnet_v1_50'):
  """ResNet-50 model of [1]. See resnet_v1() for arg and return description."""
  depth_func = lambda d: max(int(d * depth_multiplier), min_base_depth)
  blocks = [
      resnet_v1_block('block1', base_depth=depth_func(64), num_units=3,
                      stride=2),
      resnet_v1_block('block2', base_depth=depth_func(128), num_units=4,
                      stride=2),
      resnet_v1_block('block3', base_depth=depth_func(256), num_units=6,
                      stride=2),
      resnet_v1_block('block4', base_depth=depth_func(512), num_units=3,
                      stride=1),
  ]
  return resnet_v1(inputs, blocks, num_classes, is_training,
                   global_pool=global_pool, output_stride=output_stride,
                   include_root_block=True, spatial_squeeze=spatial_squeeze,
                   store_non_strided_activations=store_non_strided_activations,
                   reuse=reuse, scope=scope)
```
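Two small pieces of arithmetic in `resnet_v1_50` are easy to check by hand. The first is how `depth_multiplier` and `min_base_depth` shrink the bottleneck depths. The second is the nominal output stride obtained from the block strides `[2, 2, 2, 1]`. This sketch assumes the root block (7x7 conv with stride 2 followed by a stride-2 max pool) downsamples by 4. The helper name `nominal_output_stride` is hypothetical.

```python
def depth_func(d, depth_multiplier=1.0, min_base_depth=8):
    # Same formula as in resnet_v1_50: scale the depth, but never below the floor.
    return max(int(d * depth_multiplier), min_base_depth)


def nominal_output_stride(block_strides, root_stride=4):
    # Assumption: the root block downsamples by 4 before block1.
    stride = root_stride
    for s in block_strides:
        stride *= s
    return stride


base_depths = (64, 128, 256, 512)
print([depth_func(d) for d in base_depths])        # [64, 128, 256, 512]
print([depth_func(d, 0.25) for d in base_depths])  # [16, 32, 64, 128]
print([depth_func(d, 0.1) for d in base_depths])   # [8, 12, 25, 51]
print(nominal_output_stride([2, 2, 2, 1]))         # 32
```

If you pass a smaller `output_stride` (for example 16), slim stops downsampling once that stride is reached and uses atrous convolution in the remaining units. The spatial resolution of the feature map stays higher as a result.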

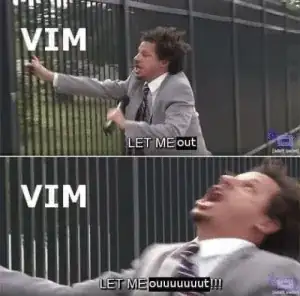

The code above shows how a ResNet-50 model is built. I chose ResNet-50 because ResNet-101 follows exactly the same pattern, only with more layers: ResNet-101 differs only in block3, which has 23 units instead of 6. Note that ResNet-50 has 4 blocks containing [3, 4, 6, 3] units respectively, and you can see these 4 blocks in the ResNet-50 diagram.
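The unit counts explain the names. Each bottleneck unit contains three convolutions (1x1, 3x3, 1x1). Adding the root 7x7 convolution and the final classification layer gives the familiar layer counts. By convention, shortcut projection convolutions are not counted. This is a quick arithmetic check, not code from the library.

```python
# Units per block: resnet_v1_50 as defined above; resnet_v1_101 changes only block3.
RESNET_UNITS = {"resnet_v1_50": [3, 4, 6, 3], "resnet_v1_101": [3, 4, 23, 3]}


def count_weight_layers(units_per_block):
    # Three convolutions per bottleneck unit; shortcut projections are not counted.
    conv_in_blocks = 3 * sum(units_per_block)
    return 1 + conv_in_blocks + 1  # + root 7x7 conv + final classification layer


for name, units in RESNET_UNITS.items():
    print(name, count_weight_layers(units))
# resnet_v1_50 50
# resnet_v1_101 101
```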

That covers the ResNet part. The features extracted by the first-stage feature extractor (ResNet-101) are fed to the proposal generator, which generates region proposals. These regions, together with the features, are then fed into the box classifier for class prediction and bounding-box regression.
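As a rough illustration of what the proposal generator receives, this sketch computes the first-stage feature-map size and the number of anchors for a 600x1024 input. Several values here are assumptions, not values read from your config. The first stage is assumed to stop after block3, with depth 256 * 4 = 1024 for ResNet-101, at an output stride of 16. The anchor grid is assumed to use 4 scales and 3 aspect ratios, as in common sample configs. Spatial sizes are assumed to round up as with SAME padding. `first_stage_shapes` is a hypothetical helper.

```python
import math


def first_stage_shapes(height, width, feature_stride=16, feature_depth=1024,
                       scales=(0.25, 0.5, 1.0, 2.0), aspect_ratios=(0.5, 1.0, 2.0)):
    # Assumed: SAME padding, so each spatial dimension is rounded up.
    feat_h = math.ceil(height / feature_stride)
    feat_w = math.ceil(width / feature_stride)
    # One anchor per (scale, aspect ratio) pair at every feature-map location.
    anchors_per_location = len(scales) * len(aspect_ratios)
    return {
        "feature_map": (feat_h, feat_w, feature_depth),
        "num_anchors": feat_h * feat_w * anchors_per_location,
    }


print(first_stage_shapes(600, 1024))
# {'feature_map': (38, 64, 1024), 'num_anchors': 29184}
```

The proposal generator scores all of these anchors, and only the top proposals, after non-max suppression, go on to the box classifier.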

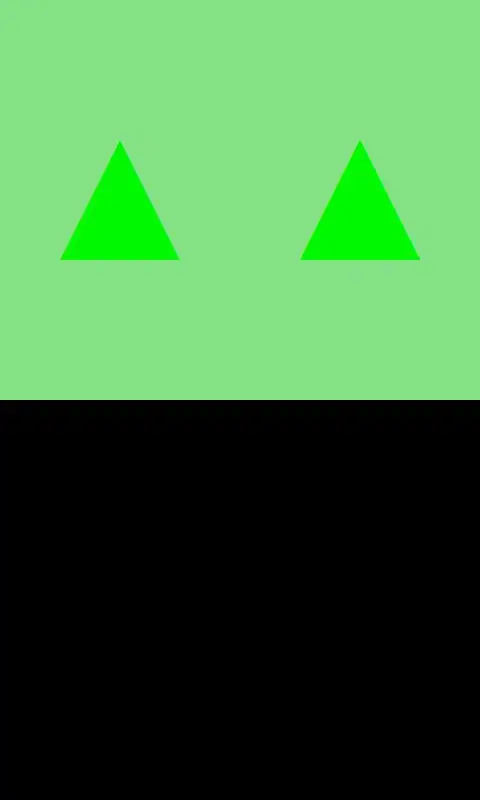

The Faster R-CNN part is specified in `meta_architectures`. Meta-architectures are a recipe for converting classification architectures into detection architectures; in this case, from ResNet-101 to Faster R-CNN. Here is a diagram of the Faster R-CNN meta-architecture (source).

In the box classifier part of the diagram there are also pooling operations (on each cropped region) and convolutional operations (to extract features from the cropped region). In faster_rcnn_meta_arch.py, this line is the max-pool operation. The convolutions that follow are performed in the feature extractor class again, this time for the second stage, and there you can clearly see another ResNet block (block4) being used:
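Here is the shape bookkeeping for the second stage as a small sketch. It assumes an initial crop size of 14, a 2x2 max pool with stride 2 and VALID padding, and 300 proposals per image. These are typical sample-config values, not guaranteed defaults, so check your `pipeline.config`. It also assumes block4 runs with stride 1 and outputs depth 2048. `second_stage_shape` is a hypothetical helper.

```python
def second_stage_shape(batch_size, max_num_proposals, initial_crop_size=14,
                       maxpool_kernel_size=2, maxpool_stride=2, block4_depth=2048):
    # Every proposal is cropped (and resized) to initial_crop_size x initial_crop_size.
    crop = initial_crop_size
    # VALID max pooling: floor((crop - kernel) / stride) + 1.
    pooled = (crop - maxpool_kernel_size) // maxpool_stride + 1
    # block4 uses stride 1 in every unit, so the spatial size is unchanged.
    return (batch_size * max_num_proposals, pooled, pooled, block4_depth)


print(second_stage_shape(batch_size=1, max_num_proposals=300))
# (300, 7, 7, 2048)
```

The leading dimension is `batch_size * max_num_proposals`, matching the shapes documented in `_extract_box_classifier_features`. Each proposal is processed like an independent small image.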

```python
def _extract_box_classifier_features(self, proposal_feature_maps, scope):
  """Extracts second stage box classifier features.

  Args:
    proposal_feature_maps: A 4-D float tensor with shape
      [batch_size * self.max_num_proposals, crop_height, crop_width, depth]
      representing the feature map cropped to each proposal.
    scope: A scope name (unused).

  Returns:
    proposal_classifier_features: A 4-D float tensor with shape
      [batch_size * self.max_num_proposals, height, width, depth]
      representing box classifier features for each proposal.
  """
  with tf.variable_scope(self._architecture, reuse=self._reuse_weights):
    with slim.arg_scope(
        resnet_utils.resnet_arg_scope(
            batch_norm_epsilon=1e-5,
            batch_norm_scale=True,
            weight_decay=self._weight_decay)):
      with slim.arg_scope([slim.batch_norm],
                          is_training=self._train_batch_norm):
        blocks = [
            resnet_utils.Block('block4', resnet_v1.bottleneck, [{
                'depth': 2048,
                'depth_bottleneck': 512,
                'stride': 1
            }] * 3)
        ]
        proposal_classifier_features = resnet_utils.stack_blocks_dense(
            proposal_feature_maps, blocks)
  return proposal_classifier_features
```
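The hand-built second-stage block has the same configuration as ResNet's own block4 at `depth_multiplier=1`: base depth 512, 3 units, stride 1 (stride 1 already in `resnet_v1_50` above). This TensorFlow-free check compares the two unit lists. `Block` is a hypothetical `namedtuple` standing in for `resnet_utils.Block`, and the unit function is left as `None` because only the arguments are compared.

```python
from collections import namedtuple

# Hypothetical stand-in for resnet_utils.Block, used only for comparison.
Block = namedtuple("Block", ["scope", "unit_fn", "args"])


def resnet_v1_block(scope, base_depth, num_units, stride, unit_fn=None):
    # Mirrors the resnet_v1.py helper; unit_fn is a placeholder, not a real layer.
    return Block(scope, unit_fn, [{
        "depth": base_depth * 4, "depth_bottleneck": base_depth, "stride": 1
    }] * (num_units - 1) + [{
        "depth": base_depth * 4, "depth_bottleneck": base_depth, "stride": stride
    }])


# The block written out by hand in _extract_box_classifier_features:
second_stage = Block("block4", None,
                     [{"depth": 2048, "depth_bottleneck": 512, "stride": 1}] * 3)

# ResNet's own block4 configuration:
helper = resnet_v1_block("block4", base_depth=512, num_units=3, stride=1)
print(second_stage == helper)  # True
```

In other words, the first stage runs the backbone only up to block3, and block4 is reused as the per-proposal box classifier head.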