If NPF drops a packet, does that mean the packet won't be captured, or that it won't be sent/received?

The packet will not be captured, but it will still be delivered to the rest of the network stack. This is, I suppose, for two reasons:

Packet capture tools are mostly used for diagnostics, so they tend to follow a "don't make things worse" philosophy. Every packet capture tool I know of lets packets keep flowing past it, even when it can't keep up.

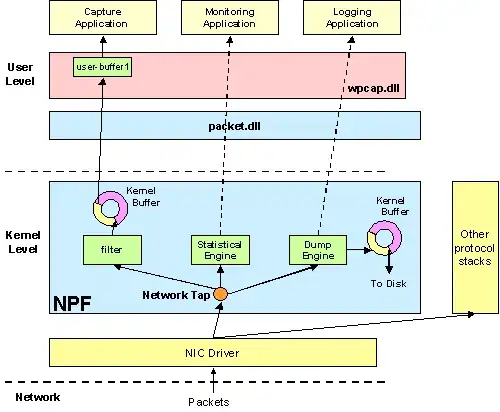

NPF (the WinPcap driver that Wireshark uses) is, in particular, architected in a way that prevents it from blocking or dropping traffic. Even if you were willing to modify NPF a bit, you couldn't make it do that. The reason is that NPF is implemented as a protocol driver. As a protocol driver, it's a peer of TCPIP, and it cannot directly interfere with what TCPIP does. (It's a small miracle that NPF can even see what TCPIP transmits; this is done through the magic of layer-2 loopback, which is unrelated to layer-3 loopback addresses such as ::1 and 127.0.0.1.)

The nmap project has a fork of NPF that implements it as an NDIS filter driver. That kind of driver can block, delay, rewrite, or inject traffic. So if you want to change the first point above (the "don't make things worse" philosophy), start with nmap's fork rather than the "official" WinPcap.

(And, in general, I personally would recommend nmap's fork, even if you don't need to drop traffic. Filter drivers will be much faster than a protocol driver that puts the network adapter into layer-2-loopback mode.)

Once you look at nmap-npf, you'll find the same callbacks as in the NDIS sample filter driver, such as FilterReceiveNetBufferLists.

Dropping packets is actually pretty easy ;) There are some gotchas, though, so let's look at some examples.

On the transmit path, we get a linked list of NBLs (NET_BUFFER_LISTs), and we want to split it into two lists: one to drop and one to keep sending. A single NBL can contain multiple packets (NET_BUFFERs), but every packet in it is guaranteed to belong to the same "stream" (e.g., the same TCP socket). So you can usually make the simplifying assumption that every packet in an NBL gets treated the same way: if you want to drop one, you want to drop them all.

If that assumption doesn't hold, i.e., you want to drop some but not all of the packets in an NBL, then you need to do something more complicated. You can't directly remove a single NET_BUFFER from a NET_BUFFER_LIST; instead, you must clone the NET_BUFFER_LIST and copy down only the NET_BUFFERs you want to keep.

Since this is a free forum, I'm only going to give you an example of the simple and common case ;)

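```c
VOID
FilterSendNetBufferLists(
    NDIS_HANDLE filterModuleContext,
    PNET_BUFFER_LIST nblChain,
    NDIS_PORT_NUMBER portNumber,
    ULONG sendFlags)
{
    // MY_FILTER is a placeholder for your own per-module context (allocated in
    // FilterAttach); it is assumed to hold the NdisFilterHandle NDIS gave you.
    NDIS_HANDLE filterHandle = ((MY_FILTER *)filterModuleContext)->FilterHandle;
    NET_BUFFER_LIST *drop = NULL;
    NET_BUFFER_LIST *keep = NULL;
    NET_BUFFER_LIST *next = NULL;
    NET_BUFFER_LIST *nbl = NULL;

    for (nbl = nblChain; nbl != NULL; nbl = next) {
        next = nbl->Next;

        // If the first NB in the NBL is drop-worthy, then all NBs are.
        if (MyShouldDropPacket(nbl->FirstNetBuffer)) {
            nbl->Next = drop;
            drop = nbl;
            nbl->Status = NDIS_STATUS_FAILURE; // tell the protocol it failed
        } else {
            nbl->Next = keep;
            keep = nbl;
        }
    }

    // The loop above reversed the order of the packets; undo that here.
    keep = ReverseNblChain(keep);

    // ... do something with the NBLs you want to keep ...

    // Send the keepers down the stack to be transmitted by the NIC.
    if (keep != NULL) {
        NdisFSendNetBufferLists(filterHandle, keep, portNumber, sendFlags);
    }

    // Complete the dropped packets back up to whoever tried to send them.
    // The completion flags must say whether we're running at DISPATCH_LEVEL.
    if (drop != NULL) {
        NdisFSendCompleteNetBufferLists(
            filterHandle,
            drop,
            (sendFlags & NDIS_SEND_FLAGS_DISPATCH_LEVEL)
                ? NDIS_SEND_COMPLETE_FLAGS_DISPATCH_LEVEL : 0);
    }
}
```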
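To see the partitioning logic outside the kernel, here is a user-mode model of the send-path loop. It's only a sketch under stated assumptions: MockNbl is a hypothetical stand-in for NET_BUFFER_LIST that keeps just a Next pointer and a precomputed drop decision (in place of MyShouldDropPacket), so it compiles without the WDK. It shows that one decision per NBL splits the chain cleanly, and that reversing the keep chain restores the original order.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

// Hypothetical user-mode stand-in for NET_BUFFER_LIST (not the ndis.h type).
typedef struct MockNbl {
    struct MockNbl *Next;
    int id;          // position in the original chain, for checking order
    bool dropWorthy; // stands in for MyShouldDropPacket(nbl->FirstNetBuffer)
} MockNbl;

// Pop each node off the old chain and push it onto a new one.
static MockNbl *ReverseChain(MockNbl *chain)
{
    MockNbl *head = NULL;
    MockNbl *next;
    for (MockNbl *nbl = chain; nbl != NULL; nbl = next) {
        next = nbl->Next;
        nbl->Next = head;
        head = nbl;
    }
    return head;
}

// Same push-to-head partition as the send path, then undo the reversal.
static void PartitionChain(MockNbl *chain, MockNbl **keepOut, MockNbl **dropOut)
{
    MockNbl *keep = NULL;
    MockNbl *drop = NULL;
    MockNbl *next;
    for (MockNbl *nbl = chain; nbl != NULL; nbl = next) {
        next = nbl->Next;
        if (nbl->dropWorthy) {
            nbl->Next = drop;
            drop = nbl;
        } else {
            nbl->Next = keep;
            keep = nbl;
        }
    }
    *keepOut = ReverseChain(keep); // restore original order for TCPIP
    *dropOut = drop;               // order of dropped NBLs doesn't matter
}
```

With a chain of five NBLs where the second and fourth are drop-worthy, the keep chain comes out as the first, third, and fifth NBLs in their original order, while the drop chain is left reversed.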

On the receive path, you're guaranteed that there's only one NET_BUFFER per NET_BUFFER_LIST. (The NIC can't fully know which packets belong to the same stream, so no grouping has been done yet.) So that gotcha is gone, but there's a new one: you must check the NDIS_RECEIVE_FLAGS_RESOURCES flag. Forgetting to check it is the #1 cause of time lost chasing bugs in filter drivers, so I have to make a big deal about it.

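```c
VOID
FilterReceiveNetBufferLists(
    NDIS_HANDLE filterModuleContext,
    PNET_BUFFER_LIST nblChain,
    NDIS_PORT_NUMBER portNumber,
    ULONG count,
    ULONG receiveFlags)
{
    // MY_FILTER: placeholder for your own per-module context, assumed to
    // hold the NdisFilterHandle NDIS gave you in FilterAttach.
    NDIS_HANDLE filterHandle = ((MY_FILTER *)filterModuleContext)->FilterHandle;
    NET_BUFFER_LIST *drop = NULL;
    NET_BUFFER_LIST *keep = NULL;
    NET_BUFFER_LIST *next = NULL;
    NET_BUFFER_LIST *nbl = NULL;

    for (nbl = nblChain; nbl != NULL; nbl = next) {
        next = nbl->Next;

        // There's only one packet in the NBL.
        if (MyShouldDropPacket(nbl->FirstNetBuffer)) {
            nbl->Next = drop;
            drop = nbl;
            count -= 1; // keep NumberOfNetBufferLists in sync with the keep chain
        } else {
            nbl->Next = keep;
            keep = nbl;
        }
    }

    keep = ReverseNblChain(keep);

    // ... do something with the NBLs you want to keep ...

    // Pass the keepers up the stack to be processed by protocols.
    if (keep != NULL) {
        NdisFIndicateReceiveNetBufferLists(filterHandle, keep, portNumber, count, receiveFlags);
    }

    // Checking this flag is critical; never ever call
    // NdisFReturnNetBufferLists if the flag is set. In that case the driver
    // below regains ownership of the NBLs as soon as this function returns.
    if (drop != NULL && 0 == (NDIS_RECEIVE_FLAGS_RESOURCES & receiveFlags)) {
        NdisFReturnNetBufferLists(
            filterHandle,
            drop,
            (receiveFlags & NDIS_RECEIVE_FLAGS_DISPATCH_LEVEL)
                ? NDIS_RETURN_FLAGS_DISPATCH_LEVEL : 0);
    }
}
```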
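The receive path has two pieces of bookkeeping: the count you indicate up must match the length of the keep chain, and whether you may return the dropped NBLs yourself depends on NDIS_RECEIVE_FLAGS_RESOURCES. Here is a user-mode model of just those two rules, sketched under assumptions: RxNbl is a hypothetical stand-in for NET_BUFFER_LIST, MOCK_RECEIVE_FLAGS_RESOURCES is a hypothetical flag with an arbitrary value (not the real ndis.h constant), and order restoration is left out since ReverseNblChain handles it.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

// Hypothetical user-mode stand-in for NET_BUFFER_LIST (one NB per NBL on receive).
typedef struct RxNbl {
    struct RxNbl *Next;
    bool dropWorthy; // stands in for MyShouldDropPacket(nbl->FirstNetBuffer)
} RxNbl;

// Hypothetical stand-in for NDIS_RECEIVE_FLAGS_RESOURCES; the value is arbitrary.
#define MOCK_RECEIVE_FLAGS_RESOURCES 0x1u

typedef struct {
    RxNbl *keep;        // chain to indicate up
    unsigned keepCount; // NumberOfNetBufferLists to pass with it
    RxNbl *drop;        // chain of dropped NBLs
    bool returnDropped; // whether the filter itself should return `drop`
} RxDecision;

static unsigned ChainLength(const RxNbl *chain)
{
    unsigned n = 0;
    for (; chain != NULL; chain = chain->Next) {
        n++;
    }
    return n;
}

// Models the bookkeeping in FilterReceiveNetBufferLists.
static RxDecision ClassifyReceive(RxNbl *chain, unsigned count, unsigned receiveFlags)
{
    RxDecision d = { NULL, count, NULL, false };
    RxNbl *next;
    for (RxNbl *nbl = chain; nbl != NULL; nbl = next) {
        next = nbl->Next;
        if (nbl->dropWorthy) {
            nbl->Next = d.drop;
            d.drop = nbl;
            d.keepCount -= 1; // the count passed up must match the keep chain
        } else {
            nbl->Next = d.keep;
            d.keep = nbl;
        }
    }
    // With the RESOURCES flag set, the driver below reclaims every NBL when
    // the callback returns, so the filter must not return the dropped ones.
    d.returnDropped = (d.drop != NULL) && !(receiveFlags & MOCK_RECEIVE_FLAGS_RESOURCES);
    return d;
}
```

The same chain classified twice gives the same keep count either way; only the decision about returning the dropped NBLs flips when the resources flag is set.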

Note that I used a helper function called ReverseNblChain. It's technically legal to reorder packets, but doing so kills performance: TCPIP only reaches its best performance when packets usually arrive in order. The linked-list loop in the sample code has the side effect of reversing the list of NBLs, so we undo the damage with ReverseNblChain. We don't need to reverse the drop chain, since nobody tries to reassemble dropped packets; you can leave them in any order.

```c
NET_BUFFER_LIST *
ReverseNblChain(NET_BUFFER_LIST *nblChain)
{
    NET_BUFFER_LIST *head = NULL;
    NET_BUFFER_LIST *next = NULL;
    NET_BUFFER_LIST *nbl = NULL;

    for (nbl = nblChain; nbl != NULL; nbl = next) {
        next = nbl->Next;
        nbl->Next = head;
        head = nbl;
    }

    return head;
}
```
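If you'd rather skip the second pass, a common alternative (not what the sample above does) is to append each NBL at the tail of its output list, which preserves order in a single pass. It's sketched here against a hypothetical user-mode MockNbl type so it runs without the WDK; in a real filter you'd apply the same pointer-to-tail pattern to the NET_BUFFER_LIST Next field.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

// Hypothetical user-mode stand-in for NET_BUFFER_LIST (not the ndis.h type).
typedef struct MockNbl {
    struct MockNbl *Next;
    bool dropWorthy; // stands in for MyShouldDropPacket(nbl->FirstNetBuffer)
} MockNbl;

// Split `chain` into keep/drop lists in one pass, preserving original order.
static void PartitionInOrder(MockNbl *chain, MockNbl **keepOut, MockNbl **dropOut)
{
    // Each tail pointer addresses the Next field where the following NBL goes.
    MockNbl **keepTail = keepOut;
    MockNbl **dropTail = dropOut;
    MockNbl *next;

    *keepOut = NULL;
    *dropOut = NULL;
    for (MockNbl *nbl = chain; nbl != NULL; nbl = next) {
        next = nbl->Next;
        nbl->Next = NULL; // this NBL becomes the new tail of its list
        if (nbl->dropWorthy) {
            *dropTail = nbl;
            dropTail = &nbl->Next;
        } else {
            *keepTail = nbl;
            keepTail = &nbl->Next;
        }
    }
}
```

Keeping a pointer to each list's last Next field makes every append O(1), so no reversal is needed afterwards; the trade-off is two extra pointers of state versus a second walk over the keep chain.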

Finally, if you're reading this years in the future, I suggest you look for a sample header file from Microsoft named nblutil.h. (We haven't published it yet, but I'm working on it.) It has a very nice routine named ndisClassifyNblChain that does almost all of this work for you. It's designed for high scalability and uses several tricks to get better performance than what you'll find crammed into an already-overlong StackOverflow answer.

Update from the future: https://github.com/microsoft/ndis-driver-library has NdisClassifyNblChain2