This is most likely caused by the original formatting in Excel. Specifically, the Accounting and "Comma Style" number formats do this, because they justify the currency symbol or sign as part of the formatting. In these cases, you'll also notice that values pasted from Excel include a leading and a trailing whitespace character.

Dataprep doesn't spend too much time thinking for you. In this case, it takes the conservative approach of giving you the raw data and letting you decide whether you need to reformat it.

To confirm that Dataprep isn't misbehaving, you only need to open the CSV in a text editor; you'll most likely see those same quoted strings. This is also common when other systems generate CSVs with number formatting applied, which forces the values to be written as quoted strings. Similarly, any text column containing commas will typically be quoted, as required, since the comma is usually the delimiter and has special meaning.
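If you'd rather check programmatically than in a text editor, here is a minimal Python sketch of the same inspection. The sample data is hypothetical (it only imitates what an Accounting-formatted column might look like after export), and the helper name `raw_and_parsed` is made up for illustration.

```python
import csv
import io

# Hypothetical sample imitating an Excel export of an Accounting-formatted column.
raw_text = 'id,amount\n1," 1,234.50 "\n2," 99.00 "\n'

def raw_and_parsed(text):
    """Return (raw_lines, parsed_rows) for a CSV string."""
    raw_lines = text.splitlines()                      # what a text editor shows
    parsed_rows = list(csv.reader(io.StringIO(text)))  # what a CSV parser yields
    return raw_lines, parsed_rows

raw_lines, parsed_rows = raw_and_parsed(raw_text)
print(raw_lines[1])    # the quotes are literally present in the file
print(parsed_rows[1])  # parsing drops the quotes but keeps the padding and the comma
```

Either way, the value arrives as text rather than as a number, so it still needs cleaning before it can be treated as a Decimal.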

Thankfully, this is an easy fix. You'll also have to remove the commas if you want the column to be a Decimal type.

Simple Replacements:

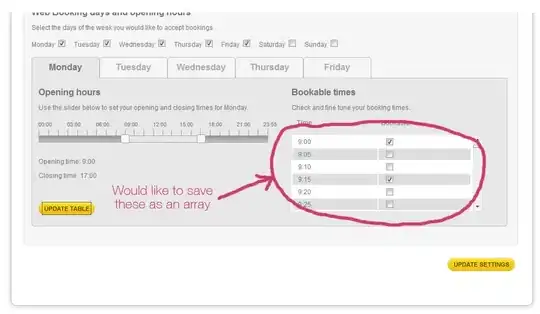

Interface:

- Format > Trim Leading & Trailing Quotes

- Format > Trim Leading & Trailing Whitespace

- Replace > Text or Pattern (replace "," with an empty value; make sure you check the "Match all occurrences" box)

Resulting Wrangle script:

textformat col: col1 type: trimquotes

textformat col: col1 type: trimwhitespace

replacepatterns col: col1 with: '' on: ',' global: true

Regular Expression (1 step replacement):

replacepatterns col: col1 with: '' on: /[^0-9.]/ global: true

In a mixed team with folks who don't know regular expressions, the former is sometimes a bit clearer and less intimidating. Otherwise, it's a lot easier to do it all in a single step.
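To compare the two approaches outside Dataprep, here is a rough Python sketch of the same logic. It is not Wrangle and not how Dataprep implements these transforms; the function names `clean_stepwise` and `clean_regex` and the sample values are assumptions made for illustration.

```python
import re
from decimal import Decimal

def clean_stepwise(value: str) -> str:
    """Mirror the three simple replacements, one at a time."""
    value = value.strip('"')       # trim leading and trailing quotes
    value = value.strip()          # trim leading and trailing whitespace
    return value.replace(",", "")  # remove every comma

def clean_regex(value: str) -> str:
    """Mirror the single-step pattern: drop anything that is not a digit or a dot."""
    return re.sub(r"[^0-9.]", "", value)

sample = '" 1,234.50 "'     # hypothetical positive value
negative = '" -1,234.50 "'  # hypothetical negative value

print(Decimal(clean_stepwise(sample)))
print(Decimal(clean_regex(sample)))
print(clean_stepwise(negative), clean_regex(negative))
```

For positive values both versions give the same result. One thing worth checking against your own data: the pattern `[^0-9.]` removes every character that is not a digit or a decimal point, so a minus sign would be stripped as well, while the step-by-step version leaves it in place.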