We recently crossed 130K documents in one of our collections, and since then our Node.js process has been consuming far more memory. We use the Sails Waterline ORM to query MongoDB. Any call made to the database through the Waterline API (for example, `Model.create`) increases memory usage, and the Node process keeps consuming RAM until it reaches ~1.8 GB, at which point it crashes and restarts. I have been trying to debug this for the past week and could not find a solution. Please help.
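A crash at a fairly fixed size like ~1.8 GB may simply be V8 hitting its heap limit. A rough way to check is to log heap usage against that limit. This is a sketch: `heapReport` and `startHeapMonitor` are hypothetical helper names (not part of Sails or Waterline), while `v8.getHeapStatistics()` and its `used_heap_size` / `heap_size_limit` fields are standard Node.js.

```javascript
const v8 = require("v8");

// Hypothetical helper: report how close the V8 heap is to its limit.
// The default limit depends on the Node.js version and available system
// memory; on many 64-bit setups it is in the 1.4-2 GB range.
function heapReport() {
  const stats = v8.getHeapStatistics();
  const toMB = (bytes) => bytes / 1024 / 1024;
  const usedMB = toMB(stats.used_heap_size);
  const limitMB = toMB(stats.heap_size_limit);
  return {
    usedMB: Number(usedMB.toFixed(1)),
    limitMB: Number(limitMB.toFixed(1)),
    percentOfLimit: Number(((usedMB / limitMB) * 100).toFixed(1)),
  };
}

// Hypothetical helper: log the report periodically. unref() stops this
// timer from keeping the process alive on its own.
function startHeapMonitor(intervalMs = 10000) {
  const timer = setInterval(() => {
    console.log("[heap]", JSON.stringify(heapReport()));
  }, intervalMs);
  timer.unref();
  return timer;
}
```

Calling `startHeapMonitor()` once at startup (for example from the app's `config/bootstrap.js`) would show whether `percentOfLimit` climbs toward 100 before each crash. If it does, raising the limit with `--max-old-space-size` would likely only postpone the crash while memory is still being retained.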

When I delete all of that collection's data, the server shows no unusual memory consumption. Restoring the 130K documents brings the issue back.

For example, I have a user registration endpoint, `/user`, which calls the following models in sequence:

```javascript
let user = await User.create(data);
let model2 = await Model2.create(userdata);
let model3 = await Model3.create(model2Data);
let model4 = await Model4.create(data2);
```
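To narrow down which of these `create` calls retains memory, each one could be wrapped in a small measurement helper. This is a sketch under assumptions: `measureHeapDelta` is a hypothetical name, and the readings are only reliable when Node is started with `--expose-gc` (otherwise `global.gc` is undefined and the numbers include garbage that has not been collected yet).

```javascript
// Hypothetical helper: measure how much heapUsed changes across one async step.
// Forcing a GC before each reading (only possible with --expose-gc) makes the
// delta reflect retained memory rather than short-lived garbage.
async function measureHeapDelta(label, step) {
  if (typeof global.gc === "function") global.gc();
  const before = process.memoryUsage().heapUsed;

  // step() may return a promise or any thenable; await handles both.
  const result = await step();

  if (typeof global.gc === "function") global.gc();
  const after = process.memoryUsage().heapUsed;

  const deltaMB = (after - before) / 1024 / 1024;
  console.log(`${label}: heapUsed changed by ${deltaMB.toFixed(2)} MB`);
  return { result, deltaBytes: after - before };
}
```

Inside the action, a call such as `const { result: user } = await measureHeapDelta("User.create", () => User.create(data));` would log the growth for that single step; repeating this for each model shows whether one call, or all of them, pulls in extra data.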

Note that none of these models hold much data. The 130K documents belong to a different model.

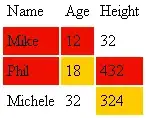

I took heap dumps of the Node process before and after the memory growth and examined them in Chrome DevTools. They show a lot of database data loaded into memory (underlined in the image). That data belongs to a different model/collection called `estimates`, but our `/user` endpoint never calls or interacts with that model. So I suspect Waterline, or something else, is loading it.
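Heap dumps like these can also be written from inside the process, which makes it easier to capture a "before" and "after" pair at known points (for example, right after startup and after a batch of `/user` requests). A minimal sketch using the built-in `v8.writeHeapSnapshot()` (Node.js 11.13+); `takeHeapSnapshot` and the file naming are hypothetical choices.

```javascript
const v8 = require("v8");
const os = require("os");
const path = require("path");

// Hypothetical helper: write a .heapsnapshot file that Chrome DevTools can load
// (Memory tab > Load). writeHeapSnapshot() is synchronous and blocks the event
// loop while it runs; it can also need up to about twice the current heap size
// in memory, so take snapshots well before the process nears its limit.
function takeHeapSnapshot(tag) {
  const file = path.join(
    os.tmpdir(),
    `${tag}-${process.pid}-${Date.now()}.heapsnapshot`
  );
  return v8.writeHeapSnapshot(file); // returns the path that was written
}
```

Loading both files into DevTools and switching the second one to the "Comparison" view lists the objects allocated in between; selecting one of the `estimates` records and inspecting its "Retainers" should show which object chain (Waterline, a cache, a hook, etc.) is keeping it alive.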