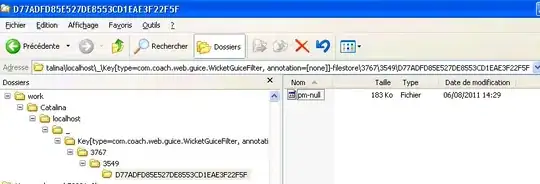

I'm looking to convert JSON that contains an array to CSV format. The number of elements in the array varies from record to record. I tried the following flow (the flow file XML is attached to this post):

GetFile --> ConvertRecord --> UpdateAttribute --> PutFile

Are there any other alternatives?

JSON format:

    {
      "LogData": {
        "Location": "APAC",
        "product": "w1"
      },
      "Outcome": [
        {
          "limit": "0",
          "pri": "3",
          "result": "pass"
        },
        {
          "limit": "1",
          "pri": "2",
          "result": "pass"
        },
        {
          "limit": "5",
          "priority": "1",
          "result": "fail"
        }
      ],
      "attr": {
        "vers": "1",
        "datetime": "2018-01-10 00:36:00"
      }
    }

Expected output in CSV:

    location,product,limit,pri,result,vers,datetime
    APAC,w1,0,3,pass,1,2018-01-10 00:36:00
    APAC,w1,1,2,pass,1,2018-01-10 00:36:00
    APAC,w1,5,1,fail,1,2018-01-10 00:36:00
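To make the target mapping concrete, here is a minimal Python sketch of the flattening, run outside NiFi. It is not part of the attached flow. It writes one CSV row per element of `Outcome` and repeats the `LogData` and `attr` fields on every row. The function name `flatten_to_csv` is hypothetical. The sketch also assumes that `priority` in the third element is an alias of `pri`, because the expected output shows `1` in the `pri` column for that row.

```python
import csv
import io
import json


def flatten_to_csv(doc: dict) -> str:
    """Return CSV text with one row per element of doc["Outcome"].

    The LogData and attr fields are repeated on every row.
    """
    log_data = doc.get("LogData", {})
    attr = doc.get("attr", {})

    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(
        ["location", "product", "limit", "pri", "result", "vers", "datetime"]
    )

    for outcome in doc.get("Outcome", []):
        # Assumption: "priority" is treated as an alias of "pri",
        # since the sample's third element uses that key.
        pri = outcome.get("pri", outcome.get("priority", ""))
        writer.writerow([
            log_data.get("Location", ""),
            log_data.get("product", ""),
            outcome.get("limit", ""),
            pri,
            outcome.get("result", ""),
            attr.get("vers", ""),
            attr.get("datetime", ""),
        ])
    return buf.getvalue()


# The sample document from this post.
sample = json.loads("""
{
  "LogData": {"Location": "APAC", "product": "w1"},
  "Outcome": [
    {"limit": "0", "pri": "3", "result": "pass"},
    {"limit": "1", "pri": "2", "result": "pass"},
    {"limit": "5", "priority": "1", "result": "fail"}
  ],
  "attr": {"vers": "1", "datetime": "2018-01-10 00:36:00"}
}
""")

print(flatten_to_csv(sample))
```

Because the loop runs over whatever `Outcome` contains, the number of array elements can differ from record to record without any change to the code.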

Output from the attached flow:

    LogData,Outcome,attr
    "MapRecord[{product=w1, Location=APAC}]","[MapRecord[{limit=0, result=pass, pri=3}], MapRecord[{limit=1, result=pass, pri=2}], MapRecord[{limit=5, result=fail}]]","MapRecord[{datetime=2018-01-10 00:36:00, vers=1}]"

For ConvertRecord, I am using the JsonTreeReader and CSVRecordSetWriter controller services, configured as below:

JsonTreeReader controller service config:

CSVRecordSetWriter controller service config:

AvroSchemaRegistry controller service config:

Avro schema:

    {
      "name": "myschema",
      "type": "record",
      "namespace": "myschema",
      "fields": [
        {
          "name": "LogData",
          "type": {
            "name": "LogData",
            "type": "record",
            "fields": [
              { "name": "Location", "type": "string" },
              { "name": "product", "type": "string" }
            ]
          }
        },
        {
          "name": "Outcome",
          "type": {
            "type": "array",
            "items": {
              "name": "Outcome_record",
              "type": "record",
              "fields": [
                { "name": "limit", "type": "string" },
                { "name": "pri", "type": ["string", "null"] },
                { "name": "result", "type": "string" }
              ]
            }
          }
        },
        {
          "name": "attr",
          "type": {
            "name": "attr",
            "type": "record",
            "fields": [
              { "name": "vers", "type": "string" },
              { "name": "datetime", "type": "string" }
            ]
          }
        }
      ]
    }