Use a regex & spacy's merge/retokenize method to merge the content in parentheses as a single token.

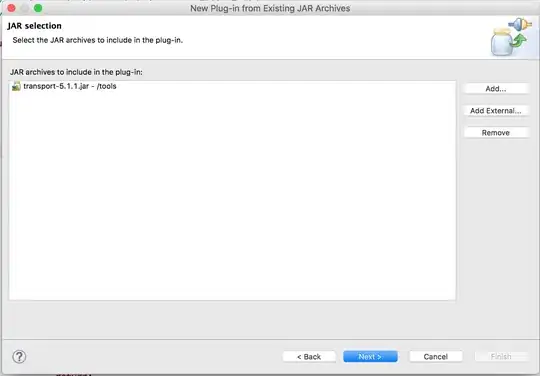

>>> import spacy

>>> import re

>>> my_str = "There are three things in air, Nitrogen (79%), oxygen (20%), and other types of gases (1%)."

>>> nlp = spacy.load('en')

>>> parsed = nlp(my_str)

>>> [(x.text,x.pos_) for x in parsed]

[('There', 'ADV'), ('are', 'VERB'), ('three', 'NUM'), ('things', 'NOUN'), ('in', 'ADP'), ('air', 'NOUN'), (',', 'PUNCT'), ('Nitrogen', 'PROPN'), ('(', 'PUNCT'), ('79', 'NUM'), ('%', 'NOUN'), (')', 'PUNCT'), (',', 'PUNCT'), ('oxygen', 'NOUN'), ('(', 'PUNCT'), ('20', 'NUM'), ('%', 'NOUN'), (')', 'PUNCT'), (',', 'PUNCT'), ('and', 'CCONJ'), ('other', 'ADJ'), ('types', 'NOUN'), ('of', 'ADP'), ('gases', 'NOUN'), ('(', 'PUNCT'), ('1', 'NUM'), ('%', 'NOUN'), (')', 'PUNCT'), ('.', 'PUNCT')]

>>> indexes = [m.span() for m in re.finditer('\([\w%]{0,5}\)',my_str,flags=re.IGNORECASE)]

>>> indexes

[(40, 45), (54, 59), (86, 90)]

>>> for start,end in indexes:

... parsed.merge(start_idx=start,end_idx=end)

...

(79%)

(20%)

(1%)

>>> [(x.text,x.pos_) for x in parsed]

[('There', 'ADV'), ('are', 'VERB'), ('three', 'NUM'), ('things', 'NOUN'), ('in', 'ADP'), ('air', 'NOUN'), (',', 'PUNCT'), ('Nitrogen', 'PROPN'), ('(79%)', 'PUNCT'), (',', 'PUNCT'), ('oxygen', 'NOUN'), ('(20%)', 'PUNCT'), (',', 'PUNCT'), ('and', 'CCONJ'), ('other', 'ADJ'), ('types', 'NOUN'), ('of', 'ADP'), ('gases', 'NOUN'), ('(1%)', 'PUNCT'), ('.', 'PUNCT')]