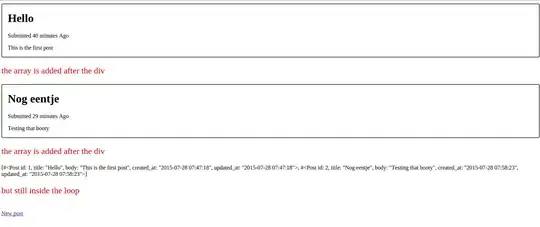

I have some videos that serve as ground truth for people detection; the image above is an example.

I also have the original video (without any detections drawn on it). I have to run my people-detection algorithm on it and compare my results with the ground-truth video.

The problem is that I would like not only a qualitative comparison but also a quantitative one. Since I can already count the detections produced by my own algorithm, I need a reliable way to count the bounding boxes that appear in each frame of the ground-truth video.
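Once both per-frame counts exist (one list from the detector, one from the ground-truth video), a simple quantitative comparison can be computed from the per-frame differences. The sketch below is only an illustration: `compare_counts` and the three metric names are hypothetical choices, not an established evaluation protocol, and it assumes both lists are aligned frame by frame.

```python
from typing import Dict, Sequence


def compare_counts(predicted: Sequence[int], ground_truth: Sequence[int]) -> Dict[str, float]:
    """Summarise per-frame count differences between a detector and the ground truth.

    Hypothetical helper: assumes predicted[i] and ground_truth[i] refer to the same frame.
    """
    if len(predicted) != len(ground_truth):
        raise ValueError("both sequences must cover the same frames")
    if not predicted:
        raise ValueError("no frames to compare")
    diffs = [p - g for p, g in zip(predicted, ground_truth)]
    n = len(diffs)
    return {
        # average number of boxes the detector is off by, per frame
        "mae": sum(abs(d) for d in diffs) / n,
        # fraction of frames where the two counts agree exactly
        "exact_match_rate": sum(1 for d in diffs if d == 0) / n,
        # > 0: the detector tends to over-count; < 0: it tends to under-count
        "mean_signed_error": sum(diffs) / n,
    }
```

For example, `compare_counts([2, 3, 1, 0], [2, 2, 1, 1])` gives an MAE of 0.5, an exact-match rate of 0.5 and a mean signed error of 0.0. Note that equal counts say nothing about whether the boxes are in the right place; that would need box-level matching (for example by IoU).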

I have looked at this link and this one as well, but they are meant to find the contours of a generic shape, not a bounding box. I know it may sound nonsensical to detect detections, but this is the only way I have to get a numerical ground truth.
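One possible approach, assuming the ground-truth boxes are drawn as hollow rectangles in a single known colour that does not appear elsewhere in the frame, is to threshold that colour, group the matching pixels into connected components, and keep only components that trace the outline of their own bounding rectangle. The sketch below does this in pure Python on a frame given as `frame[y][x] -> (R, G, B)`; `box_color`, `tol`, `min_side`, `min_border_fill` and all function names are hypothetical. Boxes that overlap or touch each other would merge into one component and be under-counted, and labels drawn in the box colour near a box's centre could make it fail the hollowness check.

```python
from collections import deque
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int, int]
Frame = Sequence[Sequence[Pixel]]  # frame[y][x] -> (R, G, B)
Point = Tuple[int, int]            # (y, x)


def box_mask(frame: Frame, box_color: Pixel, tol: int = 40) -> List[List[bool]]:
    """True where a pixel is within `tol` of the (assumed) box colour on every channel."""
    return [[all(abs(c - b) <= tol for c, b in zip(px, box_color)) for px in row]
            for row in frame]


def connected_components(mask: List[List[bool]]) -> List[List[Point]]:
    """Group True pixels into 8-connected components using a BFS flood fill."""
    h = len(mask)
    w = len(mask[0]) if h else 0
    seen = [[False] * w for _ in range(h)]
    comps: List[List[Point]] = []
    for y in range(h):
        for x in range(w):
            if not mask[y][x] or seen[y][x]:
                continue
            seen[y][x] = True
            queue = deque([(y, x)])
            comp: List[Point] = []
            while queue:
                cy, cx = queue.popleft()
                comp.append((cy, cx))
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        ny, nx = cy + dy, cx + dx
                        if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not seen[ny][nx]:
                            seen[ny][nx] = True
                            queue.append((ny, nx))
            comps.append(comp)
    return comps


def looks_like_box(comp: List[Point], min_side: int = 5, min_border_fill: float = 0.9) -> bool:
    """Heuristic: the component covers the border of its bounding rectangle and is hollow."""
    ys = [p[0] for p in comp]
    xs = [p[1] for p in comp]
    top, bottom, left, right = min(ys), max(ys), min(xs), max(xs)
    if bottom - top + 1 < min_side or right - left + 1 < min_side:
        return False  # too small: probably noise, not a box
    pts = set(comp)
    border = ([(top, x) for x in range(left, right + 1)]
              + [(bottom, x) for x in range(left, right + 1)]
              + [(y, left) for y in range(top + 1, bottom)]
              + [(y, right) for y in range(top + 1, bottom)])
    filled = sum(1 for p in border if p in pts) / len(border)
    centre = ((top + bottom) // 2, (left + right) // 2)
    # a drawn box outline leaves its interior (here: the centre pixel) empty
    return filled >= min_border_fill and centre not in pts


def count_boxes(frame: Frame, box_color: Pixel, tol: int = 40) -> int:
    """Count box-shaped components of the assumed box colour in one frame."""
    return sum(1 for comp in connected_components(box_mask(frame, box_color, tol))
               if looks_like_box(comp))
```

The tolerance is there because video compression usually smears the drawn colour slightly. With a real video, frames would typically be read with OpenCV's `cv2.VideoCapture` as BGR NumPy arrays (so `box_color` would need to be given in BGR order), and the pure-Python loops would be far too slow; `cv2.inRange` followed by `cv2.connectedComponentsWithStats` is the practical equivalent of the mask and component steps.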