I am trying to get Graphviz to display my one-hot encoded categorical data, but I can't get it to work.

Here is my X data, with these columns:

Category, Size, Type, Rating, Genre, Number of versions

    ['ART_AND_DESIGN' '6000000+' 'Free' 'Everyone' 'Art & Design' '7']
    ['ART_AND_DESIGN' '6000000+' 'Free' 'Everyone' 'Art & Design' '2']
    ...
    ['FAMILY' '20000000+' 'Free' 'Everyone' 'Art & Design' '13']

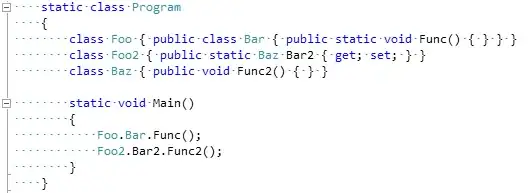

And my code sample:

    import graphviz
    from sklearn import tree
    from sklearn.model_selection import train_test_split
    from sklearn.preprocessing import OneHotEncoder
    from sklearn.tree import DecisionTreeClassifier

    # Split the DataFrame into features and target
    X = self.df.drop(['Installs'], axis=1).values
    y = self.df['Installs'].values

    # One-hot encode every feature column
    self.oheFeatures = OneHotEncoder(categorical_features='all')
    EncodedX = self.oheFeatures.fit_transform(X).toarray()

    # One-hot encode the target
    self.oheY = OneHotEncoder()
    EncodedY = self.oheY.fit_transform(y.reshape(-1,1)).toarray()

    self.X_train, self.X_test, self.y_train, self.y_test = train_test_split(EncodedX, EncodedY, test_size=0.25, random_state=33)

    clf = DecisionTreeClassifier(criterion='entropy', min_samples_leaf=100)
    clf.fit(self.X_train, self.y_train)

    # Export with feature and class names
    tree.export_graphviz(clf, out_file=None,
                         feature_names=self.oheFeatures.get_feature_names(),
                         class_names=self.oheY.get_feature_names(),
                         filled=True,
                         rounded=True,
                         special_characters=True)

    # Export again without names and render that output
    dot_data = tree.export_graphviz(clf, out_file=None)
    graph = graphviz.Source(dot_data)
    graph.render("applications")

But when I visualize the result, I get the decision tree of the encoded data:

Is there any way to have graphviz display the "decoded" data instead?