I have been optimizing a ray tracer, and to get a nice speedup I used OpenMP roughly as follows (C++):

    Accelerator accelerator; // Has the data to make tracing way faster
    Rays rays; // Makes the rays so they're ready to go

    #pragma omp parallel for
    for (int y = 0; y < window->height; y++) {
        for (int x = 0; x < window->width; x++) {
            Ray& ray = rays.get(x, y);
            accelerator.trace(ray);
        }
    }

I gained 4.85x performance on a 6 core/12 thread CPU. I thought I'd get more than that, maybe something like 6-8x, especially since this loop accounts for >= 99% of the application's processing time.
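To put those numbers in perspective, here is a rough sketch of the arithmetic using Amdahl's law. This is my own framing, not something VTune reported. It assumes the parallel fraction is exactly 0.99 (I only know it is at least that) and ignores memory bandwidth and the fact that hyperthreads are not full cores. The function names are made up for illustration.

```cpp
#include <cassert>
#include <cmath>

// Amdahl's law: upper bound on speedup when a fraction of the work
// (parallel_fraction) is spread perfectly across `workers` workers.
double amdahl_speedup(double parallel_fraction, int workers) {
    return 1.0 / ((1.0 - parallel_fraction) + parallel_fraction / workers);
}

// Parallel efficiency: measured speedup divided by the worker count.
double parallel_efficiency(double speedup, int workers) {
    return speedup / workers;
}
```

Under those assumptions, `amdahl_speedup(0.99, 6)` is about 5.71 and `amdahl_speedup(0.99, 12)` is about 10.81, while `parallel_efficiency(4.85, 6)` is about 0.81. So my 4.85x is below even the 6-worker bound, which is part of why I suspect something is off.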

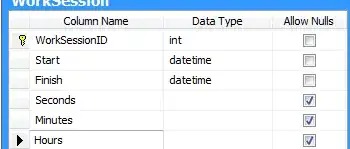

I want to find out where my performance bottleneck is, so I opened VTune and profiled. Note that I am new to profiling, so maybe this is normal but this is the graph I got:

In particular, this is the 2nd biggest time consumer:

where the 58% is the microarchitecture usage.

Trying to solve this on my own, I went looking for information, but the most I could find was on Intel's VTune wiki pages:

> **Average Physical Core Utilization**
>
> Metric Description
>
> The metric shows average physical cores utilization by computations of the application. Spin and Overhead time are not counted. Ideal average CPU utilization is equal to the number of physical CPU cores.

I'm not sure what this is trying to tell me, which leads me to my question:

Is a result like this normal? Or is something going wrong somewhere? Is it okay to see only a 4.8x speedup (compared to a theoretical max of 12.0) for something that is embarrassingly parallel? While ray tracing itself can be cache-unfriendly because the rays bounce everywhere, I have done what I can to compact the memory and be as cache friendly as possible, used libraries that utilize SIMD for the calculations, tried countless implementations from the literature to speed things up, avoided branching as much as possible, and used no recursion. I also parallelized the rays so that there is no false sharing, AFAIK: each row is handled by one thread, so no two threads should be writing to the same cache line (especially since ray traversal is all const). The framebuffer is also row major, so I was hoping false sharing wouldn't be an issue there either.
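For what it's worth, this is the kind of check I have in mind for the framebuffer rows. It is only a sketch: it assumes a 64-byte cache line (typical for x86, but not something I verified on my CPU), a tightly packed row-major buffer whose base address is cache-line aligned, and the helper name is hypothetical.

```cpp
#include <cassert>
#include <cstddef>

// Assumption: 64-byte cache lines, which is typical for x86 CPUs.
constexpr std::size_t kCacheLineBytes = 64;

// For a tightly packed, row-major framebuffer whose base address is
// cache-line aligned, returns true if some row boundary falls in the
// middle of a cache line, meaning the end of one row and the start of
// the next can live in the same line.
bool rows_can_share_cache_line(std::size_t width, std::size_t bytes_per_pixel) {
    return (width * bytes_per_pixel) % kCacheLineBytes != 0;
}
```

For example, a 1920-pixel-wide buffer with 4 bytes per pixel has 7680-byte rows, an exact multiple of 64, so under these assumptions rows never share a line. A 1000-pixel-wide buffer with 3 bytes per pixel has 3000-byte rows, so neighbouring rows can share one. Even then, only rows owned by different threads would matter.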

I do not know whether the profiler is simply picking up the main loop that is threaded with OpenMP, in which case this is an expected result, or whether I have made some kind of newbie mistake and am not getting the throughput I should. I also checked that OpenMP spawns 12 threads, and it does.
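The thread count check was along these lines. This is a minimal sketch rather than my exact code, and `observed_thread_count` is a name made up for this post. It falls back to 1 if the file is compiled without OpenMP enabled (for example, without `-fopenmp` on GCC or Clang).

```cpp
#include <cassert>
#ifdef _OPENMP
#include <omp.h>
#endif

// Returns how many threads OpenMP actually uses for a parallel region,
// or 1 when the program was built without OpenMP support.
int observed_thread_count() {
    int count = 1;
#ifdef _OPENMP
    #pragma omp parallel
    {
        // Only one thread writes the shared variable; the implicit
        // barrier at the end of `single` makes the value visible.
        #pragma omp single
        count = omp_get_num_threads();
    }
#endif
    return count;
}
```

On my machine this reports 12 when built with OpenMP enabled, which matches the number of logical cores.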

I guess tl;dr, am I screwing up using OpenMP? From what I gathered, the average physical core utilization is supposed to be up near the average logical core utilization, but I almost certainly have no idea what I'm talking about.