I'm having trouble understanding why, in the online version of the λ-return algorithm, you need to revisit every time step of the episode each time the horizon advances. The algorithm is from the book:

Reinforcement Learning: An Introduction, 2nd Edition, Chapter 12, Sutton & Barto

Here, every sequence of weight vectors $\mathbf{w}^h_1, \mathbf{w}^h_2, \ldots, \mathbf{w}^h_h$ for a horizon $h$ starts from $\mathbf{w}_0$ (the weights from the end of the previous episode). However, these sequences do not seem to depend on the returns or weights from the previous horizon, so they could be computed independently. It looks to me as if the algorithm is presented this way only for clarity, and that you could compute just the final horizon, $h = T$, when the episode terminates. That would be the same as the off-line version of the algorithm, and the actual update rule is:

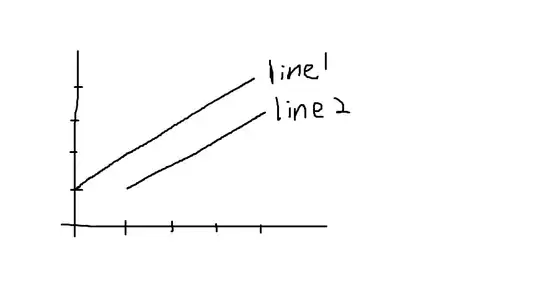
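For reference, the book's truncated λ-return, $G^\lambda_{t:h} = (1-\lambda)\sum_{n=1}^{h-t-1}\lambda^{n-1}G_{t:t+n} + \lambda^{h-t-1}G_{t:h}$, can be sketched as follows. This is a minimal sketch: the helper names `n_step_returns` and `truncated_lambda_return` are hypothetical, and the caller passes in the bootstrap values $\hat v(S_{t+n}, \cdot)$ directly (0 for a terminal state), so the helper itself does not fix which weight vector produced them. In the book's definition of the n-step return, $G_{t:t+n}$ bootstraps from $\hat v(S_{t+n}, \mathbf{w}_{t+n-1})$.

```python
def n_step_returns(rewards, boot, gamma):
    """Return [G_{t:t+1}, ..., G_{t:t+N}] for N = len(rewards).

    rewards[k] is R_{t+k+1}; boot[k] is the bootstrap value v_hat(S_{t+k+1}),
    which must be 0.0 if S_{t+k+1} is terminal.
    """
    if not rewards or len(rewards) != len(boot):
        raise ValueError("rewards and boot must be non-empty and equally long")
    returns = []
    discounted_sum = 0.0
    for n, (r, v) in enumerate(zip(rewards, boot), start=1):
        discounted_sum += gamma ** (n - 1) * r          # sum of discounted rewards
        returns.append(discounted_sum + gamma ** n * v)  # plus discounted bootstrap
    return returns


def truncated_lambda_return(rewards, boot, lam, gamma=1.0):
    """Truncated lambda-return G^lambda_{t:h}, with h - t = len(rewards)."""
    g = n_step_returns(rewards, boot, gamma)
    big_n = len(g)
    # (1 - lambda) * sum_{n=1}^{N-1} lambda^{n-1} * G_{t:t+n}
    weighted = sum((1 - lam) * lam ** (n - 1) * g[n - 1] for n in range(1, big_n))
    # + lambda^{N-1} * G_{t:h}, the longest return available at horizon h
    return weighted + lam ** (big_n - 1) * g[-1]
```

With λ = 0 this reduces to the one-step return $G_{t:t+1}$, and with λ = 1 to the longest available return $G_{t:h}$.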

Not surprisingly, I get exactly the same results for the two algorithms on the 19-state random walk example.

The book states that the online version should perform slightly better and, in this case, should produce the same results as True Online TD(λ). When I implement True Online TD(λ), it does outperform the off-line version, but I can't figure out how to get that behaviour from the simple, slow online version.
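For comparison, here is a minimal sketch of True Online TD(λ) with linear function approximation, following the book's pseudocode (dutch trace, feature vector of the terminal state equal to zero), applied to the 19-state random walk with tabular one-hot features. The random-walk setup (start in the middle state, reward −1 on the left exit and +1 on the right, γ = 1), the function names, and the default parameter values are assumptions chosen for illustration; this is not the code behind the results described above.

```python
import math
import random


def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def true_online_td_lambda_episode(w, features, rewards, alpha, lam, gamma=1.0):
    """Run True Online TD(lambda) over one episode, updating w in place.

    features[k] is the feature vector of S_k (the last one must be all zeros,
    i.e. the terminal state); rewards[k] is R_{k+1}.
    """
    if len(features) != len(rewards) + 1:
        raise ValueError("need exactly one more feature vector than rewards")
    z = [0.0] * len(w)  # dutch eligibility trace
    v_old = 0.0
    x = features[0]
    for r, x_next in zip(rewards, features[1:]):
        v = dot(w, x)
        v_next = dot(w, x_next)
        delta = r + gamma * v_next - v
        zx = dot(z, x)  # z^T x, computed with the trace before this step's update
        z = [gamma * lam * zi + (1.0 - alpha * gamma * lam * zx) * xi
             for zi, xi in zip(z, x)]
        w[:] = [wi + alpha * (delta + v - v_old) * zi - alpha * (v - v_old) * xi
                for wi, zi, xi in zip(w, z, x)]
        v_old = v_next
        x = x_next
    return w


N_STATES = 19  # non-terminal states 1..19; states 0 and 20 are terminal


def one_hot(s):
    """Tabular features; terminal states map to the zero vector."""
    x = [0.0] * N_STATES
    if 1 <= s <= N_STATES:
        x[s - 1] = 1.0
    return x


def random_walk_episode(rng):
    """Start in state 10; reward -1 on the left exit, +1 on the right exit."""
    s = (N_STATES + 1) // 2
    states, rewards = [s], []
    while 1 <= s <= N_STATES:
        s += rng.choice((-1, 1))
        rewards.append(-1.0 if s == 0 else 1.0 if s == N_STATES + 1 else 0.0)
        states.append(s)
    return [one_hot(st) for st in states], rewards


def rms_error(w):
    """RMS error against the true values v(i) = i/10 - 1 for i = 1..19."""
    true_v = [i / 10.0 - 1.0 for i in range(1, N_STATES + 1)]
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(w, true_v)) / N_STATES)


def run(episodes=10, alpha=0.1, lam=0.8, seed=0):
    rng = random.Random(seed)
    w = [0.0] * N_STATES
    for _ in range(episodes):
        features, rewards = random_walk_episode(rng)
        true_online_td_lambda_episode(w, features, rewards, alpha, lam)
    return w, rms_error(w)
```

For example, `w, err = run(episodes=10, alpha=0.1, lam=0.8)` runs ten episodes and returns the learned values together with their RMS error. With `lam=0.0` the trace collapses to the current feature vector and the update reduces to plain TD(0), which makes a quick sanity check.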

Any suggestions will be appreciated.

Thank you