I have trained a binary classifier, but I think that my ROC curve is incorrect.

This is the vector that contains labels:

y_true = [0, 1, 1, 1, 0, 1, 0, 1, 0]

and this is the vector of predicted scores:

y_score = [0.43031937, 0.09115553, 0.00650781, 0.02242869, 0.38608587, 0.09407699, 0.40521139, 0.08062053, 0.37445426]

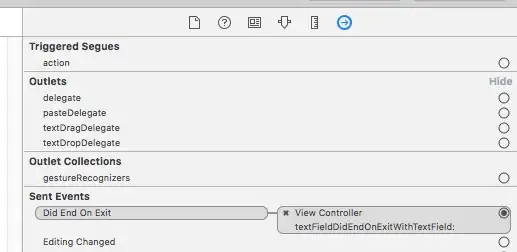

When I plot my ROC curve, I get the following:

I think the code is correct, but I don't understand why I get this curve or why the fpr, tpr, and threshold arrays each have length 4. Why is my AUC equal to zero?

fpr [0. 0.25 1. 1. ]

tpr [0. 0. 0. 1.]

threshold [1.43031937 0.43031937 0.37445426 0.00650781]
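As a sanity check on the AUC value, here is a minimal sketch that computes AUC directly from its pairwise definition: the fraction of (positive, negative) pairs in which the positive example receives the higher score, with ties counted as half. The helper name `pairwise_auc` is hypothetical and not part of scikit-learn, and the "flipped" scores `1 - s` are only an assumption about what the scores might represent.

```python
def pairwise_auc(y_true, y_score):
    """AUC as the fraction of (positive, negative) pairs ranked correctly."""
    pos = [s for y, s in zip(y_true, y_score) if y == 1]
    neg = [s for y, s in zip(y_true, y_score) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0   # positive ranked above negative
            elif p == n:
                wins += 0.5   # ties count as half
    return wins / (len(pos) * len(neg))


y_true = [0, 1, 1, 1, 0, 1, 0, 1, 0]
y_score = [0.43031937, 0.09115553, 0.00650781, 0.02242869, 0.38608587,
           0.09407699, 0.40521139, 0.08062053, 0.37445426]

auc_as_given = pairwise_auc(y_true, y_score)
# Assumption: the scores might describe class 0, so try flipping them.
auc_flipped = pairwise_auc(y_true, [1 - s for s in y_score])
print(auc_as_given, auc_flipped)
```

In this data every class-0 score is higher than every class-1 score, so no pair is ranked correctly with the scores as given. That would be consistent with the scores being probabilities for class 0 rather than class 1, though that is only a guess.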

My Code:

import sklearn.metrics as metrics

fpr, tpr, threshold = metrics.roc_curve(y_true, y_score)

roc_auc = metrics.auc(fpr, tpr)

# Plot the ROC curve with matplotlib

import matplotlib.pyplot as plt

plt.title('Receiver Operating Characteristic')

plt.plot(fpr, tpr, 'b', label = 'AUC = %0.2f' % roc_auc)

plt.legend(loc = 'lower right')

plt.plot([0, 1], [0, 1],'r--')

plt.xlim([0, 1])

plt.ylim([0, 1])

plt.ylabel('True Positive Rate')

plt.xlabel('False Positive Rate')

plt.show()
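On the length of the returned arrays: `roc_curve` takes a `drop_intermediate` parameter, which defaults to `True` and drops some suboptimal thresholds that would not change the plotted curve. The sketch below, which assumes scikit-learn is installed, turns it off to get one point per distinct score plus the starting point. The variable names `fpr_full`, `tpr_full`, and `thr_full` are illustrative.

```python
from sklearn import metrics

y_true = [0, 1, 1, 1, 0, 1, 0, 1, 0]
y_score = [0.43031937, 0.09115553, 0.00650781, 0.02242869, 0.38608587,
           0.09407699, 0.40521139, 0.08062053, 0.37445426]

# Keep every threshold instead of only the corner points of the curve.
fpr_full, tpr_full, thr_full = metrics.roc_curve(
    y_true, y_score, drop_intermediate=False
)
n_points = len(thr_full)
auc_full = metrics.auc(fpr_full, tpr_full)

print(n_points)   # 9 distinct scores + 1 starting point
print(auc_full)   # same area as with the default setting
```

The area should not depend on `drop_intermediate`, since the dropped points lie on straight segments of the curve. The shorter arrays are a side effect of that option rather than a sign that samples were lost.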