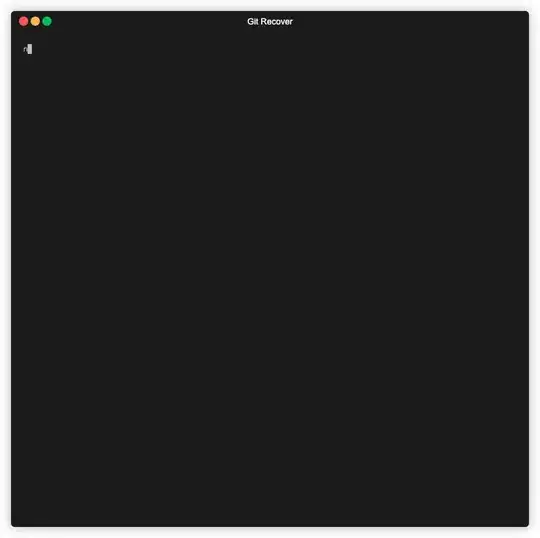

I was able to get a better contour of my object by switching from color recognition and analysis over the RGB image to edge detection on the depth information from my camera.

Here are the general steps I took to find a better edge map.

1. Save the depth information in an N×M×1 matrix, where N and M are the image resolution. For a 480×640 image, I had a matrix of shape (480, 640, 1), where each pixel (i, j) stores the depth value for that pixel coordinate.

2. Use a 2D Gaussian kernel to smooth the depth matrix and fill in any missing data, using astropy's `convolve` function.

3. Compute the gradient of the depth matrix and the gradient magnitude at each pixel.

4. Filter out regions of uniform depth. Uniform depth implies a flat surface, so I fitted a Gaussian distribution (mean and standard deviation) to the gradient magnitudes and set to zero those that fell within X standard deviations of the mean. This removed some additional noise from the image.

5. Normalize the values of the magnitude matrix to the range 0 to 1 so that it can be treated as a single-channel image. My depth matrix had the form (480, 640, 1), and so did the corresponding gradient-magnitude matrix, so I scaled the values in (:, :, 1) to go from 0 to 1. This way I could represent it as a grayscale or binary image later on.
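
The gradient, filtering, and normalization steps above can be sketched with NumPy alone. This is a minimal illustration on a synthetic depth map, not the exact code used for the images here. The function name `edge_map_from_depth` and the parameter `n_sigma` are made up for the example, and it assumes the depth data is already free of NaNs and that a trailing channel axis of size 1 can be squeezed away so the gradient is taken over a 2D array.

```python
import numpy as np

def edge_map_from_depth(depth, n_sigma=1.5):
    """Return (normalized, binary) edge maps from a NaN-free depth array.

    Hypothetical helper for illustration only.
    """
    # Drop a trailing size-1 channel axis: (H, W, 1) -> (H, W)
    depth = np.squeeze(np.asarray(depth, dtype=float))

    # np.gradient returns one array per axis: d/dy (rows) and d/dx (columns)
    gy, gx = np.gradient(depth)
    mag = np.hypot(gx, gy)

    # Zero out magnitudes within n_sigma standard deviations of the mean,
    # which removes flat (near-uniform depth) regions
    mean, sigma = mag.mean(), mag.std()
    mag[np.abs(mag - mean) < n_sigma * sigma] = 0

    # Scale to [0, 1]; guard against an image with no edges at all
    peak = mag.max()
    normalized = mag / peak if peak > 0 else mag

    # Binary version: every surviving pixel becomes 1
    binary = (normalized > 0).astype(np.uint8)
    return normalized, binary

# Synthetic scene: flat background at 2.0 with a box at 1.0 in front of it
depth = np.full((48, 64, 1), 2.0)
depth[16:32, 20:44, 0] = 1.0
normalized, binary = edge_map_from_depth(depth)
```

On this synthetic scene the nonzero pixels of `binary` trace the outline of the box, while the flat background and the flat box interior are zero.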

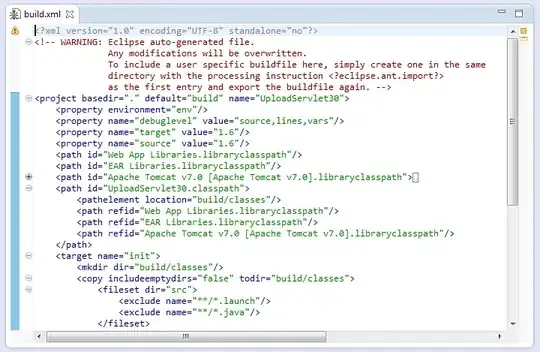

    import numpy as np
    import matplotlib.pyplot as plt
    from astropy.convolution import Gaussian2DKernel, convolve

    # The functions below are methods of a class (note the `self` argument).

    def gradient_demo(self, Depth_Mat):
        """
        Compute and display the gradient magnitude for the entire image.
        """
        shape = Depth_Mat.shape
        bounds = ((0, shape[0]), (0, shape[1]))

        smooth_depth = self.convolve_smooth_Depth(Depth_Mat, bounds)
        gradient, magnitudes = self.depth_gradient(smooth_depth, bounds)
        magnitudes_prime = magnitudes.flatten()
        # hist, bin = np.histogram(magnitudes_prime, 50)  # histogram of entire image

        mean = np.mean(magnitudes_prime)
        variance = np.var(magnitudes_prime)
        sigma = np.sqrt(variance)

        # magnitudes_filtered = magnitudes[(magnitudes > mean - 2 * sigma) & (magnitudes < mean + 2 * sigma)]
        # Zero out magnitudes within 1.5 standard deviations of the mean (near-uniform depth)
        magnitudes[(magnitudes > mean - 1.5 * sigma) & (magnitudes < mean + 1.5 * sigma)] = 0

        # Scale by the maximum, then binarize: every remaining nonzero pixel becomes 1
        magnitudes = 255 * magnitudes / np.max(magnitudes)
        magnitudes[magnitudes != 0] = 1

        plt.title('magnitude of gradients')
        plt.imshow(magnitudes, vmin=np.nanmin(magnitudes), vmax=np.amax(magnitudes), cmap='gray')
        plt.show()

        return magnitudes.astype(np.uint8)

    def convolve_smooth_Depth(self, raw_depth_mtx, bounds):
        """
        Smooth a subimage and fill in any np.nan values with averaged depth values.

        :param raw_depth_mtx: raw depth values, possibly containing np.nan
        :param bounds: ((ylow, yhigh), (xlow, xhigh)) -> (y, x)
        :return: Smoothed depth values for the given rectangle
        """
        ylow, yhigh = bounds[0][0], bounds[0][1]
        xlow, xhigh = bounds[1][0], bounds[1][1]

        kernel = Gaussian2DKernel(1)  # generate a 9x9 kernel with stdev of 1

        # astropy's convolution replaces the NaN pixels with a kernel-weighted
        # interpolation from their neighbors
        astropy_conv = convolve(raw_depth_mtx[ylow:yhigh, xlow:xhigh], kernel, boundary='extend')
        # extended boundary assumes original data is extended using a constant
        # extrapolation beyond the boundary

        smoothedSQ = np.around(astropy_conv, decimals=3)
        return smoothedSQ

    def depth_gradient(self, smooth_depth, bounds):
        """
        :param smooth_depth: smoothed 2D depth values
        :param bounds: ((ylow, yhigh), (xlow, xhigh)), e.g. ((0, 480), (0, 640))
            for a (480, 640) image. Kept in the signature but not needed for
            the computation.
        :return: (gradient, magnitudes), where gradient is the [d/dy, d/dx] list
            from np.gradient and magnitudes is the per-pixel gradient magnitude
        """
        gradient = np.gradient(smooth_depth)
        magnitudes = np.sqrt(gradient[0] ** 2 + gradient[1] ** 2)
        return gradient, magnitudes
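
To make the NaN-filling behavior of the smoothing step more concrete, here is a NumPy-only sketch of the same idea, sometimes called normalized convolution: blur the data with NaNs set to zero, blur the validity mask with the same kernel, and divide. This only approximates what astropy's `convolve` does and is not a drop-in replacement. The names `gaussian_kernel_1d`, `_blur`, and `nan_aware_smooth` are made up for the example, and it assumes a 2D depth array.

```python
import numpy as np

def gaussian_kernel_1d(sigma=1.0, radius=4):
    """Normalized 1D Gaussian with 2 * radius + 1 taps."""
    x = np.arange(-radius, radius + 1)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()

def _blur(img, k):
    """Separable blur with edge-replicated borders (similar in spirit to boundary='extend')."""
    r = len(k) // 2
    padded = np.pad(img, r, mode="edge")
    rows = np.apply_along_axis(np.convolve, 1, padded, k, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, k, mode="valid")

def nan_aware_smooth(depth, sigma=1.0):
    """Smooth a 2D depth array, filling NaNs from kernel-weighted valid neighbors."""
    depth = np.asarray(depth, dtype=float)
    k = gaussian_kernel_1d(sigma, radius=int(4 * sigma))

    valid = ~np.isnan(depth)
    filled = np.where(valid, depth, 0.0)

    num = _blur(filled, k)               # weighted sum of valid depth values
    den = _blur(valid.astype(float), k)  # sum of the weights that were actually used

    # Pixels with no valid neighbor inside the kernel stay NaN
    out = np.full(depth.shape, np.nan)
    np.divide(num, den, out=out, where=den > 0)
    return out

# A flat depth map with one missing reading
depth = np.full((10, 12), 2.0)
depth[4, 5] = np.nan
smoothed = nan_aware_smooth(depth)
```

Because the missing pixel is rebuilt only from its valid neighbors, a hole in a flat surface is filled with the surrounding depth instead of producing a spurious edge in the gradient.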

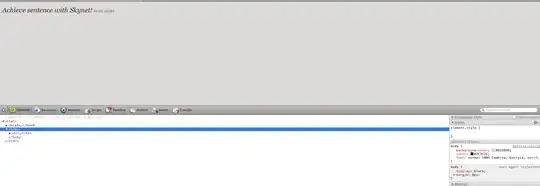

Using this code I was able to get the image shown here. Note that I changed the scene a little.

I asked a related question here: "How to identify contours associated with my objects and find their geometric centroid".

It shows how to find the contours and centroids of the objects in the image.