Suppose I have the following data in a csv file:

'"tom","jones","hello,\nMy name is tom"\x01\n"sarah","smith","hello"\x01\n'

The line terminator is \x01\n. Would it be possible to load this directly into GCS (without first pre-formatting it)? My thinking process was to do:

- Load it into CSV using a non-standard separator (such as

\x00ff) to get all data on one line. - Then do basic DMLs to 'clean up' the data and reformat it.

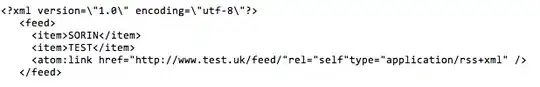

However, we run into an issue when we have run-on lines, as BQ doesn't 'support' (if you want to call it that) row ordering. Here is what my data looks like in BQ now:

As we can see the line orderings don't work, so it'd be impossible to 'recombine the data', for example, with a UDF to get the correct csv data we need.

Are there any other possible approaches here? Just to clarify, I'm looking to transform a CSV file that is already on GCS via BigQuery, without having to download that file to a separate server for processing before loading into BQ.

For reference, here is the code I'm currently using:

# /tmp/schema_external_nonstandard_csv.json

{

"schema": {

"fields": [

{

"name": "data",

"type": "STRING"

}

]

},

"sourceFormat": "CSV",

"sourceUris": [

"gs://XY-bq/ns.csv"

],

"csvOptions": {

"fieldDelimiter": "\u00ff",

"quote": ""

},

"maxBadRecords": 1000000

}

$ bq mk --external_table_definition=/tmp/schema_external_nonstandard_csv.json datadocs-163219:bqtesting.ns

$ bq query --nouse_legacy_sql 'CREATE TABLE `XY-163219.bqtesting.ns1` AS select * from `XY-163219.bqtesting.ns`'