Here's an example of one specific approach using fake data. If you have more specific requirements, those should be added to your question.

First I'll make some fake data with 30 columns. For example's sake, I'll make each column have random numbers with a mean and sd corresponding to the column. The first column will have an average and sd of 1, the 2nd an average and sd of 2, etc.

    library(tidyverse)

    columns <- 30
    samples <- 100000
    set.seed(42)

    # Build the data in long format (one row per cell), then spread it wide.
    # tibble() replaces the deprecated data_frame().
    my_data <- tibble(row = rep(1:samples, times = columns),
                      col = rep(1:columns, each = samples),
                      value = rnorm(samples * columns, mean = col, sd = col)) %>%
      mutate(col = paste0("c", str_pad(col, 2, pad = "0"))) %>%  # c01, c02, ...
      spread(col, value)

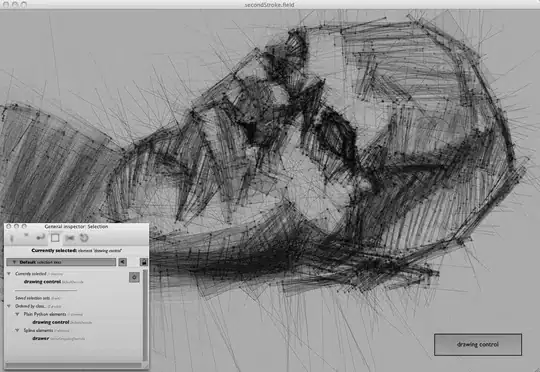

Here I'll confirm that each column has the average and dispersion I expect:

    my_data %>%
      gather(col, value, -row) %>%
      sample_n(10000) %>%  # 10k dots is enough and is quicker to plot than all of them.
      ggplot(aes(col, value)) +
      geom_point(alpha = 0.1, size = 0.5, color = "gray40") +
      geom_boxplot(fill = NA, outlier.shape = NA) +
      scale_y_continuous(breaks = 60 * -2:2)  # breaks at -120, -60, 0, 60, 120
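The plot can be backed up with a numeric check. This is a minimal sketch, assuming a smaller regenerated version of the fake data so that it runs on its own; `check_data` and `col_stats` are names introduced here for illustration. Each column's observed mean and sd should both come out close to its column number.

```r
library(dplyr)

set.seed(42)
n_cols <- 5      # fewer columns than above, just to keep the check quick
n_rows <- 20000

# Same recipe as above: column k is drawn with mean k and sd k
check_data <- tibble(row = rep(1:n_rows, times = n_cols),
                     col = rep(1:n_cols, each = n_rows)) %>%
  mutate(value = rnorm(n(), mean = col, sd = col))

# One row per column with its observed mean and standard deviation
col_stats <- check_data %>%
  group_by(col) %>%
  summarize(avg = mean(value), std_dev = sd(value))

col_stats
```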

For this approach, I'll rank the rows by how far they are from the column averages and keep the closest ones. I'll define "closest to average" as having the lowest root-mean-square (RMS) difference from the averages.

Here I'll calculate how far each value is from the average for its column, then for each row take the RMS of those differences across all columns. I'll keep the 5 rows with the smallest RMS distance in a data frame called most_typical, and the 5 rows with the largest in least_typical.

    my_data_how_typical <- my_data %>%
      gather(col, value, -row) %>%                 # convert to long format
      group_by(col) %>%                            # group by column
      mutate(dist_abs = value - mean(value)) %>%   # signed distance from that column's mean
      ungroup() %>%
      group_by(row) %>%
      summarize(avg_dist_abs = sqrt(mean(dist_abs ^ 2)))  # RMS distance across columns

    most_typical  <- top_n(my_data_how_typical, 5, wt = -avg_dist_abs)  # 5 smallest distances
    least_typical <- top_n(my_data_how_typical, 5, wt = avg_dist_abs)   # 5 largest distances

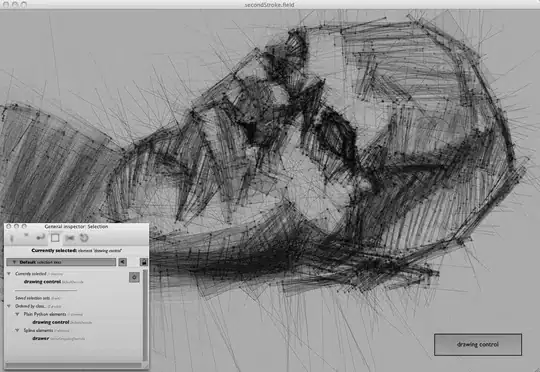
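To make the distance measure concrete, here is the same calculation on a tiny made-up matrix in base R. The values and the names `toy`, `centered`, and `rms_dist` are illustrative assumptions, not part of the data above.

```r
# Three rows, two columns; matrix() fills by column,
# so c01 = 1, 2, 3 and c02 = 10, 20, 30 (column means 2 and 20)
toy <- matrix(c(1, 2, 3,
                10, 20, 30),
              ncol = 2,
              dimnames = list(NULL, c("c01", "c02")))

# Subtract each column's mean from its values
centered <- sweep(toy, 2, colMeans(toy))

# Root-mean-square of each row's deviations across the columns
rms_dist <- sqrt(rowMeans(centered^2))

rms_dist
which.min(rms_dist)  # index of the most typical row
```

Row 2 sits exactly on both column means, so its distance is 0 and it is the most typical row. Rows 1 and 3 are equally far away, and most of their distance comes from the wider second column.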

Now I'll plot the five most and five least typical rows against the whole. The green most_typical rows tend to stay near the averages, while the red least_typical rows have some extreme values. By keeping the rows that deviate least from the averages (you could keep 1000 instead of just 5), you'll end up with a subset whose column averages are close to the overall averages. But that subset will necessarily also have a lot less variance than the original data, since it intentionally excludes rows with extreme values.

    my_data %>%
      gather(col, value, -row) %>%
      sample_n(10000) %>%
      ggplot(aes(col, value)) +
      geom_point(alpha = 0.1, size = 0.5, color = "gray40") +
      # least typical rows in red
      geom_line(data = least_typical %>%
                  select(row) %>%
                  left_join(my_data, by = "row") %>%
                  gather(col, value, -row),
                aes(col, value, group = row), color = "red") +
      # most typical rows in green
      geom_line(data = most_typical %>%
                  select(row) %>%
                  left_join(my_data, by = "row") %>%
                  gather(col, value, -row),
                aes(col, value, group = row), color = "green")
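The trade-off described above (similar averages, less variance) can also be checked numerically. The following is a rough sketch rather than part of the original approach: it regenerates a smaller fake data set so it runs on its own, keeps the 1000 rows closest to the averages, and compares per-column mean and sd. The names `long_data`, `how_typical`, `kept_rows`, and `comparison` are assumptions made for this example.

```r
library(dplyr)

set.seed(42)
n_cols <- 5
n_rows <- 20000

# Long-format fake data: column k has mean k and sd k
long_data <- tibble(row = rep(1:n_rows, times = n_cols),
                    col = rep(1:n_cols, each = n_rows)) %>%
  mutate(value = rnorm(n(), mean = col, sd = col))

# RMS distance of each row from the column averages
how_typical <- long_data %>%
  group_by(col) %>%
  mutate(dist = value - mean(value)) %>%
  group_by(row) %>%
  summarize(rms_dist = sqrt(mean(dist^2)))

# Keep the 1000 rows closest to the averages
kept_rows <- how_typical %>%
  arrange(rms_dist) %>%
  head(1000)

# Per-column mean and sd: all rows vs. the kept rows only
comparison <- long_data %>%
  mutate(kept = row %in% kept_rows$row) %>%
  group_by(col) %>%
  summarize(mean_all  = mean(value),
            mean_kept = mean(value[kept]),
            sd_all    = sd(value),
            sd_kept   = sd(value[kept]))

comparison
```

If the subset behaves as described, `mean_kept` should track `mean_all` in every column while `sd_kept` comes out smaller than `sd_all`.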

Your data will look different and your definition of "closest to average" may be different, but hopefully this will steer you in the right direction.
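As one example of a different definition: because the columns here have very different spreads, the raw RMS distance is dominated by the high-variance columns. A possible alternative, sketched below under the assumption that every column should count equally, divides each deviation by its column's sd before combining them. The names `how_typical_scaled`, `rms_z`, and `most_typical_scaled` are hypothetical, and the data is regenerated at a smaller size so the block runs on its own.

```r
library(dplyr)

set.seed(42)
n_cols <- 5
n_rows <- 2000

# Long-format fake data: column k has mean k and sd k
long_data <- tibble(row = rep(1:n_rows, times = n_cols),
                    col = rep(1:n_cols, each = n_rows)) %>%
  mutate(value = rnorm(n(), mean = col, sd = col))

how_typical_scaled <- long_data %>%
  group_by(col) %>%
  mutate(z = (value - mean(value)) / sd(value)) %>%  # deviation in units of that column's sd
  group_by(row) %>%
  summarize(rms_z = sqrt(mean(z^2)))                 # RMS of the scaled deviations

# The 5 rows closest to average under the scaled definition
most_typical_scaled <- how_typical_scaled %>%
  arrange(rms_z) %>%
  head(5)

most_typical_scaled
```

Whether scaling is appropriate depends on whether a large deviation in a wide column should matter more than the same relative deviation in a narrow one.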