I'm having a lot of trouble visualizing the waveform of an audio file (.mp3, .wav) on a canvas, and I'm pretty clueless about how to achieve this.

I'm using the Web Audio API, and I've been adapting the code from this MDN article to achieve my goal: https://developer.mozilla.org/en-US/docs/Web/API/Web_Audio_API/Visualizations_with_Web_Audio_API#Creating_a_waveformoscilloscope. Unfortunately, I'm running into problems. Examples are below.

How the waveform looks on the canvas:

How the waveform of the song should look (this is from Ableton):

If it matters, the audio buffer for this particular song has a length of 8832992 sample frames.

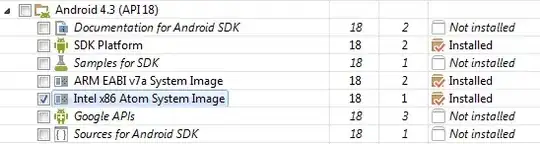

The AudioBuffer (songAudioBuffer) for this song looks like:

    AudioBuffer {
      duration: 184.02066666666667,
      length: 8832992,
      numberOfChannels: 2,
      sampleRate: 48000
    }
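
The numbers in that dump are consistent with each other: duration is length divided by sampleRate. The quick check below also shows how many samples end up on each pixel column when the whole song is squeezed onto one canvas. The canvas width of 1000 px is only an assumption for illustration (my real WIDTH may differ).

```javascript
// Values copied from the AudioBuffer dump above.
const length = 8832992;   // sample frames per channel
const sampleRate = 48000; // sample frames per second

// duration (seconds) = length / sampleRate
const duration = length / sampleRate;

// Assumption for illustration only: a canvas that is 1000 px wide.
const exampleWidth = 1000;

// How many samples share one horizontal pixel, and how far x advances per sample.
const samplesPerPixel = length / exampleWidth;
const sliceWidth = exampleWidth / length;

console.log('duration (s):', duration);
console.log('samples per pixel:', samplesPerPixel);
console.log('x step per sample (px):', sliceWidth);
```

With thousands of samples per pixel, drawing one lineTo per sample means most segments overlap within the same column.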

Current program flow: the user selects a file from their computer -> the user clicks load -> a FileReader reads the audio file into an ArrayBuffer -> the ArrayBuffer is decoded into an AudioBuffer.

    const audioFile = document.querySelector('.audioFile'); // input element the user loads the audio file into
    const load = document.querySelector('.load'); // button element

    load.addEventListener('click', () => {
      const fileReader = new FileReader();
      fileReader.readAsArrayBuffer(audioFile.files[0]);
      fileReader.onload = function(evt) {
        audioContext = new AudioContext(); // global var
        // decodeAudioData returns a Promise that resolves to an AudioBuffer
        audioBuffer = audioContext.decodeAudioData(fileReader.result); // global var
        audioBuffer.then((res) => {
          songAudioBuffer = res; // songAudioBuffer is a global var
          draw();
        })
        .catch((err) => {
          console.log(err);
        });
      };
    });

    function draw() {
      const canvas = document.querySelector('.can'); // canvas element
      const canvasCtx = canvas.getContext('2d');

      canvas.style.width = WIDTH + 'px';
      canvas.style.height = HEIGHT + 'px';

      canvasCtx.fillStyle = 'rgb(260, 200, 200)';
      canvasCtx.fillRect(0, 0, WIDTH, HEIGHT);
      canvasCtx.lineWidth = 2;
      canvasCtx.strokeStyle = 'rgb(0, 0, 0)';
      canvasCtx.beginPath();

      const analyser = audioContext.createAnalyser();
      analyser.fftSize = 2048;

      const bufferLength = songAudioBuffer.getChannelData(0).length;
      var dataArray = new Float32Array(songAudioBuffer.getChannelData(0));
      analyser.getFloatTimeDomainData(songAudioBuffer.getChannelData(0));

      var sliceWidth = WIDTH / bufferLength;
      var x = 0;

      for (var i = 0; i < bufferLength; i++) {
        var v = dataArray[i] * 2;
        var y = v + (HEIGHT / 4); // Dividing height by 4 places the waveform in the center of the canvas for some reason

        if (i === 0) canvasCtx.moveTo(x, y);
        else {
          canvasCtx.lineTo(x, y);
        }

        x += sliceWidth;
      }

      canvasCtx.lineTo(canvas.width, canvas.height / 2);
      canvasCtx.stroke();
    }
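
For comparison, a common way to draw a static overview of a whole file is to skip the AnalyserNode and reduce the decoded channel data to one min/max pair per pixel column, then draw one vertical line per column. Below is a minimal sketch of that idea, not a confirmed fix for my code. The helpers computePeaks, sampleToY, and drawPeaks are hypothetical names I made up (they are not part of the Web Audio API), and the sketch assumes samples are in the range -1 to 1, as returned by getChannelData.

```javascript
// Hypothetical helper: reduce samples to one [min, max] pair per pixel column.
function computePeaks(samples, width) {
  const samplesPerPixel = samples.length / width;
  const peaks = [];
  for (let px = 0; px < width; px++) {
    const start = Math.floor(px * samplesPerPixel);
    // Always cover at least one sample per column.
    const end = Math.max(start + 1, Math.floor((px + 1) * samplesPerPixel));
    let min = Infinity;
    let max = -Infinity;
    for (let i = start; i < end && i < samples.length; i++) {
      if (samples[i] < min) min = samples[i];
      if (samples[i] > max) max = samples[i];
    }
    // Empty input: fall back to silence for this column.
    peaks.push(min === Infinity ? [0, 0] : [min, max]);
  }
  return peaks;
}

// Hypothetical helper: map a sample in [-1, 1] to a canvas y coordinate,
// so +1 lands at the top (0), 0 at the middle, and -1 at the bottom (height).
function sampleToY(value, height) {
  return ((1 - value) * height) / 2;
}

// Hypothetical helper: draw one vertical line per column on a 2D context.
function drawPeaks(ctx, peaks, height) {
  ctx.beginPath();
  peaks.forEach(([min, max], x) => {
    ctx.moveTo(x + 0.5, sampleToY(max, height));
    ctx.lineTo(x + 0.5, sampleToY(min, height));
  });
  ctx.stroke();
}
```

In the page this would presumably be called as drawPeaks(canvasCtx, computePeaks(songAudioBuffer.getChannelData(0), canvas.width), canvas.height), assuming canvas.width and canvas.height (the drawing-buffer size, not the CSS style size) are set to the intended dimensions.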