We recently upgraded our EMR release label from emr-5.16.0 to emr-5.20.0, which uses Spark 2.4 instead of Spark 2.3.1.

At first, performance was terrible: jobs took much longer than before.

Eventually we set `maximizeResourceAllocation` to `true` (perhaps it was enabled by default in emr-5.16.0), and things started to look better.

However, some stages still take much longer than before (while others are faster).

For example:

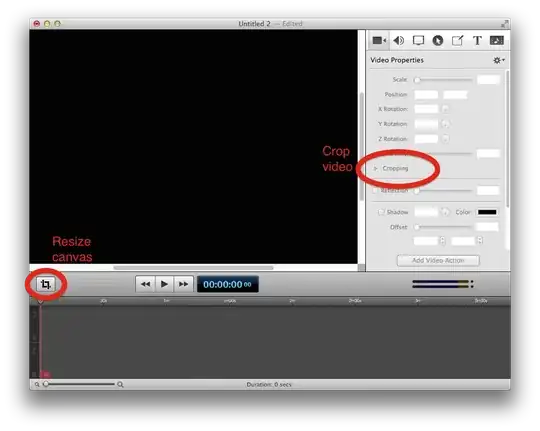

Spark 2.4:

Images taken from the application history.

With Spark 2.4, the `keyBy` stage took more than 10x as long as it did with Spark 2.3.1.

It is related to the number of partitions. In Spark 2.3.1, the RDD produced by the `mapToPair` operation had 5580 partitions, whereas in Spark 2.4 it had the cluster's default parallelism: 128, because the job was running on a 64-core cluster.
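To make the difference concrete, here is a small back-of-the-envelope sketch (plain Python, no Spark needed) comparing the three partition layouts mentioned here. It assumes the default parallelism is 2 × total cores, which matches the 128 partitions observed on 64 cores, and that each core runs one task at a time. The record count is a hypothetical value chosen only for illustration, and the helper names are made up for this sketch.

```python
import math


def emr_default_parallelism(total_cores: int) -> int:
    """Assumed rule: two tasks per core (consistent with 128 partitions on 64 cores)."""
    return 2 * total_cores


def task_waves(num_partitions: int, total_cores: int) -> int:
    """Number of scheduling 'waves' if each core runs one task at a time."""
    return math.ceil(num_partitions / total_cores)


def records_per_partition(total_records: int, num_partitions: int) -> float:
    """Average records per task, assuming evenly sized partitions."""
    return total_records / num_partitions


CORES = 64
TOTAL_RECORDS = 100_000_000  # hypothetical input size, for illustration only

layouts = [
    ("Spark 2.3.1", 5580),
    ("Spark 2.4 default", emr_default_parallelism(CORES)),
    ("repartition(10000)", 10000),
]

for label, parts in layouts:
    print(
        f"{label:20} partitions={parts:6} "
        f"waves={task_waves(parts, CORES):4} "
        f"records/task={records_per_partition(TOTAL_RECORDS, parts):,.0f}"
    )
```

With about 44x fewer partitions (5580 / 128), each task in the Spark 2.4 run has to process roughly 44x more data. This does not by itself prove the cause of the slowdown, but much larger tasks are more likely to spill to disk or to leave the whole stage waiting on a single slow task.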

I tried forcing a repartition to 10000 partitions, and the `keyBy` stage finished in only 1.2 minutes. However, I don't think this is a good or definitive solution.

Is this a known issue? Should I try setting the default parallelism (`spark.default.parallelism`) to a larger value?
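For reference, a minimal sketch of how a larger default parallelism could be passed to the cluster, assuming it is set through EMR's configuration JSON. The `spark` classification carries `maximizeResourceAllocation`, and the `spark-defaults` classification carries ordinary Spark properties. The helper name and the value 5580 (reused from the Spark 2.3.1 run) are hypothetical. Whether an explicit value takes precedence over the one `maximizeResourceAllocation` computes is an assumption worth checking in the Environment tab of the Spark UI. Per the Spark configuration docs, `spark.default.parallelism` only applies to RDDs from operations such as `join`, `reduceByKey` and `parallelize` when no partition count is given, so it would only help if the 128-partition RDD comes from one of those.

```python
import json


def emr_spark_configurations(default_parallelism: int,
                             maximize_resources: bool = True) -> str:
    """Build an EMR configurations JSON document (hypothetical helper).

    - "spark" classification: EMR-specific maximizeResourceAllocation flag.
    - "spark-defaults" classification: regular Spark properties.
    """
    configs = [
        {
            "Classification": "spark",
            "Properties": {
                "maximizeResourceAllocation": str(maximize_resources).lower()
            },
        },
        {
            "Classification": "spark-defaults",
            "Properties": {
                "spark.default.parallelism": str(default_parallelism)
            },
        },
    ]
    return json.dumps(configs, indent=2)


if __name__ == "__main__":
    # Hypothetical value: the partition count seen with Spark 2.3.1.
    print(emr_spark_configurations(5580))
```

For a single job, the same property can instead be passed at submit time with `spark-submit --conf spark.default.parallelism=5580`, which avoids changing the cluster-wide defaults.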