Update #6: I discovered I was accessing the RGB values improperly. I assumed I was reading from an `int[]`, but I was actually reading individual bytes from a `byte[]`. After changing the code to read from an `int[]`, I get the following image:
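With `GL_RGBA`/`GL_UNSIGNED_BYTE`, the read-back data holds four separate bytes per pixel in R, G, B, A order. A single `byte` never contains a packed `0xRRGGBB` value, so an expression like `rgbByteArray[index] & 0xff0000` (as in Step 3) cannot extract the red channel: after Java's sign extension it is either `0` or `0xff0000`. Below is a minimal sketch, assuming a tightly packed RGBA `byte[]` (no row padding), that contrasts byte-wise access with access through packed `0xAARRGGBB` ints. The class and method names are hypothetical.

```java
/** Hypothetical helper showing two ways to read one pixel's R, G, B values. */
final class RgbaAccess {
    private RgbaAccess() {}

    /** Reads pixel p from a tightly packed RGBA byte array (4 bytes per pixel). */
    static int[] fromBytes(byte[] rgba, int p) {
        int base = 4 * p;                 // each pixel occupies 4 consecutive bytes
        int r = rgba[base] & 0xff;        // & 0xff undoes Java's sign extension
        int g = rgba[base + 1] & 0xff;
        int b = rgba[base + 2] & 0xff;
        return new int[] {r, g, b};       // alpha (base + 3) is ignored
    }

    /** Reads pixel p from an int array where each element is packed as 0xAARRGGBB. */
    static int[] fromPackedInts(int[] argb, int p) {
        int c = argb[p];
        return new int[] {(c >> 16) & 0xff, (c >> 8) & 0xff, c & 0xff};
    }
}
```

If the same RGBA bytes are instead viewed through an `IntBuffer` in native order on a little-endian device, each int comes out as `0xAABBGGRR`, so the shift amounts for R and B swap relative to `fromPackedInts`.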

Update #5: Adding the code I use to get the RGBA `ByteBuffer`, for reference:

```java
private void screenScrape() {
    Log.d(TAG, "In screenScrape");

    //read pixels from frame buffer into PBO (GL_PIXEL_PACK_BUFFER)
    mSurface.queueEvent(new Runnable() {
        @Override
        public void run() {
            Log.d(TAG, "In Screen Scrape 1");

            //generate and bind buffer ID
            GLES30.glGenBuffers(1, pboIds);
            checkGlError("Gen Buffers");
            GLES30.glBindBuffer(GLES30.GL_PIXEL_PACK_BUFFER, pboIds.get(0));
            checkGlError("Bind Buffers");

            //creates and initializes data store for PBO. Any pre-existing data store is deleted
            GLES30.glBufferData(GLES30.GL_PIXEL_PACK_BUFFER, (mWidth * mHeight * 4), null, GLES30.GL_STATIC_READ);
            checkGlError("Buffer Data");

            //glReadPixelsPBO(0,0,w,h,GLES30.GL_RGB,GLES30.GL_UNSIGNED_SHORT_5_6_5,0);
            glReadPixelsPBO(0, 0, mWidth, mHeight, GLES30.GL_RGBA, GLES30.GL_UNSIGNED_BYTE, 0);
            checkGlError("Read Pixels");

            //GLES30.glReadPixels(0,0,w,h,GLES30.GL_RGBA,GLES30.GL_UNSIGNED_BYTE,intBuffer);
        }
    });

    //map PBO data into client address space
    mSurface.queueEvent(new Runnable() {
        @Override
        public void run() {
            Log.d(TAG, "In Screen Scrape 2");

            //read pixels from PBO into a byte buffer for processing. Unmap buffer for use in next pass
            mapBuffer = ((ByteBuffer) GLES30.glMapBufferRange(GLES30.GL_PIXEL_PACK_BUFFER, 0, 4 * mWidth * mHeight, GLES30.GL_MAP_READ_BIT)).order(ByteOrder.nativeOrder());
            checkGlError("Map Buffer");
            GLES30.glUnmapBuffer(GLES30.GL_PIXEL_PACK_BUFFER);
            checkGlError("Unmap Buffer");

            isByteBufferEmpty(mapBuffer, "MAP BUFFER");
            convertColorSpaceByteArray(mapBuffer);
            mapBuffer.clear();
        }
    });
}
```
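`glReadPixels` uses OpenGL's lower-left origin, so the first row written into the PBO is the bottom row of the screen. If the encoded frames come out upside down, the rows can be reversed on the CPU before colour conversion. Because the helper below takes a `byte[]`, it would operate on a copy of the mapped data. Under the GLES 3.0 spec, the mapping returned by `glMapBufferRange` is no longer valid once `glUnmapBuffer` is called, so that copy would need to be made before unmapping. This is a minimal sketch, assuming tightly packed RGBA rows. With 4-byte pixels, the default `GL_PACK_ALIGNMENT` of 4 adds no row padding. `flipRows` is a hypothetical name.

```java
/** Hypothetical helper: reverses the row order of a tightly packed RGBA image. */
final class RowFlip {
    private RowFlip() {}

    static byte[] flipRows(byte[] rgba, int width, int height) {
        int rowBytes = width * 4;                      // 4 bytes per RGBA pixel, no padding
        if (rgba.length < rowBytes * height) {
            throw new IllegalArgumentException("buffer too small for " + width + "x" + height);
        }
        byte[] out = new byte[rowBytes * height];
        for (int row = 0; row < height; row++) {
            // GL row 0 is the bottom of the screen, so it becomes the last output row
            System.arraycopy(rgba, row * rowBytes, out, (height - 1 - row) * rowBytes, rowBytes);
        }
        return out;
    }
}
```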

Update #4: For reference, here is the original image to compare against.

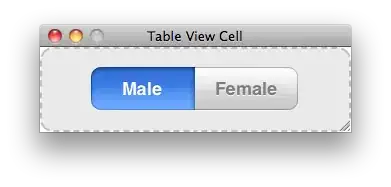

Update #3: This is the output image after interleaving all of the U/V data into a single array and passing it to the `Image` object at `inputImagePlanes[1]`; `inputImagePlanes[2]` is unused.

The next image uses the same interleaved UV data, but loaded into `inputImagePlanes[2]` instead of `inputImagePlanes[1]`.

Update #2: This is the output image after padding the U/V buffers with a zero between each byte of "real" data (`uArray[uvByteIndex] = (byte) 0;`).

Update #1: As suggested in a comment, here are the pixel and row strides I get from calling `getPixelStride()` and `getRowStride()`:

- Y plane: pixel stride = 1, row stride = 960
- U plane: pixel stride = 2, row stride = 960
- V plane: pixel stride = 2, row stride = 960

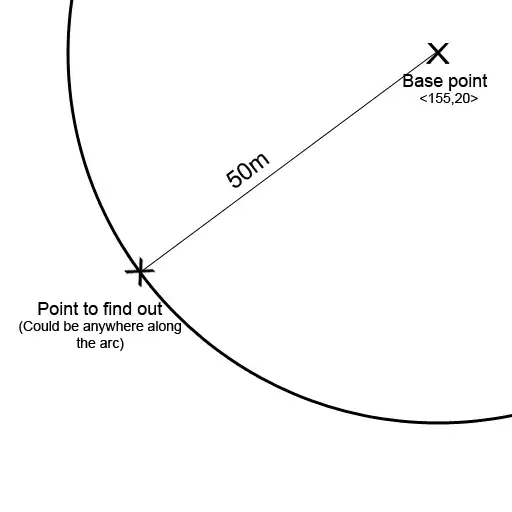
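The strides in Update #1 mean that sample (column, row) of a plane lives at byte offset `row * rowStride + column * pixelStride` in that plane's `ByteBuffer`. With a chroma pixel stride of 2, consecutive U samples are two bytes apart. On devices that report this, the U and V buffers are typically views into one interleaved (semi-planar) region, offset by one byte. In that case, writing a tightly packed quarter-size array with a single `put()` does not produce the expected layout. Below is a minimal sketch of a stride-aware copy, with a plain `ByteBuffer` standing in for `Image.Plane.getBuffer()`. The helper name and the absolute-`put` approach are assumptions, not the author's code.

```java
import java.nio.ByteBuffer;

/** Hypothetical helper: copies a tightly packed plane into a strided plane buffer. */
final class StridedPlaneCopy {
    private StridedPlaneCopy() {}

    /**
     * @param src         packed samples, planeWidth * planeHeight bytes, row-major
     * @param dst         destination buffer (e.g. what Image.Plane.getBuffer() returns)
     * @param pixelStride distance in bytes between horizontally adjacent samples
     * @param rowStride   distance in bytes between the starts of adjacent rows
     */
    static void copy(byte[] src, int planeWidth, int planeHeight,
                     ByteBuffer dst, int pixelStride, int rowStride) {
        int base = dst.position();          // absolute puts leave the position unchanged
        for (int row = 0; row < planeHeight; row++) {
            for (int col = 0; col < planeWidth; col++) {
                dst.put(base + row * rowStride + col * pixelStride,
                        src[row * planeWidth + col]);
            }
        }
    }
}
```

For a `mWidth` x `mHeight` frame, the chroma planes would be copied with `planeWidth = mWidth / 2` and `planeHeight = mHeight / 2`, using each plane's own reported strides.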

The goal of my application is to read pixels from the screen, compress them, and then send the resulting H.264 stream over WiFi to be played by a receiver.

Currently I'm using the `MediaMuxer` class to convert the raw H.264 stream to an MP4 and save it to a file. However, the resulting video is messed up and I can't figure out why. Let's walk through some of the processing and see if anything jumps out.

Step 1: Set up the encoder. I'm currently capturing a screen image once every 2 seconds, and using `"video/avc"` for `MIME_TYPE`.

```java
//create codec for compression
try {
    mCodec = MediaCodec.createEncoderByType(MIME_TYPE);
} catch (IOException e) {
    Log.d(TAG, "FAILED: Initializing Media Codec");
}

//set up format for codec
MediaFormat mFormat = MediaFormat.createVideoFormat(MIME_TYPE, mWidth, mHeight);
mFormat.setInteger(MediaFormat.KEY_COLOR_FORMAT, MediaCodecInfo.CodecCapabilities.COLOR_FormatYUV420Flexible);
mFormat.setInteger(MediaFormat.KEY_BIT_RATE, 16000000);
mFormat.setInteger(MediaFormat.KEY_FRAME_RATE, 1/2);
mFormat.setInteger(MediaFormat.KEY_I_FRAME_INTERVAL, 5);
```
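The `1/2` passed for `KEY_FRAME_RATE` is Java integer division, so the encoder is configured with a frame rate of 0. With a 2-second capture interval, the matching presentation-timestamp step is 2,000,000 microseconds. The `MediaFormat` documentation notes that video codecs also accept float values for `KEY_FRAME_RATE`, so `mFormat.setFloat(MediaFormat.KEY_FRAME_RATE, 0.5f)` may be an option, though how a particular encoder handles rates below 1 fps would need testing. The small sketch below illustrates only the arithmetic. Its constant names are hypothetical, and the post's actual `MICROSECONDS_BETWEEN_FRAMES` value is not shown.

```java
/** Hypothetical constants illustrating the capture-rate arithmetic. */
final class FrameTiming {
    private FrameTiming() {}

    static final int INT_DIVISION_RATE = 1 / 2;          // integer division: evaluates to 0
    static final float FLOAT_RATE = 1f / 2f;             // 0.5 frames per second
    static final long CAPTURE_INTERVAL_US = 2_000_000L;  // one frame every 2 seconds

    /** Presentation time in microseconds for the n-th captured frame (n starts at 0). */
    static long presentationTimeUs(long frameIndex) {
        return frameIndex * CAPTURE_INTERVAL_US;
    }
}
```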

Step 2: Read pixels from the screen. This is done using OpenGL ES, and the pixels are read out in RGBA format. (I've confirmed this part works.)

Step 3: Convert the RGBA pixels to YUV420 (IYUV) format using the following method. Note that it calls two different encoding methods at the end.

private void convertColorSpaceByteArray(ByteBuffer rgbBuffer) {

long startTime = System.currentTimeMillis();

Log.d(TAG, "In convertColorspace");

final int frameSize = mWidth * mHeight;

final int chromaSize = frameSize / 4;

byte[] rgbByteArray = new byte[rgbBuffer.remaining()];

rgbBuffer.get(rgbByteArray);

byte[] yuvByteArray = new byte[inputBufferSize];

Log.d(TAG, "Input Buffer size = " + inputBufferSize);

byte[] yArray = new byte[frameSize];

byte[] uArray = new byte[(frameSize / 4)];

byte[] vArray = new byte[(frameSize / 4)];

isByteBufferEmpty(rgbBuffer, "RGB BUFFER");

int yIndex = 0;

int uIndex = frameSize;

int vIndex = frameSize + chromaSize;

int yByteIndex = 0;

int uvByteIndex = 0;

int R, G, B, Y, U, V;

int index = 0;

//this loop controls the rows

for (int i = 0; i < mHeight; i++) {

//this loop controls the columns

for (int j = 0; j < mWidth; j++) {

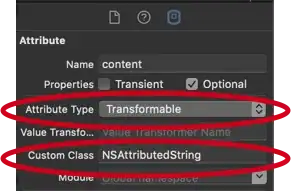

R = (rgbByteArray[index] & 0xff0000) >> 16;

G = (rgbByteArray[index] & 0xff00) >> 8;

B = (rgbByteArray[index] & 0xff);

Y = ((66 * R + 129 * G + 25 * B + 128) >> 8) + 16;

U = ((-38 * R - 74 * G + 112 * B + 128) >> 8) + 128;

V = ((112 * R - 94 * G - 18 * B + 128) >> 8) + 128;

//clamp and load in the Y data

yuvByteArray[yIndex++] = (byte) ((Y < 16) ? 16 : ((Y > 235) ? 235 : Y));

yArray[yByteIndex] = (byte) ((Y < 16) ? 16 : ((Y > 235) ? 235 : Y));

yByteIndex++;

if (i % 2 == 0 && index % 2 == 0) {

//clamp and load in the U & V data

yuvByteArray[uIndex++] = (byte) ((U < 16) ? 16 : ((U > 239) ? 239 : U));

yuvByteArray[vIndex++] = (byte) ((V < 16) ? 16 : ((V > 239) ? 239 : V));

uArray[uvByteIndex] = (byte) ((U < 16) ? 16 : ((U > 239) ? 239 : U));

vArray[uvByteIndex] = (byte) ((V < 16) ? 16 : ((V > 239) ? 239 : V));

uvByteIndex++;

}

index++;

}

}

encodeVideoFromImage(yArray, uArray, vArray);

encodeVideoFromBuffer(yuvByteArray);

}
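For comparison, here is a minimal sketch of the same BT.601 integer conversion (same coefficients as Step 3). It reads each pixel's R, G, B from its own byte and writes an I420 frame: the full Y plane, then the quarter-size U plane, then the V plane. The assumptions are even width and height, tightly packed RGBA input, and one chroma sample per 2x2 block taken from the block's top-left pixel, as the original loop does. For simplicity it clamps to 0..255 rather than the studio-swing ranges used above. The class name is hypothetical.

```java
/** Hypothetical RGBA to I420 (Y plane, then U plane, then V plane) converter. */
final class RgbaToI420 {
    private RgbaToI420() {}

    private static int clamp(int v) {
        return v < 0 ? 0 : (v > 255 ? 255 : v);
    }

    /** Assumes even width/height and tightly packed RGBA input (4 bytes per pixel). */
    static byte[] convert(byte[] rgba, int width, int height) {
        int frameSize = width * height;
        byte[] out = new byte[frameSize * 3 / 2];
        int uIndex = frameSize;                  // U plane starts right after Y
        int vIndex = frameSize + frameSize / 4;  // V plane follows the quarter-size U plane
        for (int row = 0; row < height; row++) {
            for (int col = 0; col < width; col++) {
                int p = 4 * (row * width + col);  // byte offset of this pixel
                int r = rgba[p] & 0xff;
                int g = rgba[p + 1] & 0xff;
                int b = rgba[p + 2] & 0xff;
                out[row * width + col] =
                        (byte) clamp(((66 * r + 129 * g + 25 * b + 128) >> 8) + 16);
                // one chroma sample per 2x2 block, taken from its top-left pixel
                if (row % 2 == 0 && col % 2 == 0) {
                    out[uIndex++] = (byte) clamp(((-38 * r - 74 * g + 112 * b + 128) >> 8) + 128);
                    out[vIndex++] = (byte) clamp(((112 * r - 94 * g - 18 * b + 128) >> 8) + 128);
                }
            }
        }
        return out;
    }
}
```

The output length, `width * height * 3 / 2`, is also the size a planar YUV420 input buffer for the encoder would need to hold.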

Step 4: Encode the data! I currently have two different ways of doing this, and each produces a different output. One uses a `ByteBuffer` returned from `MediaCodec.getInputBuffer()`; the other uses an `Image` returned from `MediaCodec.getInputImage()`.

Encoding using ByteBuffer

```java
private void encodeVideoFromBuffer(byte[] yuvData) {
    Log.d(TAG, "In encodeVideo");

    int inputSize = 0;

    //create index for input buffer
    inputBufferIndex = mCodec.dequeueInputBuffer(0);

    //create the input buffer for submission to encoder
    ByteBuffer inputBuffer = mCodec.getInputBuffer(inputBufferIndex);

    //clear, then copy yuv buffer into the input buffer
    inputBuffer.clear();
    inputBuffer.put(yuvData);

    //flip buffer before reading data out of it
    inputBuffer.flip();

    mCodec.queueInputBuffer(inputBufferIndex, 0, inputBuffer.remaining(), presentationTime, 0);
    presentationTime += MICROSECONDS_BETWEEN_FRAMES;

    sendToWifi();
}
```
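`encodeVideoFromBuffer` passes the encoder planar I420 data: all Y, then all U, then all V. If the encoder's `ByteBuffer` input is actually semi-planar, which the chroma pixel stride of 2 in Update #1 hints at, the encoder would read that chroma region as interleaved U/V pairs. Below is a minimal sketch of repacking I420 into NV12 (the Y plane followed by U/V pairs), assuming even dimensions and no row padding. Whether a given encoder expects NV12 or NV21 (V first) is device-specific and is an assumption here. The plane offsets and strides reported by `getInputImage()` are one way to check. The class name is hypothetical.

```java
/** Hypothetical repacker: planar I420 (Y, U, V) to semi-planar NV12 (Y, then UVUV...). */
final class I420ToNv12 {
    private I420ToNv12() {}

    static byte[] convert(byte[] i420, int width, int height) {
        int frameSize = width * height;
        int chromaSize = frameSize / 4;
        if (i420.length < frameSize + 2 * chromaSize) {
            throw new IllegalArgumentException("I420 buffer too small");
        }
        byte[] nv12 = new byte[frameSize + 2 * chromaSize];
        System.arraycopy(i420, 0, nv12, 0, frameSize);                   // Y plane is unchanged
        for (int k = 0; k < chromaSize; k++) {
            nv12[frameSize + 2 * k] = i420[frameSize + k];                  // U sample
            nv12[frameSize + 2 * k + 1] = i420[frameSize + chromaSize + k]; // V sample
        }
        return nv12;
    }
}
```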

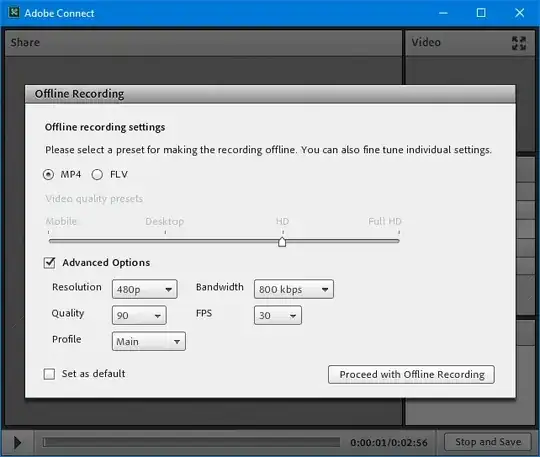

And the associated output image (note: I took a screenshot of the MP4)

Encoding using Image

```java
private void encodeVideoFromImage(byte[] yToEncode, byte[] uToEncode, byte[] vToEncode) {
    Log.d(TAG, "In encodeVideo");

    int inputSize = 0;

    //create index for input buffer
    inputBufferIndex = mCodec.dequeueInputBuffer(0);

    //create the input buffer for submission to encoder
    Image inputImage = mCodec.getInputImage(inputBufferIndex);
    Image.Plane[] inputImagePlanes = inputImage.getPlanes();

    ByteBuffer yPlaneBuffer = inputImagePlanes[0].getBuffer();
    ByteBuffer uPlaneBuffer = inputImagePlanes[1].getBuffer();
    ByteBuffer vPlaneBuffer = inputImagePlanes[2].getBuffer();

    yPlaneBuffer.put(yToEncode);
    uPlaneBuffer.put(uToEncode);
    vPlaneBuffer.put(vToEncode);

    yPlaneBuffer.flip();
    uPlaneBuffer.flip();
    vPlaneBuffer.flip();

    mCodec.queueInputBuffer(inputBufferIndex, 0, inputBufferSize, presentationTime, 0);
    presentationTime += MICROSECONDS_BETWEEN_FRAMES;

    sendToWifi();
}
```

And the associated output image (note: I took a screenshot of the MP4)

Step 5: Convert the H.264 stream to MP4. Finally, I grab the output buffer from the codec and use `MediaMuxer` to convert the raw H.264 stream into an MP4 that I can play back to check for correctness.

```java
private void sendToWifi() {
    Log.d(TAG, "In sendToWifi");

    MediaCodec.BufferInfo mBufferInfo = new MediaCodec.BufferInfo();

    //Check to see if encoder has output before proceeding
    boolean waitingForOutput = true;
    boolean outputHasChanged = false;
    int outputBufferIndex = 0;

    while (waitingForOutput) {
        //access the output buffer from the codec
        outputBufferIndex = mCodec.dequeueOutputBuffer(mBufferInfo, -1);

        if (outputBufferIndex == MediaCodec.INFO_OUTPUT_FORMAT_CHANGED) {
            outputFormat = mCodec.getOutputFormat();
            outputHasChanged = true;
            Log.d(TAG, "OUTPUT FORMAT HAS CHANGED");
        }

        if (outputBufferIndex >= 0) {
            waitingForOutput = false;
        }
    }

    //this buffer now contains the compressed YUV data, ready to be sent over WiFi
    ByteBuffer outputBuffer = mCodec.getOutputBuffer(outputBufferIndex);

    //adjust output buffer position and limit. As of API 19, this is not automatic
    if (mBufferInfo.size != 0) {
        outputBuffer.position(mBufferInfo.offset);
        outputBuffer.limit(mBufferInfo.offset + mBufferInfo.size);
    }

    ////////////////////////////////FOR DEBUG/////////////////////////////
    if (muxerNotStarted && outputHasChanged) {
        //set up track
        mTrackIndex = mMuxer.addTrack(outputFormat);
        mMuxer.start();
        muxerNotStarted = false;
    }

    if (!muxerNotStarted) {
        mMuxer.writeSampleData(mTrackIndex, outputBuffer, mBufferInfo);
    }
    ////////////////////////////END DEBUG//////////////////////////////////

    //release the buffer
    mCodec.releaseOutputBuffer(outputBufferIndex, false);
    muxerPasses++;
}
```
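An H.264 encoder's first output buffer is usually codec-specific data (SPS/PPS) flagged with `MediaCodec.BUFFER_FLAG_CODEC_CONFIG`. `MediaMuxer` already receives this data through the format passed to `addTrack()`, and widely copied examples such as `EncodeAndMuxTest` skip those buffers rather than writing them as samples. Below is a pure-Java sketch of that decision so it can run without Android. The constant mirrors the value of `MediaCodec.BUFFER_FLAG_CODEC_CONFIG` (2), and the helper name is hypothetical.

```java
/** Hypothetical filter deciding whether an encoder output buffer goes to the muxer. */
final class MuxerFilter {
    private MuxerFilter() {}

    // Mirrors android.media.MediaCodec.BUFFER_FLAG_CODEC_CONFIG so this sketch runs without Android.
    static final int BUFFER_FLAG_CODEC_CONFIG = 2;

    /** Returns true if a buffer with these BufferInfo flags and size should be muxed. */
    static boolean shouldWriteSample(int flags, int size, boolean muxerStarted) {
        if ((flags & BUFFER_FLAG_CODEC_CONFIG) != 0) {
            return false;  // SPS/PPS: already supplied to the muxer via the track format
        }
        return muxerStarted && size > 0;
    }
}
```

In `sendToWifi`, the equivalent check would be `shouldWriteSample(mBufferInfo.flags, mBufferInfo.size, !muxerNotStarted)` before calling `writeSampleData`.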

If you've made it this far, you're a gentleman (or lady!) and a scholar! Basically, I'm stumped as to why my image is not coming out properly. I'm relatively new to video processing, so I'm sure I'm just missing something.