I have developed a small reinforcement learning exercise. The problem is that the training accuracy drops sharply after I restart training, and I don't understand why.

The environment: I use keras-rl with a simple neural network model and a DQNAgent.

```python
from keras.models import Sequential
from keras.layers import Dense, Activation, Flatten
from keras.optimizers import Adam

from rl.agents.dqn import DQNAgent
from rl.policy import BoltzmannQPolicy
from rl.memory import SequentialMemory

# Model builder defined elsewhere in my code (not shown)
model = createModel_SlotSel_drn_v2(None, env)

# Finally, we configure and compile our agent. You can use every built-in Keras optimizer and even the metrics!
memory = SequentialMemory(limit=5000000, window_length=1)  # replay buffer of up to 5M transitions
policy = BoltzmannQPolicy()
dqn = DQNAgent(model=model, nb_actions=nb_actions, memory=memory,
               nb_steps_warmup=130,       # steps collected before training starts
               target_model_update=1e-3,  # soft target-network update rate
               policy=policy)
dqn.compile(Adam(lr=1e-4), metrics=['categorical_accuracy'])

...

h = dqn.fit(env, nb_steps=steps, visualize=False, verbose=1)
```
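As far as I can tell from the keras-rl source, a `target_model_update` value below 1 is used as a soft-update rate τ (a value of 1 or more instead means a hard copy every that many steps). With `1e-3`, each training step moves the target network only 0.1% of the way toward the online network. The NumPy sketch below illustrates that rule on plain arrays; `soft_update` is a hypothetical helper, not part of keras-rl.

```python
import numpy as np


def soft_update(target_weights, online_weights, tau):
    """Move each target array a fraction tau toward the online array:
    target <- tau * online + (1 - tau) * target."""
    return [tau * w + (1.0 - tau) * t for t, w in zip(target_weights, online_weights)]


# Toy example: one "layer" whose target starts at 0 while the online weights sit at 1.
target = [np.zeros(3)]
online = [np.ones(3)]
for _ in range(1000):
    target = soft_update(target, online, tau=1e-3)

# After n updates the remaining gap is (1 - tau)**n, i.e. about 37% after 1000 updates.
print(target[0])  # each entry is 1 - (1 - 1e-3)**1000, roughly 0.632
```

Because the gap shrinks only by a factor of (1 - τ) per update, a change in the online weights takes on the order of a thousand training steps to reach the target network, so how the target network is initialised after restoring weights matters. As far as I know, `dqn.load_weights(...)` also resyncs the target network, whereas loading weights into `model` after `dqn.compile(...)` may not.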

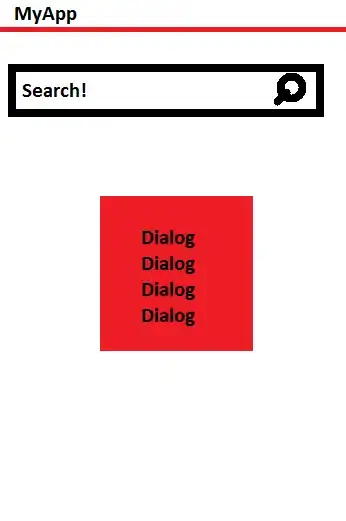

I can measure the model's accuracy exactly, so I take a measurement every 10k steps. At the beginning, the memory is empty and the weights are all zero. The next graph shows the accuracy over the first 120 × 10k steps (1.2 million steps).

The model learns up to a certain level, and the best weights are saved.
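The measure-every-10k-steps loop with best-weight tracking described above can be sketched as follows. `train_chunk`, `measure_accuracy`, and `get_weights` are hypothetical callables standing in for something like `dqn.fit(env, nb_steps=10000, ...)`, my own accuracy measurement, and `model.get_weights()`; the stub agent at the bottom only exists to exercise the loop.

```python
import copy


def train_with_checkpoints(train_chunk, measure_accuracy, get_weights,
                           n_chunks=120, chunk_steps=10_000):
    """Train in fixed-size chunks, measure accuracy after each chunk, keep the best weights.

    Returns (history, best_acc, best_weights); history holds (total_steps, accuracy) pairs.
    """
    history = []
    best_acc, best_weights = float("-inf"), None
    for i in range(1, n_chunks + 1):
        train_chunk(chunk_steps)           # e.g. dqn.fit(env, nb_steps=chunk_steps, ...)
        acc = measure_accuracy()           # the author's own accuracy measurement
        history.append((i * chunk_steps, acc))
        if acc > best_acc:                 # store a copy so later training cannot alter it
            best_acc, best_weights = acc, copy.deepcopy(get_weights())
    return history, best_acc, best_weights


# Stub agent whose accuracy rises until 600k steps and then slowly degrades.
state = {"steps": 0}


def fake_train(n_steps):
    state["steps"] += n_steps


def fake_accuracy():
    s = state["steps"]
    return min(s, 600_000) / 1e6 - max(0, s - 600_000) / 4e6


def fake_weights():
    return [state["steps"]]


hist, best, weights = train_with_checkpoints(fake_train, fake_accuracy, fake_weights)
print(len(hist), hist[-1][0], best, weights)  # 120 1200000 0.6 [600000]
```

Keeping a deep copy rather than a reference matters because the live weights keep changing after the best measurement.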

What I don't understand is this: when I restart the training session after a break of a few days, I restore the weights, but the memory is empty again. The model's accuracy then drops sharply, and on top of that, it never reaches the accuracy it had before. See the next figure, with the big drop at the beginning:

I expected that, with the weights restored, the training results would not be significantly worse than before, but that is not the case. An empty SequentialMemory apparently causes a drop in training performance, and training may not return to the previous level.
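One way to test the empty-memory hypothesis would be to persist the replay memory alongside the weights and reload both on restart. The sketch below shows that round trip with the standard `pickle` module, using a simplified, hypothetical `ReplayBuffer` class in place of keras-rl's `SequentialMemory` (whose internals may differ).

```python
import os
import pickle
import random
import tempfile


class ReplayBuffer:
    """Fixed-size FIFO replay buffer (a simplified stand-in, not keras-rl's SequentialMemory)."""

    def __init__(self, limit):
        self.limit = limit
        self.data = []
        self.next_idx = 0  # slot to overwrite once the buffer is full

    def append(self, transition):
        if len(self.data) < self.limit:
            self.data.append(transition)
        else:
            self.data[self.next_idx] = transition  # overwrite the oldest transition
        self.next_idx = (self.next_idx + 1) % self.limit

    def sample(self, batch_size):
        return random.sample(self.data, batch_size)

    def __len__(self):
        return len(self.data)


def save_buffer(buffer, path):
    with open(path, "wb") as f:
        pickle.dump(buffer, f)


def load_buffer(path):
    with open(path, "rb") as f:
        return pickle.load(f)


# Fill a tiny buffer past its limit, save it, and restore it as after a restart.
buf = ReplayBuffer(limit=5)
for t in range(7):
    buf.append((t, 0, 1.0, t + 1, False))  # (state, action, reward, next_state, done)

path = os.path.join(tempfile.mkdtemp(), "memory.pkl")
save_buffer(buf, path)
restored = load_buffer(path)
print(len(restored), sorted(tr[0] for tr in restored.data))  # 5 [2, 3, 4, 5, 6]
```

With keras-rl, the analogous step would be to pickle the `memory` object next to the saved weights and pass the unpickled object as `memory=` when building the `DQNAgent` again. Whether `SequentialMemory` pickles cleanly, and how large the file gets with `limit=5000000`, is an assumption to check for the keras-rl version in use.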

Any hints?

Cheers, Ferenc