I will start by quoting the paper "Towards Evaluating the Robustness of Neural Networks" by Carlini and Wagner (page 2, last paragraph): "the adversary has complete access to a neural network, including the architecture and all parameters, and can use this in a white-box manner. This is a conservative and realistic assumption: prior work has shown it is possible to train a substitute model given black-box access to a target model, and by attacking the substitute model, we can then transfer these attacks to the target model."

This gives the following two definitions:

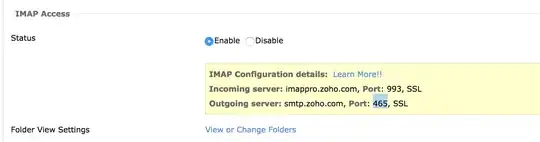

White-box: the attacker has full knowledge of the ML algorithm, the ML model (i.e., parameters and hyperparameters), the architecture, etc. The figure below shows an example of how white-box attacks work:

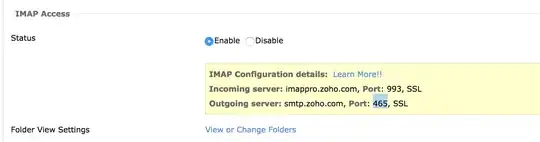
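To make the white-box case concrete, here is a minimal NumPy sketch of the Fast Gradient Sign Method (FGSM, from the first FastGradientMethod paper listed below) against a toy binary logistic-regression model. The weights, input and epsilon are hypothetical, and this is not cleverhans' own implementation. The point is that the attacker reads the model parameters directly and computes the exact input gradient, then steps `x_adv = x + eps * sign(grad_x L)`.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def fgsm_white_box(x, y, w, b, eps):
    """FGSM against a binary logistic-regression model with known w and b.

    With p = sigmoid(w.x + b) and binary cross-entropy loss
    L = -[y*log(p) + (1 - y)*log(1 - p)], the input gradient is (p - y) * w,
    so the attacker can compute it exactly from the model parameters.
    """
    p = sigmoid(x @ w + b)
    grad_x = (p - y) * w
    return x + eps * np.sign(grad_x)


# Hypothetical toy model and input, for illustration only.
w = np.array([2.0, -1.0, 0.5])
b = 0.0
x = np.array([0.5, 0.2, 0.1])  # logit = 0.85, so the model predicts class 1
y = 1.0

x_adv = fgsm_white_box(x, y, w, b, eps=0.3)
print(sigmoid(x @ w + b) > 0.5, sigmoid(x_adv @ w + b) > 0.5)  # True False
```

Each feature moves by exactly `eps`, yet because every step pushes the logit in the worst direction for the model, the prediction flips from class 1 to class 0.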

Black-box: the attacker knows almost nothing about the ML system (perhaps the number of features or the ML algorithm). The figure below shows the steps as an example:

Section 3.4, "Demonstration of Black Box Adversarial Attack in the Physical World", of the paper "Adversarial Examples in the Physical World" by Kurakin et al. (2017) states the following:

Paragraph 1 on page 9 describes the white-box meaning: "The experiments described above study physical adversarial examples under the assumption that adversary has full access to the model (i.e. the adversary knows the architecture, model weights, etc.)."

It then explains the black-box meaning: "However, the black box scenario, in which the attacker does not have access to the model, is a more realistic model of many security threats."
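The substitute-model idea from the Carlini and Wagner quote at the start is what makes the black-box scenario workable. Below is a minimal NumPy sketch, assuming a hypothetical linear target that only returns hard 0/1 labels: the attacker queries it, trains a logistic-regression substitute on the returned labels, computes FGSM (`x + eps * sign(grad_x L)`) on the substitute, and sends the result to the target. The target weights, query budget and `eps` are made up for illustration; real substitute attacks use neural networks and smarter query selection, and this is not cleverhans' `mnist_blackbox` code.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hidden target model (hypothetical). The attacker never reads these values.
_W_TRUE = np.array([1.5, -2.0, 0.5, 1.0])
_B_TRUE = 0.2


def target_predict(X):
    """Black-box oracle: returns only hard 0/1 labels for a batch of inputs."""
    return (X @ _W_TRUE + _B_TRUE > 0).astype(float)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def train_substitute(X, y, lr=0.5, steps=500):
    """Fit a logistic-regression substitute by gradient descent on (query, label) pairs."""
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(steps):
        p = sigmoid(X @ w + b)
        w -= lr * X.T @ (p - y) / len(y)
        b -= lr * np.mean(p - y)
    return w, b


def fgsm(x, y, w, b, eps):
    """FGSM step using the substitute's cross-entropy input gradient (p - y) * w."""
    p = sigmoid(x @ w + b)
    return x + eps * np.sign((p - y) * w)


# 1. Query the target on attacker-chosen inputs and record only its labels.
X_query = rng.normal(size=(500, 4))
y_query = target_predict(X_query)

# 2. Train the substitute on those labels.
w_sub, b_sub = train_substitute(X_query, y_query)

# 3. Craft the adversarial example on the substitute, then send it to the target.
x = np.array([0.3, 0.1, 0.2, 0.1])
y = target_predict(x[None, :])[0]
x_adv = fgsm(x, y, w_sub, b_sub, eps=0.3)
print("target label before:", y, "after:", target_predict(x_adv[None, :])[0])
```

The attack never touches `_W_TRUE`; it only needs the substitute's gradient to point in roughly the same direction as the target's, which is the transferability property the quote relies on.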

Conclusion: to label an algorithm as white-box or black-box, you just change the settings for the model, that is, how much access the attacker has to it.
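One way to picture "changing the settings" is as a small threat-model record that the attack code consults. The `ThreatModel` class below is hypothetical (not part of cleverhans), and as a simplifying assumption it treats anything short of full architecture-and-weights access as black-box.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ThreatModel:
    """Hypothetical record of what the attacker is assumed to know."""
    knows_architecture: bool
    knows_parameters: bool

    def setting(self) -> str:
        # White-box: the attacker knows the architecture and the parameters (weights).
        if self.knows_architecture and self.knows_parameters:
            return "white-box"
        # Simplifying assumption: anything less is treated as black-box.
        return "black-box"

    def gradient_source(self) -> str:
        # White-box attacks differentiate the target itself; black-box attacks
        # (as in the substitute approach quoted above) differentiate a substitute.
        if self.setting() == "white-box":
            return "target model"
        return "substitute model trained on target queries"


for tm in (ThreatModel(True, True), ThreatModel(False, False)):
    print(tm, "->", tm.setting(), "/", tm.gradient_source())
```

The attack algorithm itself stays the same; only the source of the gradient it follows changes with the setting.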

Note: I don't classify each algorithm, because some of the algorithms in the cleverhans library support only white-box settings or only black-box settings. Still, this is a good start for you. If you are doing research, you need to check every single paper listed in the documentation to understand each algorithm, so that you can generate your own adversarial examples.

Resources used and interesting papers:

- BasicIterativeMethod: BasicIterativeMethod

- CarliniWagnerL2: CarliniWagnerL2

- FastGradientMethod: https://arxiv.org/pdf/1412.6572.pdf https://arxiv.org/pdf/1611.01236.pdf

- SaliencyMapMethod: SaliencyMapMethod

- VirtualAdversarialMethod: VirtualAdversarialMethod

- Fgsm Fast Gradient Sign Method: Fgsm Fast Gradient Sign Method

- Jsma Jacobian-based saliency map approach: JSMA in white-box setting

- Vatm virtual adversarial training: Vatm virtual adversarial training

- Adversarial Machine Learning: An Introduction, with slides from Binghui Wang

- mnist_blackbox

- mnist_tutorial_cw