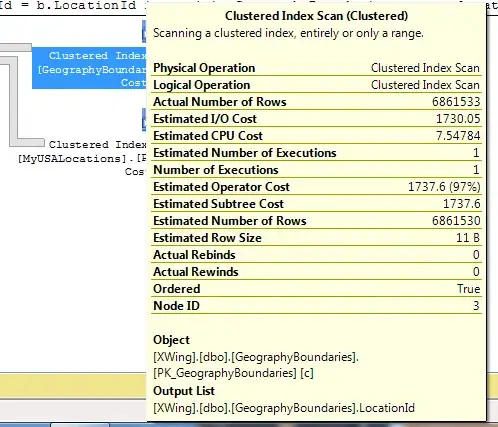

I have a typical batch job that reads CSV files from cloud storage and then does a series of joins and aggregations. The whole input does not exceed 3 GB, but I keep getting an OOM exception when writing the result back to storage. I have two executors, each with 80 GB of RAM, so this just doesn't make sense to me. The screenshot above shows my Spark UI and the exception. Any suggestion is appreciated. If my code is badly sub-optimal in terms of memory, why doesn't that show up in the Spark UI?

Update: the source code is too convoluted to show here, but I figured out that the essential cause is the repeated joins.

    Dataset<Row> ret = ...; // some DataFrame

    for (String cmd : cmds) {
        ret = ret.join(processDataset(ret, cmd), "primary_key");
    }

Each `processDataset(ret, cmd)` call is very fast when run on its own, but when the join is repeated in this kind of for loop many times, say 10 or 20, the job gets much, much slower and runs into these OOM issues.
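My guess at why the loop hurts so much: `ret` appears on both sides of every join, so the query plan behind `ret` may roughly double on each iteration. Here is a toy model of that in plain Java. It does not use Spark at all; the class `PlanGrowth` and its methods are hypothetical, and it assumes that `processDataset` adds a single operator on top of its input and that a join is a single operator over both inputs. The real plan will differ in detail.

```java
// Toy model only: counts operators in a hypothetical plan tree; it does not call Spark.
class PlanGrowth {
    // Assumption: processDataset adds one operator on top of its input plan.
    static long processed(long inputNodes) {
        return inputNodes + 1;
    }

    // Assumption: a join is one operator whose children are both input plans.
    static long joined(long leftNodes, long rightNodes) {
        return leftNodes + rightNodes + 1;
    }

    // Plan nodes after running the loop `iterations` times,
    // starting from a single-node source plan.
    static long planNodes(int iterations) {
        long ret = 1;
        for (int i = 0; i < iterations; i++) {
            // Mirrors: ret = ret.join(processDataset(ret, cmd), "primary_key")
            ret = joined(ret, processed(ret));
        }
        return ret;
    }

    public static void main(String[] args) {
        for (int n : new int[] {1, 5, 10, 20}) {
            System.out.println(n + " iterations -> " + planNodes(n) + " plan nodes");
        }
    }
}
```

Under these assumptions the count works out to 3 * 2^n - 2, so 10 iterations give 3070 nodes and 20 iterations give 3145726. That would be consistent with each step being fast on its own while the loop as a whole degrades sharply.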