The original answer lacks a good example that is self-contained so here it goes:

import torch

# stack vs cat

# cat "extends" a list in the given dimension e.g. adds more rows or columns

x = torch.randn(2, 3)

print(f'{x.size()}')

# add more rows (thus increasing the dimensionality of the column space to 2 -> 6)

xnew_from_cat = torch.cat((x, x, x), 0)

print(f'{xnew_from_cat.size()}')

# add more columns (thus increasing the dimensionality of the row space to 3 -> 9)

xnew_from_cat = torch.cat((x, x, x), 1)

print(f'{xnew_from_cat.size()}')

print()

# stack serves the same role as append in lists. i.e. it doesn't change the original

# vector space but instead adds a new index to the new tensor, so you retain the ability

# get the original tensor you added to the list by indexing in the new dimension

xnew_from_stack = torch.stack((x, x, x, x), 0)

print(f'{xnew_from_stack.size()}')

xnew_from_stack = torch.stack((x, x, x, x), 1)

print(f'{xnew_from_stack.size()}')

xnew_from_stack = torch.stack((x, x, x, x), 2)

print(f'{xnew_from_stack.size()}')

# default appends at the from

xnew_from_stack = torch.stack((x, x, x, x))

print(f'{xnew_from_stack.size()}')

print('I like to think of xnew_from_stack as a \"tensor list\" that you can pop from the front')

output:

torch.Size([2, 3])

torch.Size([6, 3])

torch.Size([2, 9])

torch.Size([4, 2, 3])

torch.Size([2, 4, 3])

torch.Size([2, 3, 4])

torch.Size([4, 2, 3])

I like to think of xnew_from_stack as a "tensor list"

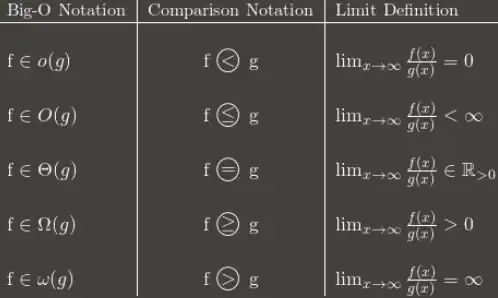

for reference here are the definitions:

cat: Concatenates the given sequence of seq tensors in the given dimension. The consequence is that a specific dimension changes size e.g. dim=0 then you are adding elements to the row which increases the dimensionality of the column space.

stack: Concatenates sequence of tensors along a new dimension. I like to think of this as the torch "append" operation since you can index/get your original tensor by "poping it" from the front. With no arguments, it appends tensors to the front of the tensor.

Related:

Update: With nested list of the same size

def tensorify(lst):

"""

List must be nested list of tensors (with no varying lengths within a dimension).

Nested list of nested lengths [D1, D2, ... DN] -> tensor([D1, D2, ..., DN)

:return: nested list D

"""

# base case, if the current list is not nested anymore, make it into tensor

if type(lst[0]) != list:

if type(lst) == torch.Tensor:

return lst

elif type(lst[0]) == torch.Tensor:

return torch.stack(lst, dim=0)

else: # if the elements of lst are floats or something like that

return torch.tensor(lst)

current_dimension_i = len(lst)

for d_i in range(current_dimension_i):

tensor = tensorify(lst[d_i])

lst[d_i] = tensor

# end of loop lst[d_i] = tensor([D_i, ... D_0])

tensor_lst = torch.stack(lst, dim=0)

return tensor_lst

here is a few unit tests (I didn't write more tests but it worked with my real code so I trust it's fine. Feel free to help me by adding more tests if you want):

def test_tensorify():

t = [1, 2, 3]

print(tensorify(t).size())

tt = [t, t, t]

print(tensorify(tt))

ttt = [tt, tt, tt]

print(tensorify(ttt))

if __name__ == '__main__':

test_tensorify()

print('Done\a')